Binary Packet Video Walkthrough

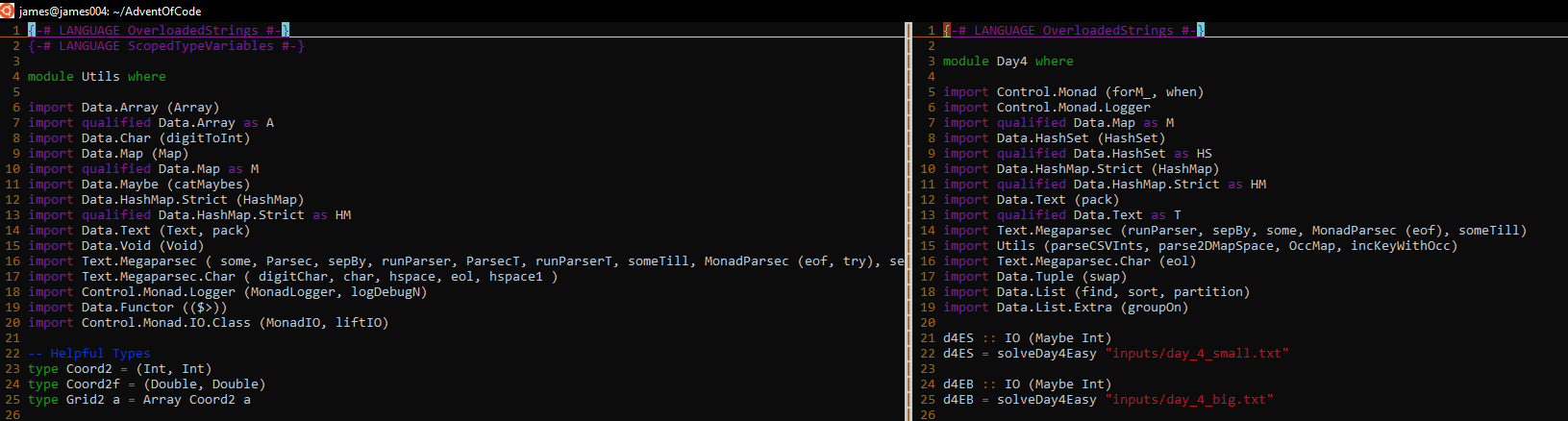

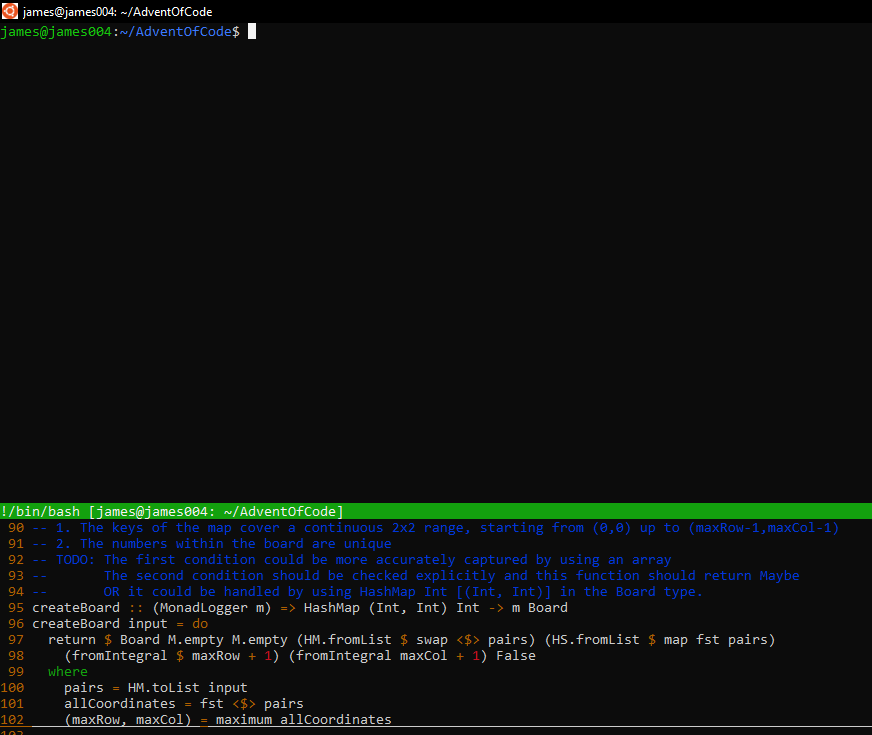

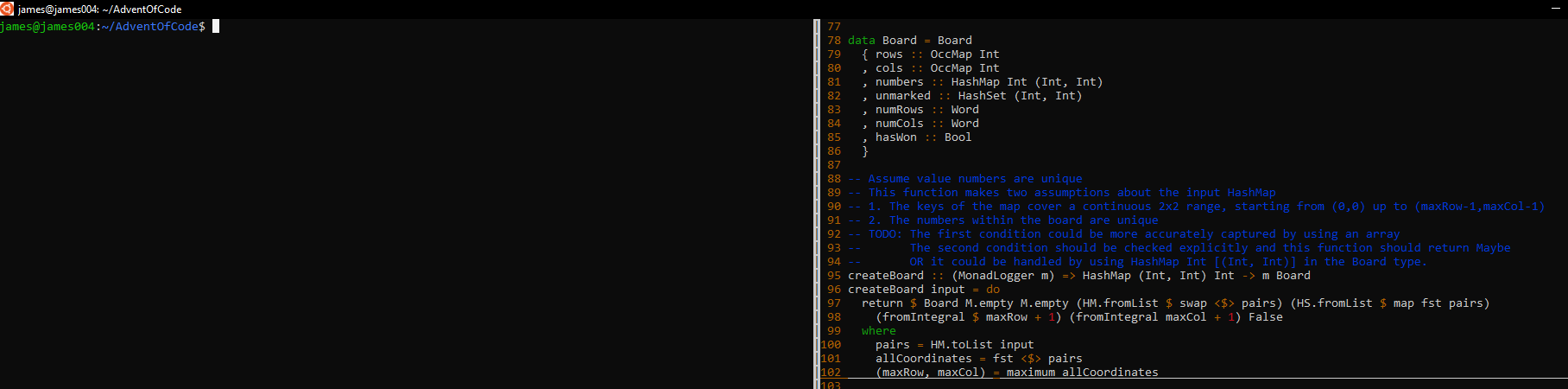

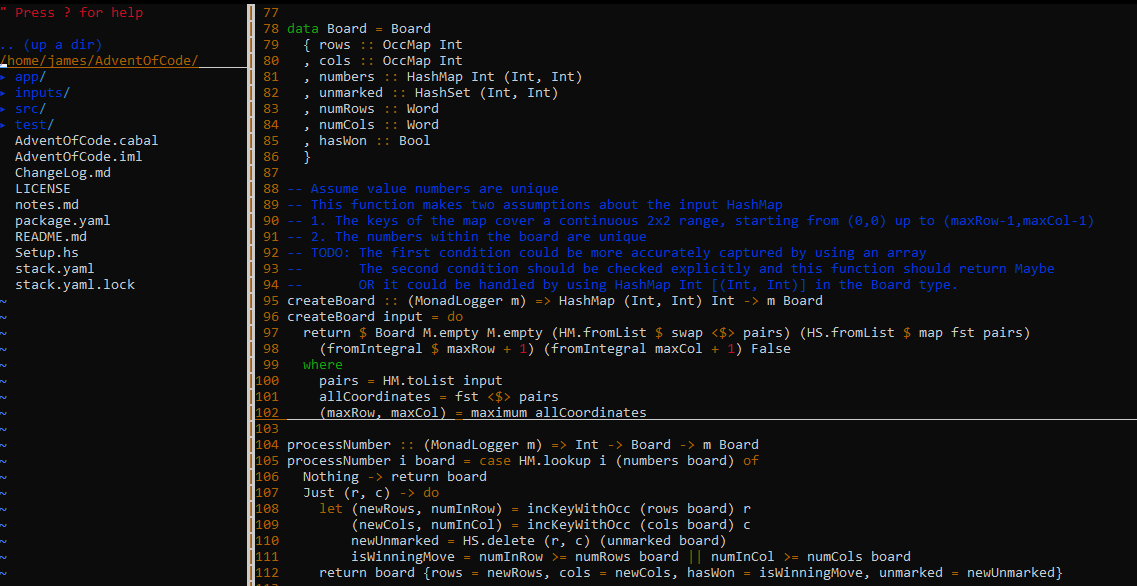

Here’s our 4th video walkthrough of some problems from last year’s Advent of Code. We had an in-depth code writeup back on Monday that you can check out. The video is here on YouTube, and you can also take a look at the code on GitHub!

If you’re enjoying this content, make sure to subscribe to our monthly newsletter! We’ll have some special offers coming out this month that you won’t want to miss!

Binary Packet Parsing

Today we're back with a new problem walkthrough, this time from Day 16 of last year's Advent of Code. In some sense, the parsing section for this problem is very easy - there's not much data to read from the file. In another sense, it's actually rather hard! This problem is about parsing a binary format, similar in some sense to how network packets work. It's a good exercise in handling a few different kinds of recursive cases.

As with the previous parts of this series, you can take a look at the code on GitHub here. This problem also has quite a few utilities, so you can observe those as well. This article is a deep-dive code walkthrough, so having the code handy to look at might be a good idea!

Problem Description

For this problem, we're decoding a binary packet. The packet is initially given as a hexadecimal string.

A0016C880162017C3686B18A3D4780

We'll turn it into binary and work strictly with ones and zeros. The decoding process gets complicated, though, because the packet is structured recursively. Let's go over the rules.

Packet Header

Every packet has a six-bit header. The first three bits give a "version number" for the packet. The next three bits give a "type ID". That part's easy.

Then there are a series of rules about the rest of the information in the packet.

Literals

If the type ID is 4, the packet is a "literal". We then parse the remainder of the packet in 5-bit chunks. The first bit tells us if it is the last chunk of the packet (0 means yes, 1 means there are more chunks). The four other bits in the chunk are used to construct the binary number that forms the "value" of the literal. The more chunks, the higher the number can be.
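To make the chunk rule concrete, here is a minimal sketch that decodes only the payload of a literal packet (the bits after the 6-bit header). It works on a plain string of '0' and '1' characters rather than the Bit type introduced later, and the names literalChunks and literalValue are made up for this illustration. It does no validation of truncated input.

```haskell
import Data.Char (digitToInt)

-- Split the payload into 5-bit chunks and keep the 4 value bits of each.
-- A leading '1' means more chunks follow; a leading '0' marks the last chunk.
literalChunks :: String -> [String]
literalChunks bits = case splitAt 5 bits of
  ('1' : value, rest) -> value : literalChunks rest
  ('0' : value, _) -> [value]
  _ -> [] -- empty or malformed input: stop

-- Concatenate the value bits and read them as one binary number.
literalValue :: String -> Integer
literalValue =
  foldl (\acc c -> acc * 2 + toInteger (digitToInt c)) 0 . concat . literalChunks
```

For the payload 101111111000101000, the chunks are 10111, 11110 and 00101. Their value bits join into 011111100101, which is 2021. The trailing 000 is padding and is never read.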

Operator Sizes

Packets that aren't literals are operators. This means they contain a variable number of subpackets.

Operators have one bit (after the 6-bit header) giving a "length" type. A length type of "1" tells us that the following 11 bits give the number of subpackets. If the length bit is "0", then the next 15 bits give the length of all the subpackets in bits.

The Packet Structure

We'll see how these work out as we parse them. But with this structure in mind, one thing we can immediately do is come up with a recursive data type for a packet. I ended up calling this PacketNode since I thought of each as a node in a tree. It's pretty easy to see how to do this. We start with a base constructor for a Literal packet that only stores the version and the packet value. Then we just add an Operator constructor that will have a list of subpackets as well as a field for the operator type.

data PacketNode =

Literal Word8 Word64 |

Operator Word8 Word8 [PacketNode]

deriving (Show)

Once we've parsed the packet, the easy part asks for the sum of all the packet versions in our packet, and the hard part asks us to calculate the packet's value recursively. When we get to that part, we'll see how we use the operators to determine the value.

Solution Approach

The initial "parsing" part of this problem is actually quite easy. But we can observe that even after we have our binary values, it's still a parsing problem! We'll have an easy enough time answering the question once we've parsed our input into a PacketNode. So the core of the problem is parsing the ones and zeros into our PacketNode.

Since this is a parsing problem, we can actually use Megaparsec for the second part, instead of only for getting the input out of the file. Here's a possible signature for our core function:

-- More on this type later

data Bit = Zero | One

parsePacketNode :: (MonadLogger m) => ParsecT Void [Bit] m PacketNode

Whereas we normally use Text as the second type parameter to ParsecT, we can also use any list type, and the library will know what to do! With this function, we'll eventually be able to break our solution into its different parts. But first, we should start with some useful helpers for all our binary parsing.

Binary Utilities

Binary logic comes up fairly often in Advent of Code, and there are quite a few different utilities we would want to use with these ones and zeros. We start with a data type to represent a single bit. For maximum efficiency, we'd want to use a BitVector, but we aren't too worried about that. So we'll make a simple type with two constructors.

data Bit = Zero | One

deriving (Eq, Ord)

instance Show Bit where

show Zero = "0"

show One = "1"

Our first order of business is turning a hexadecimal character into a list of bits. Each hexadecimal digit encodes 4 bits. So, for example, 0 should be [Zero, Zero, Zero, Zero], 1 should be [Zero, Zero, Zero, One], and F should be [One, One, One, One]. This is a simple pattern match, but we'll also have a failure case.

parseHexChar :: (MonadLogger m) => Char -> MaybeT m [Bit]

parseHexChar '0' = return [Zero, Zero, Zero, Zero]

parseHexChar '1' = return [Zero, Zero, Zero, One]

parseHexChar '2' = return [Zero, Zero, One, Zero]

parseHexChar '3' = return [Zero, Zero, One, One]

parseHexChar '4' = return [Zero, One, Zero, Zero]

parseHexChar '5' = return [Zero, One, Zero, One]

parseHexChar '6' = return [Zero, One, One, Zero]

parseHexChar '7' = return [Zero, One, One, One]

parseHexChar '8' = return [One, Zero, Zero, Zero]

parseHexChar '9' = return [One, Zero, Zero, One]

parseHexChar 'A' = return [One, Zero, One, Zero]

parseHexChar 'B' = return [One, Zero, One, One]

parseHexChar 'C' = return [One, One, Zero, Zero]

parseHexChar 'D' = return [One, One, Zero, One]

parseHexChar 'E' = return [One, One, One, Zero]

parseHexChar 'F' = return [One, One, One, One]

parseHexChar c = logErrorN ("Invalid Hex Char: " <> pack [c]) >> mzero

If we wanted, we could also include lowercase, but this problem doesn't require it.
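If lowercase input ever did matter, one option is to compute the bits instead of listing every case. The sketch below is an alternative to the pattern match above, not the code from the repository. It uses Maybe in place of the MaybeT and logging stack, and the name hexCharToBits is made up for this example.

```haskell
import Data.Bits (testBit)
import Data.Char (digitToInt, isHexDigit)

data Bit = Zero | One deriving (Eq, Show)

-- Read bits 3 down to 0 of the digit's numeric value, most significant first.
-- digitToInt accepts both 'a'..'f' and 'A'..'F'.
hexCharToBits :: Char -> Maybe [Bit]
hexCharToBits c
  | isHexDigit c = Just [if testBit n i then One else Zero | i <- [3, 2, 1, 0]]
  | otherwise = Nothing
  where
    n = digitToInt c -- only evaluated when the guard above succeeded
```

The explicit table in the article is easier to read at a glance; this version trades that for brevity and case-insensitivity.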

We also want to be able to turn a list of bits into a decimal number. We'll do this for a couple different sizes of numbers. For smaller numbers (8 bits or below), we might want to return a Word8. For larger numbers we can do Word64. Calculating the decimal number is a tail recursive process, where we track the accumulated sum and the current power of 2.

bitsToDecimal8 :: [Bit] -> Word8

bitsToDecimal8 bits = if length bits > 8

then error ("Too long! Use bitsToDecimal64! " ++ show bits)

else btd8 0 1 (reverse bits)

where

btd8 :: Word8 -> Word8 -> [Bit] -> Word8

btd8 accum _ [] = accum

btd8 accum mult (b : rest) = case b of

Zero -> btd8 accum (mult * 2) rest

One -> btd8 (accum + mult) (mult * 2) rest

bitsToDecimal64 :: [Bit] -> Word64

bitsToDecimal64 bits = if length bits > 64

then error ("Too long! Use bitsToDecimalInteger! " ++ (show $ bits))

else btd64 0 1 (reverse bits)

where

btd64 :: Word64 -> Word64 -> [Bit] -> Word64

btd64 accum _ [] = accum

btd64 accum mult (b : rest) = case b of

Zero -> btd64 accum (mult * 2) rest

One -> btd64 (accum + mult) (mult * 2) rest

Last of all, we should write a parser for reading a hexadecimal string from our file. This is easy, because Megaparsec already has a parser for a single hexadecimal character.

parseHexadecimal :: (MonadLogger m) => ParsecT Void Text m String

parseHexadecimal = some hexDigitChar

Basic Bit Parsing

With all these utilities in place, we can get started with parsing our list of bits. As mentioned above, we want a function that generally looks like this:

parsePacketNode :: (MonadLogger m) => ParsecT Void [Bit] m PacketNode

However, we need one extra nuance. Because we have one layer that will parse several consecutive packets based on the number of bits parsed, we should also return this number as part of our function. In this way, we'll be able to determine if we're done with the subpackets of an operator packet.

parsePacketNode :: (MonadLogger m) => ParsecT Void [Bit] m (PacketNode, Word64)

We'll also want a wrapper around this function so we can call it from a normal context with the list of bits as the input. This looks a lot like the existing utilities (e.g. for parsing a whole file). We use runParserT from Megaparsec and do a case-branch on the result.

parseBits :: (MonadLogger m) => [Bit] -> MaybeT m PacketNode

parseBits bits = do

result <- runParserT parsePacketNode "Utils.hs" bits

case result of

Left e -> logErrorN ("Failed to parse: " <> (pack . show $ e)) >> mzero

Right (packet, _) -> return packet

We ignore the "size" of the parsed packet in the primary case, but we'll use its result in the recursive calls to parsePacketNode!

Having done this, we can now start writing basic parser functions. To parse a single bit, we'll just wrap the anySingle combinator from Megaparsec.

parseBit :: ParsecT Void [Bit] m Bit

parseBit = anySingle

If we want to parse a certain number of bits, we'll want to use the monadic count combinator. Let's write a function that parses three bits and turns them into a Word8, since we'll need this for the packet version and type ID.

parse3Bit :: ParsecT Void [Bit] m Word8

parse3Bit = bitsToDecimal8 <$> count 3 parseBit

We can then immediately use this to start filling in our parsing function!

parsePacketNode :: (MonadLogger m) => ParsecT Void [Bit] m (PacketNode, Word64)

parsePacketNode = do

packetVersion <- parse3Bit

packetTypeId <- parse3Bit

...

Then the rest of the function will depend upon the different cases we might parse.

Parsing a Literal

We can start with the "literal" case. This parses the "value" contained within the packet. We need to track the number of bits we parse so we can use this result in our parent function!

parseLiteral :: ParsecT Void [Bit] m (Word64, Word64)

As explained above, we examine chunks 5 bits at a time, and we end the packet once we have a chunk that starts with 0. This is a "while" loop pattern, which suggests tail recursion as our solution!

We'll have two accumulator arguments. First, the series of bits that contribute to our literal value. Second, the number of bits we've parsed so far (which must include the signal bit).

parseLiteral :: ParsecT Void [Bit] m (Word64, Word64)

parseLiteral = parseLiteralTail [] 0

where

parseLiteralTail :: [Bit] -> Word64 -> ParsecT Void [Bit] m (Word64, Word64)

parseLiteralTail accumBits numBits = do

...

First, we'll parse the leading bit, followed by the four bits in the chunk value. We append these to our previously accumulated bits, and add 5 to the number of bits parsed:

parseLiteral :: ParsecT Void [Bit] m (Word64, Word64)

parseLiteral = parseLiteralTail [] 0

where

parseLiteralTail :: [Bit] -> Word64 -> ParsecT Void [Bit] m (Word64, Word64)

parseLiteralTail accumBits numBits = do

leadingBit <- parseBit

nextBits <- count 4 parseBit

let accum' = accumBits ++ nextBits

let numBits' = numBits + 5

...

If the leading bit is 0, we're done! We can return our value by converting our accumulated bits to decimal. Otherwise, we recurse with our new values.

parseLiteral :: ParsecT Void [Bit] m (Word64, Word64)

parseLiteral = parseLiteralTail [] 0

where

parseLiteralTail :: [Bit] -> Word64 -> ParsecT Void [Bit] m (Word64, Word64)

parseLiteralTail accumBits numBits = do

leadingBit <- parseBit

nextBits <- count 4 parseBit

let accum' = accumBits ++ nextBits

let numBits' = numBits + 5

if leadingBit == Zero

then return (bitsToDecimal64 accum', numBits')

else parseLiteralTail accum' numBits'

Then it's very easy to incorporate this into our primary function. We check the type ID, and if it's "4" (for a literal), we call this function, and return with the Literal packet constructor.

parsePacketNode :: (MonadLogger m) => ParsecT Void [Bit] m (PacketNode, Word64)

parsePacketNode = do

packetVersion <- parse3Bit

packetTypeId <- parse3Bit

if packetTypeId == 4

then do

(literalValue, literalBits) <- parseLiteral

return (Literal packetVersion literalValue, literalBits + 6)

else

...

Now we need to consider the "operator" cases and their subpackets.

Parsing from Number of Packets

We'll start with the simpler of these two cases, which is when we are parsing a specific number of subpackets. The first step, of course, is to parse the length type bit.

parsePacketNode :: (MonadLogger m) => ParsecT Void [Bit] m (PacketNode, Word64)

parsePacketNode = do

packetVersion <- parse3Bit

packetTypeId <- parse3Bit

if packetTypeId == 4

then do

(literalValue, literalBits) <- parseLiteral

return (Literal packetVersion literalValue, literalBits + 6)

else do

lengthTypeId <- parseBit

if lengthTypeId == One

then do

...

First, we have to count out 11 bits and use that to determine the number of subpackets. Once we have this number, we just have to recursively call the parsePacketNode function the given number of times.

parsePacketNode :: (MonadLogger m) => ParsecT Void [Bit] m (PacketNode, Word64)

parsePacketNode = do

...

if packetTypeId == 4

then ...

else do

lengthTypeId <- parseBit

if lengthTypeId == One

then do

numberOfSubpackets <- bitsToDecimal64 <$> count 11 parseBit

subPacketsWithLengths <- replicateM (fromIntegral numberOfSubpackets) parsePacketNode

...

We'll unzip these results to get our list of packets and the lengths. To get our final packet length, we take the sum of the sizes, but we can't forget to add the header bits and the length type bit (7 bits), and the bits from the number of subpackets (11).

parsePacketNode :: (MonadLogger m) => ParsecT Void [Bit] m (PacketNode, Word64)

parsePacketNode = do

...

if packetTypeId == 4

then ...

else do

lengthTypeId <- parseBit

if lengthTypeId == One

then do

numberOfSubpackets <- bitsToDecimal64 <$> count 11 parseBit

subPacketsWithLengths <- replicateM (fromIntegral numberOfSubpackets) parsePacketNode

let (subPackets, lengths) = unzip subPacketsWithLengths

return (Operator packetVersion packetTypeId subPackets, sum lengths + 7 + 11)

else

...

Parsing from Number of Bits

Parsing based on the number of bits in all the subpackets is a little more complicated, because we have more state to track. As we loop through the different subpackets, we need to keep track of how many bits we still have to parse. So we'll make a separate helper function.

parseForPacketLength :: (MonadLogger m) => Int -> Word64 -> [PacketNode] -> ParsecT Void [Bit] m ([PacketNode], Word64)

parseForPacketLength remainingBits accumBits prevPackets = ...

The base case comes when we have 0 bits remaining. Ideally, this occurs with exactly 0 bits. If it's a negative number, this is a problem. But if it's successful, we'll reverse the accumulated packets and return the number of bits we've accumulated.

parseForPacketLength :: (MonadLogger m) => Int -> Word64 -> [PacketNode] -> ParsecT Void [Bit] m ([PacketNode], Word64)

parseForPacketLength remainingBits accumBits prevPackets = if remainingBits <= 0

then do

if remainingBits < 0

then error "Failing"

else return (reverse prevPackets, accumBits)

else ...

In the recursive case, we make one new call to parsePacketNode (the original function, not this helper). This gives us a new packet, and some more bits that we've parsed (this is why we've been tracking that number the whole time). So we can subtract the size from the remaining bits, and add it to the accumulated bits. And then we'll make the actual recursive call to this helper function.

parseForPacketLength :: (MonadLogger m) => Int -> Word64 -> [PacketNode] -> ParsecT Void [Bit] m ([PacketNode], Word64)

parseForPacketLength remainingBits accumBits prevPackets = if remainingBits <= 0

then do

if remainingBits < 0

then error "Failing"

else return (reverse prevPackets, accumBits)

else do

(newPacket, size) <- parsePacketNode

parseForPacketLength (remainingBits - fromIntegral size) (accumBits + fromIntegral size) (newPacket : prevPackets)

And that's all! All our different pieces fit together now and we're able to parse our packet!
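To see the pieces in one place, here is a dependency-free sketch of the same structure. It is not the Megaparsec code from the repository: the parser is a plain function from a bit list to Maybe a result plus the remaining bits, there is no logging, and the names BitParser, takeBits, byCount and byLength are assumptions made for this illustration. It includes the 15-bit branch, which hands the total subpacket length to a helper that loops until no bits remain.

```haskell
import Data.Bits (testBit)
import Data.Char (digitToInt, isHexDigit)
import Data.Word (Word8, Word64)

data Bit = Zero | One deriving (Eq, Show)

data PacketNode
  = Literal Word8 Word64
  | Operator Word8 Word8 [PacketNode]
  deriving (Eq, Show)

-- Consume some bits; return a result and the unconsumed rest.
type BitParser a = [Bit] -> Maybe (a, [Bit])

hexToBits :: String -> Maybe [Bit]
hexToBits = fmap concat . mapM charBits
  where
    charBits c
      | isHexDigit c = Just [if testBit (digitToInt c) i then One else Zero | i <- [3, 2, 1, 0]]
      | otherwise = Nothing

bitsToNum :: Num a => [Bit] -> a
bitsToNum = foldl (\acc b -> acc * 2 + (if b == One then 1 else 0)) 0

takeBits :: Int -> BitParser [Bit]
takeBits n bits
  | length front == n = Just (front, rest)
  | otherwise = Nothing
  where
    (front, rest) = splitAt n bits

-- Returns the packet together with the number of bits it occupied.
packet :: BitParser (PacketNode, Int)
packet bits0 = do
  (v, bits1) <- takeBits 3 bits0
  (t, bits2) <- takeBits 3 bits1
  let version = bitsToNum v :: Word8
      typeId = bitsToNum t :: Word8
  if typeId == 4
    then do
      ((value, n), rest) <- literal [] 0 bits2
      Just ((Literal version value, n + 6), rest)
    else do
      (lengthType, bits3) <- takeBits 1 bits2
      if lengthType == [One]
        then do
          (c, bits4) <- takeBits 11 bits3
          ((subs, n), rest) <- byCount (bitsToNum c) bits4
          Just ((Operator version typeId subs, n + 7 + 11), rest)
        else do
          (l, bits4) <- takeBits 15 bits3
          ((subs, n), rest) <- byLength (bitsToNum l) bits4
          Just ((Operator version typeId subs, n + 7 + 15), rest)

-- Accumulate 4 value bits per 5-bit chunk until a chunk starts with Zero.
literal :: [Bit] -> Int -> BitParser (Word64, Int)
literal acc n bits = do
  (chunk, rest) <- takeBits 5 bits
  let acc' = acc ++ drop 1 chunk
      n' = n + 5
  if take 1 chunk == [Zero]
    then Just ((bitsToNum acc', n'), rest)
    else literal acc' n' rest

-- Parse exactly k subpackets.
byCount :: Int -> BitParser ([PacketNode], Int)
byCount 0 bits = Just (([], 0), bits)
byCount k bits = do
  ((p, n), rest) <- packet bits
  ((ps, m), rest') <- byCount (k - 1) rest
  Just ((p : ps, n + m), rest')

-- Parse subpackets until exactly `remaining` bits have been consumed.
byLength :: Int -> BitParser ([PacketNode], Int)
byLength remaining bits
  | remaining == 0 = Just (([], 0), bits)
  | remaining < 0 = Nothing -- a subpacket overran the declared length
  | otherwise = do
      ((p, n), rest) <- packet bits
      ((ps, m), rest') <- byLength (remaining - n) rest
      Just ((p : ps, n + m), rest')

parseHexPacket :: String -> Maybe PacketNode
parseHexPacket hex = do
  bits <- hexToBits hex
  ((p, _), _) <- packet bits
  Just p
```

Running parseHexPacket on 38006F45291200 exercises the 15-bit branch: the declared length is 27 bits, which covers an 11-bit literal (10) and a 16-bit literal (20). Running it on EE00D40C823060 exercises the 11-bit branch with three literal subpackets.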

Solving the Problems

Now that we've parsed the packet into our structure, the rest of the problem is actually quite easy and fun! We've created a straightforward recursive structure, and so we can loop through it in a straightforward recursive way. We'll always use the Literal as the base case, and then loop through the list of subpackets for the recursive case.

Let's start with summing the packet versions. This will return a Word64 since we could be adding a lot of packet versions. With a Literal packet, we just immediately return the version.

sumPacketVersions :: PacketNode -> Word64

sumPacketVersions (Literal v _) = fromIntegral v

...

Then with operator packets, we just map over the sub-packets, take the sum of their versions, and then add the original packet's version.

sumPacketVersions :: PacketNode -> Word64

sumPacketVersions (Literal v _) = fromIntegral v

sumPacketVersions (Operator v _ packets) = fromIntegral v +

sum (map sumPacketVersions packets)

Now, for calculating the final packet value, we again start with the Literal case, since we'll just return its value. Note that we'll do this monadically, since we'll have some failure conditions in the later parts.

calculatePacketValue :: MonadLogger m => PacketNode -> MaybeT m Word64

calculatePacketValue (Literal _ x) = return x

Now, for the first time in the problem, we actually have to care what the operators mean! Here's a summary of the first few operators:

0 = Sum of all subpackets

1 = Product of all subpackets

2 = Minimum of all subpackets

3 = Maximum of all subpackets

There are three other operators following the same basic pattern. They expect exactly two subpackets and perform a binary comparison. If the comparison is true, the packet value is 1. If it is false, the packet value is 0.

5 = Greater than operator (>)

6 = Less than operator (<)

7 = Equals operator (==)

For the first set of operations, we can recursively calculate the value of the sub-packets, and take the appropriate aggregate function over the list.

calculatePacketValue :: MonadLogger m => PacketNode -> MaybeT m Word64

calculatePacketValue (Literal _ x) = return x

calculatePacketValue (Operator _ 0 packets) = sum <$> mapM calculatePacketValue packets

calculatePacketValue (Operator _ 1 packets) = product <$> mapM calculatePacketValue packets

calculatePacketValue (Operator _ 2 packets) = minimum <$> mapM calculatePacketValue packets

calculatePacketValue (Operator _ 3 packets) = maximum <$> mapM calculatePacketValue packets

...

For the binary operations, we first have to verify that there are only two packets.

calculatePacketValue :: MonadLogger m => PacketNode -> MaybeT m Word64

...

calculatePacketValue (Operator _ 5 packets) = do

if length packets /= 2

then logErrorN "> operator '5' must have two packets!" >> mzero

else ...

Then we just de-structure the packets, calculate each value, compare them, and then return the appropriate value.

calculatePacketValue :: MonadLogger m => PacketNode -> MaybeT m Word64

...

calculatePacketValue (Operator _ 5 packets) = do

if length packets /= 2

then logErrorN "> operator '5' must have two packets!" >> mzero

else do

let [p1, p2] = packets

v1 <- calculatePacketValue p1

v2 <- calculatePacketValue p2

return (if v1 > v2 then 1 else 0)

calculatePacketValue (Operator _ 6 packets) = do

if length packets /= 2

then logErrorN "< operator '6' must have two packets!" >> mzero

else do

let [p1, p2] = packets

v1 <- calculatePacketValue p1

v2 <- calculatePacketValue p2

return (if v1 < v2 then 1 else 0)

calculatePacketValue (Operator _ 7 packets) = do

if length packets /= 2

then logErrorN "== operator '7' must have two packets!" >> mzero

else do

let [p1, p2] = packets

v1 <- calculatePacketValue p1

v2 <- calculatePacketValue p2

return (if v1 == v2 then 1 else 0)

calculatePacketValue p = do

logErrorN ("Invalid packet! " <> (pack . show $ p))

mzero

Concluding Code

To tie everything together, we just follow the steps.

- Parse the hexadecimal from the file

- Transform the hexadecimal string into a list of bits

- Parse the packet

- Answer the question

For the first part, we use sumPacketVersions on the resulting packet.

solveDay16Easy :: String -> IO (Maybe Int)

solveDay16Easy fp = runStdoutLoggingT $ do

hexLine <- parseFile parseHexadecimal fp

result <- runMaybeT $ do

bitLine <- concatMapM parseHexChar hexLine

packet <- parseBits bitLine

return $ sumPacketVersions packet

return (fromIntegral <$> result)

And the "hard" solution is the same, except we use calculatePacketValue instead.

solveDay16Hard :: String -> IO (Maybe Int)

solveDay16Hard fp = runStdoutLoggingT $ do

hexLine <- parseFile parseHexadecimal fp

result <- runMaybeT $ do

bitLine <- concatMapM parseHexChar hexLine

packet <- parseBits bitLine

calculatePacketValue packet

return (fromIntegral <$> result)

And we're done!
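As a quick sanity check of both parts, here is a small pure sketch that works on a hand-built packet tree. It uses Maybe instead of the MaybeT and logging stack, and folds the three comparison operators into one case expression. That is a design variation for illustration, not the repository code. The names sumVersions, evalPacket and example are made up here.

```haskell
import Data.Word (Word8, Word64)

data PacketNode
  = Literal Word8 Word64
  | Operator Word8 Word8 [PacketNode]
  deriving (Show)

sumVersions :: PacketNode -> Word64
sumVersions (Literal v _) = fromIntegral v
sumVersions (Operator v _ ps) = fromIntegral v + sum (map sumVersions ps)

-- Evaluate subpackets first, then dispatch on the operator type ID.
-- Nothing signals an unknown operator or the wrong number of operands.
evalPacket :: PacketNode -> Maybe Word64
evalPacket (Literal _ x) = Just x
evalPacket (Operator _ t ps) = do
  vals <- mapM evalPacket ps
  case (t, vals) of
    (0, _) -> Just (sum vals)
    (1, _) -> Just (product vals)
    (2, _ : _) -> Just (minimum vals)
    (3, _ : _) -> Just (maximum vals)
    (5, [a, b]) -> Just (if a > b then 1 else 0)
    (6, [a, b]) -> Just (if a < b then 1 else 0)
    (7, [a, b]) -> Just (if a == b then 1 else 0)
    _ -> Nothing

-- A "less than" operator (type 6) over the literals 10 and 20.
example :: PacketNode
example = Operator 1 6 [Literal 6 10, Literal 2 20]
```

For example, the version sum is 1 + 6 + 2 = 9, and the value is 1 because 10 is less than 20.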

Conclusion

That's all for this solution! As always, you can take a look at the code on GitHub. Later this week I'll have the video walkthrough as well. To keep up with all the latest news, make sure to subscribe to our monthly newsletter! Subscribing will give you access to our subscriber resources, like our Beginners Checklist and our Production Checklist.

Polymer Expansion Video Walkthrough

Earlier this week I did a write-up of the Day 14 Problem from Advent of Code 2021. Today, I’m releasing a video walkthrough that you can watch here on YouTube!

If you’re enjoying this content, make sure to subscribe to our monthly newsletter! This will give you access to our subscriber resources!

Polymer Expansion

Today we're back with another Advent of Code walkthrough. We're doing the problem from Day 14 of last year. Here are a couple previous walkthroughs:

If you want to see the code for today, you can find it here on GitHub!

If you're enjoying these problem overviews, make sure to subscribe to our monthly newsletter!

Problem Statement

The subject of today's problem is "polymer expansion". What this means in programming terms is that we'll be taking a string and inserting new characters into it based on side-by-side pairs.

The puzzle input looks like this:

NNCB

NN -> C

NC -> B

CB -> H

...

The top line of the input is our "starter string". It's our base for expansion. The lines that follow are codes that explain how to expand each pair of characters.

So in our original string of four characters (NNCB), there are three pairs: NN, NC, and CB. With the exception of the start and end characters, each character appears in two different pairs. So for each pair, we find the corresponding "insertion character" and construct a new string where all the insertion characters come between their parent pairs. The first pair gives us a C, the second pair gives us a new B, and the third pair gets us a new H.

So our string for the second step becomes: NCNBCHB. We'll then repeat the expansion a certain number of times.

For the first part, we'll run 10 steps of the expansion algorithm. For the second part, we'll do 40 steps. Each time, our final answer comes from taking the number of occurrences of the most common letter in the final string, and subtracting the occurrences of the least common letter.
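The scoring rule is easy to check on a small string. The sketch below counts letters with Data.Map from the containers package and subtracts the smallest count from the largest. The names charCounts and score are made up for this illustration, and returning 0 for an empty string is an arbitrary choice.

```haskell
import qualified Data.Map as M

-- Count how often each character occurs.
charCounts :: String -> M.Map Char Integer
charCounts = M.fromListWith (+) . map (\c -> (c, 1))

-- Most common count minus least common count.
score :: String -> Integer
score s = case M.elems (charCounts s) of
  [] -> 0
  counts -> maximum counts - minimum counts
```

For the one-step string NCNBCHB, the letters N, C and B each occur twice and H occurs once, so the score is 2 - 1 = 1.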

Utilities

The main utility we'll end up using for this problem is an occurrence map. I decided to build a general utility for counting the number of occurrences of some item, since it's such a common pattern in these puzzles. The most generic alias we could have is a map where the key and value are parameterized, though the expectation is that i is an Integral type:

type OccMapI a i = Map a i

The most common usage is counting items up from 0. Since this is an unsigned, non-negative number, we would use Word.

type OccMap a = Map a Word

However, for today's problem, we're gonna be dealing with big numbers! So just to be safe, we'll use the unbounded Integer type, and make a separate type definition for that.

type OccMapBig a = Map a Integer

We can make a couple useful helper functions for this occurrence map. First, we can add a certain number value to a key.

addKey :: (Ord a, Integral i) => OccMapI a i -> a -> i -> OccMapI a i

addKey prevMap key count = case M.lookup key prevMap of

Nothing -> M.insert key count prevMap

Just x -> M.insert key (x + count) prevMap

We can add a specialization of this for "incrementing" a key, adding 1 to its value. We won't use this for today's solution, but it helps in a lot of cases.

incKey :: (Ord a, Integral i) => OccMapI a i -> a -> OccMapI a i

incKey prevMap key = addKey prevMap key 1

Now with our utilities out of the way, let's start parsing our input!

Parsing the Input

First off, let's define the result types of our parsing process. The starter string comes on the first line, so that's a separate String. But then we need to create a mapping between character pairs and the resulting character. We'll eventually want these in a HashMap, so let's make a type alias for that.

type PairMap = HashMap (Char, Char) Char

Now for parsing, we need to parse the start string, an empty line, and then each line of the code mapping.

Since most of the input is in the code mapping lines, let's do that first. Each line consists of parsing three characters, just separated by the arrow. This is very straightforward with Megaparsec.

parsePairCode :: (MonadLogger m) => ParsecT Void Text m (Char, Char, Char)

parsePairCode = do

input1 <- letterChar

input2 <- letterChar

string " -> "

output <- letterChar

return (input1, input2, output)

Now let's make a function to combine these character tuples into the map. This is a nice quick fold:

buildPairMap :: [(Char, Char, Char)] -> HashMap (Char, Char) Char

buildPairMap = foldl (\prevMap (c1, c2, c3) -> HM.insert (c1, c2) c3 prevMap) HM.empty

The rest of our parsing function then parses the starter string and a couple newline characters before we get our pair codes.

parseInput :: (MonadLogger m) => ParsecT Void Text m (String, PairMap)

parseInput = do

starterCode <- some letterChar

eol >> eol

pairCodes <- sepEndBy1 parsePairCode eol

return (starterCode, buildPairMap pairCodes)

Then it will be easy enough to use our parseFile function from previous days. Now let's figure out our solution approach.
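Before moving on, here is a dependency-free sketch of the same three parsing steps for readers who want to experiment without Megaparsec. It is an alternative under stated assumptions, not the code from the repository: it pattern-matches on plain strings, stores rules in a Data.Map from containers instead of a HashMap, and reports any malformed line as Nothing. The name parseRule is made up for this example.

```haskell
import qualified Data.Map as M

-- A rule line looks exactly like "NN -> C".
parseRule :: String -> Maybe ((Char, Char), Char)
parseRule [a, b, ' ', '-', '>', ' ', c] = Just ((a, b), c)
parseRule _ = Nothing

-- Starter string, one blank line, then one rule per line.
parseInput :: String -> Maybe (String, M.Map (Char, Char) Char)
parseInput input = case lines input of
  (starter : "" : ruleLines) -> do
    rules <- mapM parseRule ruleLines
    Just (starter, M.fromList rules)
  _ -> Nothing
```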

A Naive Approach

Now at first, the polymer expansion seems like a fairly simple problem. The root of the issue is that we have to write a function to run one step of the expansion. In principle, this isn't a hard function. We loop through the original string, two letters at a time, and gradually construct the new string for the next step.

One way to handle this would be with a tail recursive helper function. We could accumulate the new string (in reverse) through an accumulator argument.

runExpand :: (MonadLogger m)

=> PairMap

-> String -- Accumulator

-> String -- Remaining String

-> m String

The "base case" of this function is when we have only one character left. In this case, we append it to the accumulator and reverse it all.

runExpand :: (MonadLogger m) => PairMap -> String -> String -> m String

runExpand pairMap accum [lastChar] = return $ reverse (lastChar : accum)

For the recursive case, we imagine we have at least two characters remaining. We'll look these characters up in our map. Then we'll append the first character and the new character to our accumulator, and then recurse on the remainder (including the second character).

runExpand :: (MonadLogger m) => PairMap -> String -> String -> m String

runExpand _ accum [lastChar] = return $ reverse (lastChar : accum)

runExpand pairMap accum (firstChar: secondChar : rest) = do

let insertChar = pairMap HM.! (firstChar, secondChar)

runExpand pairMap (insertChar : firstChar : accum) (secondChar : rest)

There are some extra edge cases we could handle here, but this isn't going to be how we solve the problem. The approach works...in theory. In practice though, it only works for a small number of steps. Why? Well the problem description gives a hint: This polymer grows quickly. In fact, with each step, our string essentially doubles in size - exponential growth!

This sort of solution is good enough for the first part, running only 10 steps. However, as the string gets bigger and bigger, we'll run out of memory! So we need something more efficient.
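A little arithmetic shows how quickly this gets out of hand. Assuming every adjacent pair has an insertion rule, a string of length n has n - 1 pairs and therefore becomes length 2n - 1 after one step. Unrolling that recurrence gives a closed form, sketched below with hypothetical helper names stepLength and lengthAfter.

```haskell
-- One step: each of the (len - 1) adjacent pairs gains one inserted character.
stepLength :: Integer -> Integer
stepLength len = 2 * len - 1

-- Closed form of applying stepLength `steps` times:
-- (len - 1) doubles each step, so the result is (len - 1) * 2^steps + 1.
lengthAfter :: Int -> Integer -> Integer
lengthAfter steps len = (len - 1) * 2 ^ steps + 1
```

For the 4-character starter NNCB, that is 3 * 2^10 + 1 = 3073 characters after 10 steps, but 3 * 2^40 + 1 = 3298534883329 characters after 40 steps. That is over three trillion characters, far too many to hold as a String.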

A Better Approach

The key insight here is that we don't actually care about the order of the letters in the string at any given time. All we really need to think about is the number of each kind of pair that is present. How does this work?

Well recall some of our basic code pairs from the top:

NN -> C

NC -> B

CB -> H

BN -> B

With a starter string like NNCB, we have one NN pair, one NC pair, and one CB pair. In the next step, the NN pair generates two new pairs. Because a C is inserted between the two N's, we lose the NN pair but gain an NC pair and a CN pair. So after expansion the number of resulting NC pairs is 1, and the number of CN pairs is 1.

However, this is true of every NN pair within our string! Suppose we instead start off this with:

NNCBNN

Now there are two NN pairs, meaning the resulting string will have two NC pairs and two CN pairs, as you can see by taking a closer look at the result: NCNBCHBBNCN.

So instead of keeping the complete string in memory, all we need to do is use the "occurrence map" utility to store the number of each pair for our current state. So we'll keep folding over an object of type OccMapBig (Char, Char).
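Here is a compact sketch of that idea, written with Data.Map from containers and without logging. It is an illustration rather than the article's implementation, and the names pairCounts and step are made up here. One deliberate difference is labeled in the code: a pair with no rule is kept unchanged, which is an assumption of this sketch.

```haskell
import qualified Data.Map as M

-- Count each adjacent pair in the string.
pairCounts :: String -> M.Map (Char, Char) Integer
pairCounts s = M.fromListWith (+) [(pair, 1) | pair <- zip s (drop 1 s)]

-- One expansion step on counts: n copies of (a, b) with rule ab -> c
-- become n copies of (a, c) and n copies of (c, b).
step :: M.Map (Char, Char) Char -> M.Map (Char, Char) Integer -> M.Map (Char, Char) Integer
step rules counts = M.fromListWith (+) (concatMap expand (M.toList counts))
  where
    expand ((a, b), n) = case M.lookup (a, b) rules of
      Just c -> [((a, c), n), ((c, b), n)]
      Nothing -> [((a, b), n)] -- assumption: a pair without a rule survives as-is
```

With the four rules listed above, stepping the counts of NNCBNN gives exactly the pair counts of NCNBCHBBNCN, including two NC pairs and two CN pairs, without ever building the longer string.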

The first step of our solution then is to construct our initial mapping from the starter code. We can do this by folding through the starter string in a similar way to the example code in the naive solution. When one or zero characters are left in our "remainder", that's a base case and we can return the map.

-- Same signature as naive approach

expandPolymerLong :: (MonadLogger m) => Int -> String -> PairMap -> m (Maybe Integer)

expandPolymerLong numSteps starterCode pairMap = do

let starterMap = buildInitialMap M.empty starterCode

...

where

buildInitialMap :: OccMapBig (Char, Char) -> String -> OccMapBig (Char, Char)

buildInitialMap prevMap "" = prevMap

buildInitialMap prevMap [_] = prevMap

...

Now for the recursive case, we have at least two characters remaining, so we'll just increment the value for the key formed by these characters!

-- Same signature as naive approach

expandPolymerLong :: (MonadLogger m) => Int -> String -> PairMap -> m (Maybe Integer)

expandPolymerLong numSteps starterCode pairMap = do

let starterMap = buildInitialMap M.empty starterCode

...

where

buildInitialMap :: OccMapBig (Char, Char) -> String -> OccMapBig (Char, Char)

buildInitialMap prevMap "" = prevMap

buildInitialMap prevMap [_] = prevMap

buildInitialMap prevMap (firstChar : secondChar : rest) = buildInitialMap (incKey prevMap (firstChar, secondChar)) (secondChar : rest)

The key point, of course, is how to expand our map each step, so let's do this next!

A New Expansion

To run a single step in our naive solution, we could use a tail-recursive helper to gradually build up the new string (the "accumulator") from the old string (the "remainder" or "rest"). So our type signature looked like this:

runExpand :: (MonadLogger m)

=> PairMap

-> String -- Accumulator

-> String -- Remainder

-> m String

For our new expansion step, we're instead taking one occurrence map and transforming it into a new occurrence map. For convenience, we'll include an integer argument keeping track of which step we're on, but we won't need to use it in the function. We'll do all this within expandPolymerLong so that we have access to the PairMap argument.

expandPolymerLong :: (MonadLogger m) => Int -> String -> PairMap -> m (Maybe Integer)

expandPolymerLong numSteps starterCode pairMap = do

...

where

runStep ::(MonadLogger m) => OccMapBig (Char, Char) -> Int -> m (OccMapBig (Char, Char))

runStep = ...

The runStep function has a simple idea behind it though. We gradually reconstruct our occurrence map by folding through the pairs in the previous map. We'll make a new function runExpand to act as the folding function.

expandPolymerLong :: (MonadLogger m) => Int -> String -> PairMap -> m (Maybe Integer)

expandPolymerLong numSteps starterCode pairMap = do

...

where

runStep ::(MonadLogger m) => OccMapBig (Char, Char) -> Int -> m (OccMapBig (Char, Char))

runStep prevMap _ = foldM runExpand M.empty (M.toList prevMap)

runExpand :: (MonadLogger m) => OccMapBig (Char, Char) -> ((Char, Char), Integer) -> m (OccMapBig (Char, Char))

runExpand = ...

For this function, we begin by looking up the two-character code in our map. If for whatever reason it doesn't exist, we'll move on, but it's worth logging an error message since this isn't supposed to happen.

runExpand :: (MonadLogger m) => OccMapBig (Char, Char) -> ((Char, Char), Integer) -> m (OccMapBig (Char, Char))

runExpand prevMap (code@(c1, c2), count) = case HM.lookup code pairMap of

Nothing -> logErrorN ("Missing Code: " <> pack [c1, c2]) >> return prevMap

Just newChar -> ...

Now once we've found the new character, we'll create our first new pair and our second new pair by inserting the new character with our previous characters.

runExpand :: (MonadLogger m) => OccMapBig (Char, Char) -> ((Char, Char), Integer) -> m (OccMapBig (Char, Char))

runExpand prevMap (code@(c1, c2), count) = case HM.lookup code pairMap of

Nothing -> logErrorN ("Missing Code: " <> pack [c1, c2]) >> return prevMap

Just newChar -> do

let first = (c1, newChar)

second = (newChar, c2)

...

And to wrap things up, we add the new count value for each of our new keys to the existing map! This is done with nested calls to addKey on our occurrence map.

runExpand :: (MonadLogger m) => OccMapBig (Char, Char) -> ((Char, Char), Integer) -> m (OccMapBig (Char, Char))

runExpand prevMap (code@(c1, c2), count) = case HM.lookup code pairMap of

Nothing -> logErrorN ("Missing Code: " <> pack [c1, c2]) >> return prevMap

Just newChar -> do

let first = (c1, newChar)

second = (newChar, c2)

return $ addKey (addKey prevMap first count) second count

Rounding Up

Now we have our last task: finding the counts of the characters in the final string, and subtracting the minimum from the maximum. This requires us to first disassemble our mapping of pair counts into a mapping of individual character counts. This is another fold step. But just like before, we use nested calls to addKey on an occurrence map! See how countChars works below:

expandPolymerLong :: (MonadLogger m) => Int -> String -> PairMap -> m (Maybe Integer)

expandPolymerLong numSteps starterCode pairMap = do

let starterMap = buildInitialMap M.empty starterCode

finalOccMap <- foldM runStep starterMap [1..numSteps]

let finalCharCountMap = foldl countChars M.empty (M.toList finalOccMap)

...

where

countChars :: OccMapBig Char -> ((Char, Char), Integer) -> OccMapBig Char

countChars prevMap ((c1, c2), count) = addKey (addKey prevMap c1 count) c2 count

So we have a count of the characters in our final string...sort of. Recall that we added both characters of each pair, and every character except the first and last of the string belongs to two pairs. Thus the number we're getting is basically doubled! So we want to divide each value by 2, with the exception of the first and last characters in the string. If these are the same character, we have an edge case: its value is even, so we divide by 2 and then add an extra one. Otherwise, if a character has an odd value, it must be on the end, so we divide by two and round up. We sum up this logic with the quotRoundUp function, which we apply over our finalCharCountMap.

expandPolymerLong :: (MonadLogger m) => Int -> String -> PairMap -> m (Maybe Integer)
expandPolymerLong numSteps starterCode pairMap = do
  let starterMap = buildInitialMap M.empty starterCode
  finalOccMap <- foldM runStep starterMap [1..numSteps]
  let finalCharCountMap = foldl countChars M.empty (M.toList finalOccMap)
  let finalCounts = map quotRoundUp (M.toList finalCharCountMap)
  ...
  where
    quotRoundUp :: (Char, Integer) -> Integer
    quotRoundUp (c, i) = if even i
      then quot i 2 + if head starterCode == c && last starterCode == c then 1 else 0
      else quot i 2 + 1
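To convince ourselves the halving rule is right, it can help to try it on a tiny input by hand. The sketch below is a pure, standalone version of the same idea. It assumes a plain `Data.Map` instead of the occurrence map helpers, and `doubledCounts` and `trueCount` are illustrative names, not functions from the repository.

```haskell
import qualified Data.Map.Strict as M

-- Tally both characters of every pair, weighted by the pair's count.
-- Interior characters end up counted twice; each end character is
-- short by one.
doubledCounts :: M.Map (Char, Char) Integer -> M.Map Char Integer
doubledCounts pairs = M.fromListWith (+)
  [ (c, n) | ((c1, c2), n) <- M.toList pairs, c <- [c1, c2] ]

-- Undo the double counting, following the same branches as quotRoundUp.
trueCount :: String -> (Char, Integer) -> Integer
trueCount starter (c, i)
  | odd i     = quot i 2 + 1   -- character sits on exactly one end
  | bothEnds  = quot i 2 + 1   -- same character on both ends
  | otherwise = quot i 2       -- purely interior character
  where
    bothEnds = take 1 starter == [c] && take 1 (reverse starter) == [c]
```

With the pairs of `"NNCB"` (`NN`, `NC`, `CB`), the doubled tallies are N = 3, C = 2, B = 1, and the rule brings them back to the real counts N = 2, C = 1, B = 1. Since expansion only ever inserts characters between existing ones, the first and last characters of the starter string stay on the ends for every step, which is why checking against `starter` is enough.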

And finally, we consider the list of outcomes and take the maximum minus the minimum!

```haskell
expandPolymerLong :: (MonadLogger m) => Int -> String -> PairMap -> m (Maybe Integer)
expandPolymerLong numSteps starterCode pairMap = do
  let starterMap = buildInitialMap M.empty starterCode
  finalOccMap <- foldM runStep starterMap [1..numSteps]
  let finalCharCountMap = foldl countChars M.empty (M.toList finalOccMap)
  let finalCounts = map quotRoundUp (M.toList finalCharCountMap)
  if null finalCounts
    then logErrorN "Final Occurrence Map is empty!" >> return Nothing
    else return $ Just $ fromIntegral (maximum finalCounts - minimum finalCounts)
  where
    buildInitialMap = ...
    runStep = ...
    runExpand = ...
    countChars = ...
    quotRoundUp = ...
```

Last of all, we combine input parsing with solving the problem. Our "easy" and "hard" solutions look the same, just with different numbers of steps.

solveDay14Easy :: String -> IO (Maybe Integer)

solveDay14Easy fp = runStdoutLoggingT $ do

(starterCode, pairCodes) <- parseFile parseInput fp

expandPolymerLong 10 starterCode pairCodes

solveDay14Hard :: String -> IO (Maybe Integer)

solveDay14Hard fp = runStdoutLoggingT $ do

(starterCode, pairCodes) <- parseFile parseInput fp

expandPolymerLong 40 starterCode pairCodes

Conclusion

Hopefully that solution makes sense to you! In case I left anything out of my solution, you can peruse the code on GitHub. Later this week, we'll have a video walkthrough of this solution!

If you're enjoying this content, make sure to subscribe to our monthly newsletter, which will also give you access to our Subscriber Resources!

Dijkstra Video Walkthrough

Today I’m taking a really quick break from Advent of Code videos to do a video walkthrough of Dijkstra’s algorithm! I did several written walkthroughs of this algorithm a couple months ago:

However, I never did a video walkthrough on my YouTube channel, and a viewer specifically requested one, so here it is today!

If you enjoyed that video, make sure to subscribe to our monthly newsletter so you can stay up to date on our latest content! This will also give you access to our subscriber resources!

Octopus Energy - Video Walkthrough

Here’s another video walkthrough, which you can find here on YouTube. This is for the Day 11 problem. You can find a detailed written walkthrough here. The code is also available on GitHub.

There will be one non-Advent-of-Code video later this week, and then next week we’ll be back with more problem solving walkthroughs!

Seven Segment Display - Video Walkthrough

As promised, today I’m back on YouTube, releasing a video walkthrough of my solution to the Seven Segment Display problem that we went over last week in this detailed blog post.

Next week I’ll be following up with another video walkthrough, this time for Day 11 that we covered earlier this week! Make sure to subscribe if you’re enjoying this content!

Flashing Octopuses and BFS

Today we continue our new series on Advent of Code solutions from 2021. Last time we solved the seven-segment logic puzzle. Today, we'll look at the Day 11 problem which focuses a bit more on traditional coding structures and algorithms.

This will be another in-depth coding write-up. For the next week or so after this I'll switch to doing video reviews so you can compare the styles. I haven't been too exhaustive with listing imports in these examples though, so if you're curious about those you can take a look at the full solution here on GitHub. So now, let's get started!

Problem Statement

For this problem, we're dealing with a set of octopuses (Advent of Code had an aquatic theme last year). These octopuses have an "energy level" and "flash" when they reach their maximum energy level. They sit nicely in a 2D grid for us, and the puzzle input is just a grid of single-digit integers giving each octopus's initial energy level. Here's an example.

5483143223

2745854711

5264556173

6141336146

6357385478

4167524645

2176841721

6882881134

4846848554

5283751526

Now, as time goes by, their energy levels increase. With each step, all energy levels go up by one. So after a single step, the energy grid looks like this:

6594254334

3856965822

6375667284

7252447257

7468496589

5278635756

3287952832

7993992245

5957959665

6394862637

However, when an octopus reaches level 10, it flashes. This has two results for the next step. First, its own energy level always reverts to 0. Second, it increments the energy level of all neighbors as well. This, of course, can make things more complicated, because we can end up with a cascading series of flashes. Even an octopus that has a very low energy level at the start of a step can end up flashing. Here's an example.

Start:

11111

19891

18181

19891

11111

End:

34543

40004

50005

40004

34543

The 1 in the center still ends up flashing. Four of its neighbors are 9's, which all flash. The surrounding 8's then flash because each has two 9 neighbors. As a result, the 1 has 8 neighbors flashing. Combined with its own increment, it reaches 10, so it also flashes.

The good news is that all flashing octopuses revert to 0. They don't start counting again from other adjacent flashes so we can't get an infinite loop of flashing and we don't have to worry about the "order" of flashing.

For the first part of the problem, we have to find the total number of flashes after a certain number of steps. For the second part, we have to find the first step when all of the octopuses flash.

Solution Approach

There's nothing too difficult about the solution approach here. Incrementing the grid and finding the initial flashes are easy problems. The only tricky part is cascading the flashes. For this, we need a Breadth-First-Search where each item in the queue is a flash to resolve. As long as we're careful in our accounting and in the update step, we should be able to answer the questions fairly easily.

Utilities

As with last time, we'll start the coding portion with a few utilities that will (hopefully) end up being useful for other problems. The first of these is a simple one. We'll use a type synonym Coord2 to represent a 2D integer coordinate.

type Coord2 = (Int, Int)

Next, we'll want another general parsing function. Last time, we covered parseLinesFromFile, which took a general parser and applied it to every line of an input file. But we also might want to incorporate the "line-by-line" behavior into our general parser, so we'll add a function to parse the whole file given a single ParsecT expression. The structure is much the same, it just does even less work than our prior example.

parseFile :: (MonadIO m) => ParsecT Void Text m a -> FilePath -> m a

parseFile parser filepath = do

input <- pack <$> liftIO (readFile filepath)

result <- runParserT parser "Utils.hs" input

case result of

Left e -> error $ "Failed to parse: " ++ show e

Right x -> return x

Last of all, this problem deals with 2D grids and spreading out the "effect" of one square over all eight of its neighbors. So let's write a function to get all the adjacent coordinates of a tile. We'll call this getNeighbors8, and it will be very similar to a function getting neighbors in 4 directions that I used in this Dijkstra's algorithm implementation.

getNeighbors8 :: HashMap Coord2 a -> Coord2 -> [Coord2]

getNeighbors8 grid (row, col) = catMaybes

[maybeUp, maybeUpRight, maybeRight, maybeDownRight, maybeDown, maybeDownLeft, maybeLeft, maybeUpLeft]

where

(maxRow, maxCol) = maximum $ HM.keys grid

maybeUp = if row > 0 then Just (row - 1, col) else Nothing

maybeUpRight = if row > 0 && col < maxCol then Just (row - 1, col + 1) else Nothing

maybeRight = if col < maxCol then Just (row, col + 1) else Nothing

maybeDownRight = if row < maxRow && col < maxCol then Just (row + 1, col + 1) else Nothing

maybeDown = if row < maxRow then Just (row + 1, col) else Nothing

maybeDownLeft = if row < maxRow && col > 0 then Just (row + 1, col - 1) else Nothing

maybeLeft = if col > 0 then Just (row, col - 1) else Nothing

maybeUpLeft = if row > 0 && col > 0 then Just (row - 1, col - 1) else Nothing

This function could also apply to an Array instead of a Hash Map. In fact, it might be even more appropriate there. But below we'll get into the reasons for using a Hash Map.
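For comparison, here's a more compact way to express the same bounds checks. This is only a sketch of an alternative, not the utility from the repository: the name `neighbors8In` is hypothetical, and it assumes the caller passes the largest valid `(row, col)` directly instead of the grid.

```haskell
type Coord2 = (Int, Int)

-- Hypothetical variant of getNeighbors8 that takes the maximum valid
-- coordinate instead of the grid itself.
neighbors8In :: Coord2 -> Coord2 -> [Coord2]
neighbors8In (maxRow, maxCol) (row, col) =
  [ (r, c)
  | dr <- [-1, 0, 1]
  , dc <- [-1, 0, 1]
  , (dr, dc) /= (0, 0)          -- skip the tile itself
  , let r = row + dr
  , let c = col + dc
  , r >= 0, r <= maxRow         -- stay inside the grid vertically
  , c >= 0, c <= maxCol         -- and horizontally
  ]
```

One trade-off worth noting: the neighbors come back in row-major order rather than the clockwise order above, which doesn't matter for this problem. Passing the bounds in also avoids recomputing `maximum $ HM.keys grid`, which walks every key on each call.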

Parsing the Input

Now, let's get to the first step of the problem itself, which is to parse the input. In this case, the input is simply a 2D array of single-digit integers, so this is a fairly straightforward process. In fact, I figured this whole function could be re-used as well, so it could also be considered a utility.

The first step is to parse a line of integers. Since there are no spaces and no separators, this is very simple using some.

import Data.Char (digitToInt)

import Text.Megaparsec (some)

parseDigitLine :: ParsecT Void Text m [Int]

parseDigitLine = fmap digitToInt <$> some digitChar

Now getting a repeated set of these "integer lists" over a series of lines uses the same trick we saw last time. We use sepEndBy1 combined with the eol parser for end-of-line.

parse2DDigits :: (Monad m) => ParsecT Void Text m [[Int]]

parse2DDigits = sepEndBy1 parseDigitLine eol

However, we want to go one step further. A list-of-lists-of-ints is a cumbersome data structure. We can't really update it efficiently. Nor, in fact, can we even access 2D indices quickly. There are two good structures for us to use, depending on the problem. We can either use a 2D array, or a HashMap where the keys are 2D coordinates.

Because we'll be updating the structure itself, we want a Hash Map in this case. Haskell's Array structure has no good way to update its values without a full copy. If the structure were read only though, Array would be the better choice. For our current problem, the mutable array pattern would also be an option. But for now I'll keep things simpler.

So we need a function to convert nested integer lists into a Hash Map with coordinates. The first step in this process is to match each list of integers with a row number, and each integer within the list with its column number. Infinite lists, ranges and zip are excellent tools here!

hashMapFromNestedLists :: [[Int]] -> HashMap Coord2 Int

hashMapFromNestedLists inputs = ...

where

x = zip [0,1..] (map (zip [0,1..]) inputs)

Now in most languages, we would use a nested for-loop. The outer structure would cover the rows, the inner structure would cover the columns. In Haskell, we'll instead do a 2-level fold. The outer layer (the function f) will cover the rows. The inner layer (function g) will cover the columns. Each step updates the Hash Map appropriately.

hashMapFromNestedLists :: [[Int]] -> HashMap Coord2 Int

hashMapFromNestedLists inputs = foldl f HM.empty x

where

x = zip [0,1..] (map (zip [0,1..]) inputs)

f :: HashMap Coord2 Int -> (Int, [(Int, Int)]) -> HashMap Coord2 Int

f prevMap (row, pairs) = foldl (g row) prevMap pairs

g :: Int -> HashMap Coord2 Int -> Coord2 -> HashMap Coord2 Int

g row prevMap (col, val) = HM.insert (row, col) val prevMap

And now we can pull it all together and parse our input!

solveDay11Easy :: String -> IO (Maybe Int)

solveDay11Easy fp = do

initialState <- parseFile parse2DDigitHashMap fp

...

solveDay11Hard :: String -> IO (Maybe Int)

solveDay11Hard fp = do

initialState <- parseFile parse2DDigitHashMap fp

...

Basic Step Running

Now let's get to the core of the algorithm. The function we really need to get right here is a function to update a single step of the process. This will take our grid as an input and produce the new grid as an output, as well as some extra information. Let's start by making another type synonym for OGrid as the "Octopus grid".

type OGrid = HashMap Coord2 Int

Now a simple version of this function would have a type signature like this:

runStep :: (MonadLogger m) => OGrid -> m OGrid

(As mentioned last time, I'm defaulting to using MonadLogger for most implementation details.)

However, we'll include two extra outputs for this function. First, we want an Int for the number of flashes that occurred on this step. This will help us with the first part of the problem, where we are summing the number of flashes given a certain number of steps.

Second, we want a Bool indicating that all of them have flashed. This is easy to derive from the number of flashes and will be our terminal condition flag for the second part of the problem.

runStep :: (MonadLogger m) => OGrid -> m (OGrid, Int, Bool)

Now the first thing we can do while stepping is to increment everything. Once we've done that, it is easy to pick out the coordinates that ought to be our "initial flashes" - all the items where the value is at least 10.

runStep :: (MonadLogger m) => OGrid -> m (OGrid, Int, Bool)

runStep inputGrid = ...

where

-- Start by incrementing everything

incrementedGrid = (+1) <$> inputGrid

initialFlashes = fst <$> filter (\(_, x) -> x >= 10) (HM.toList incrementedGrid)

Now what do we do with our initial flashes to propagate them? Let's defer this to a helper function, processFlashes. This will be where we perform the BFS step recursively. Using BFS requires a queue and a visited set, so we'll want these as arguments to our processing function. Its result will be the final grid, updated with all the incrementing done by the flashes, as well as the final set of all flashes, including the original ones.

processFlashes :: (MonadLogger m) =>

HashSet Coord2 -> Seq Coord2 -> OGrid -> m (HashSet Coord2, OGrid)

In calling this from our runStep function, we'll prepopulate the visited set and the queue with the initial group of flashes, as well as passing the "incremented" grid.

runStep :: (MonadLogger m) => OGrid -> m (OGrid, Int, Bool)

runStep inputGrid = do

(allFlashes, newGrid) <- processFlashes (HS.fromList initialFlashes) (Seq.fromList initialFlashes) incrementedGrid

...

where

-- Start by incrementing everything

incrementedGrid = (+1) <$> inputGrid

initialFlashes = fst <$> filter (\(_, x) -> x >= 10) (HM.toList incrementedGrid)

Now the last thing we need to do is count the total number of flashes and reset all flashed coordinates to 0 before returning. We can also compare the number of flashes to the size of the hash map to see if they all flashed.

runStep :: (MonadLogger m) => OGrid -> m (OGrid, Int, Bool)

runStep inputGrid = do

(allFlashes, newGrid) <- processFlashes (HS.fromList initialFlashes) (Seq.fromList initialFlashes) incrementedGrid

let numFlashes = HS.size allFlashes

let finalGrid = foldl (\g c -> HM.insert c 0 g) newGrid allFlashes

return (finalGrid, numFlashes, numFlashes == HM.size inputGrid)

where

-- Start by incrementing everything

incrementedGrid = (+1) <$> inputGrid

initialFlashes = fst <$> filter (\(_, x) -> x >= 10) (HM.toList incrementedGrid)

Processing Flashes

So now we need to do this flash processing! To reiterate, this is a BFS problem. We have a queue of coordinates that are flashing. To process a single flash, we increment each of its neighbors and, if incrementing pushes a neighbor's energy over 9, we add that neighbor to the back of the queue to be processed.

So our inputs are the sequence of coordinates to flash, the current grid, and a set of coordinates we've already visited (since we want to avoid "re-flashing" anything).

processFlashes :: (MonadLogger m) =>

HashSet Coord2 -> Seq Coord2 -> OGrid -> m (HashSet Coord2, OGrid)

We'll start with a base case. If the queue is empty, we'll return the input grid and the current visited set.

import qualified Data.Sequence as Seq

import qualified Data.HashSet as HS

import qualified Data.HashMap.Strict as HM

processFlashes :: (MonadLogger m) =>

HashSet Coord2 -> Seq Coord2 -> OGrid -> m (HashSet Coord2, OGrid)

processFlashes visited queue grid = case Seq.viewl queue of

Seq.EmptyL -> return (visited, grid)

...

Now suppose we have a non-empty queue and we can pull off the top element. We'll start by getting all 8 neighboring coordinates in the grid and incrementing their values. There's no harm in re-incrementing coordinates that have flashed already, because we'll reset every flashed coordinate to 0 at the end of the step.

processFlashes :: (MonadLogger m) =>

HashSet Coord2 -> Seq Coord2 -> OGrid -> m (HashSet Coord2, OGrid)

processFlashes visited queue grid = case Seq.viewl queue of

Seq.EmptyL -> return (visited, grid)

top Seq.:< rest -> do

-- Get the 8 adjacent coordinates in the 2D grid

let allNeighbors = getNeighbors8 grid top

-- Increment the value of all neighbors

newGrid = foldl (\g c -> HM.insert c ((g HM.! c) + 1) g) grid allNeighbors

...

Then we want to filter this neighbors list down to the neighbors we'll add to the queue. So we'll make a predicate shouldAdd that tells us if a neighboring coordinate a.) is at energy level 9 or higher before this increment (so incrementing it causes a flash) and b.) has not yet been visited. This lets us construct our new visited set and the final queue.

processFlashes :: (MonadLogger m) =>

HashSet Coord2 -> Seq Coord2 -> OGrid -> m (HashSet Coord2, OGrid)

processFlashes visited queue grid = case Seq.viewl queue of

Seq.EmptyL -> return (visited, grid)

top Seq.:< rest -> do

let allNeighbors = getNeighbors8 grid top

newGrid = foldl (\g c -> HM.insert c ((g HM.! c) + 1) g) grid allNeighbors

neighborsToAdd = filter shouldAdd allNeighbors

newVisited = foldl (flip HS.insert) visited neighborsToAdd

newQueue = foldl (Seq.|>) rest neighborsToAdd

...

where

shouldAdd :: Coord2 -> Bool

shouldAdd coord = grid HM.! coord >= 9 && not (HS.member coord visited)

And, the cherry on top, we just have to make our recursive call with the new values.

processFlashes :: (MonadLogger m) =>

HashSet Coord2 -> Seq Coord2 -> OGrid -> m (HashSet Coord2, OGrid)

processFlashes visited queue grid = case Seq.viewl queue of

Seq.EmptyL -> return (visited, grid)

top Seq.:< rest -> do

let allNeighbors = getNeighbors8 grid top

newGrid = foldl (\g c -> HM.insert c ((g HM.! c) + 1) g) grid allNeighbors

neighborsToAdd = filter shouldAdd allNeighbors

newVisited = foldl (flip HS.insert) visited neighborsToAdd

newQueue = foldl (Seq.|>) rest neighborsToAdd

processFlashes newVisited newQueue newGrid

where

shouldAdd :: Coord2 -> Bool

shouldAdd coord = grid HM.! coord >= 9 && not (HS.member coord visited)

With processing done, we have completed our function for running a single step.
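If you want to check the step logic against the small 5x5 example from the problem statement, a pure version is handy. The sketch below follows the same increment-then-BFS idea, but it swaps in `Data.Map`, `Data.Set` and a plain list as the queue so that it runs with only the libraries that ship with GHC, and it drops the logging monad. All the names here (`Grid`, `parseGrid`, `cascade`, `stepGrid`) are illustrative assumptions, not the ones from the repository.

```haskell
import Data.Char (digitToInt)
import qualified Data.Map.Strict as M
import qualified Data.Set as S

type Coord2 = (Int, Int)
type Grid = M.Map Coord2 Int

-- Build a coordinate-keyed grid from rows of digit characters.
parseGrid :: [String] -> Grid
parseGrid rows = M.fromList
  [ ((r, c), digitToInt ch)
  | (r, row) <- zip [0 ..] rows
  , (c, ch) <- zip [0 ..] row
  ]

-- All in-bounds neighbors in the 8 surrounding directions.
neighbors :: Grid -> Coord2 -> [Coord2]
neighbors g (r, c) =
  [ p
  | dr <- [-1, 0, 1], dc <- [-1, 0, 1], (dr, dc) /= (0, 0)
  , let p = (r + dr, c + dc)
  , M.member p g
  ]

-- Resolve queued flashes breadth-first. The list acts as a FIFO queue;
-- appending with (++) is fine for a sketch but slower than Seq.
cascade :: S.Set Coord2 -> [Coord2] -> Grid -> (S.Set Coord2, Grid)
cascade visited [] g = (visited, g)
cascade visited (top : rest) g = cascade visited' (rest ++ new) g'
  where
    ns = neighbors g top
    g' = foldr (M.adjust (+ 1)) g ns
    -- A neighbor flashes if it was at 9 or more before this increment
    -- and has not been queued already.
    new = [n | n <- ns, g M.! n >= 9, not (S.member n visited)]
    visited' = foldr S.insert visited new

-- One full step: increment, cascade, reset flashed cells to 0.
stepGrid :: Grid -> (Grid, Int)
stepGrid g0 = (M.union (M.fromSet (const 0) flashed) g2, S.size flashed)
  where
    g1 = M.map (+ 1) g0
    initial = M.keys (M.filter (>= 10) g1)
    (flashed, g2) = cascade (S.fromList initial) initial g1
```

Running `stepGrid` on the "Start" grid from the problem statement should reproduce the "End" grid, with all nine inner octopuses flashing.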

Easy Solution

Now that we can run a single step, all that's left is to answer the questions! For the first (easy) part, we just want to count the number of flashes that occur over 100 steps. This will follow a basic recursion pattern, where one of the arguments tells us how many steps are left. The stateful values that we're recursing on are the grid itself, which updates each step, and the sum of the number of flashes.

runStepCount :: (MonadLogger m) => Int -> (OGrid, Int) -> m (OGrid, Int)

Let's start with a base case. When we have 0 steps left, we return the inputs as the result.

runStepCount :: (MonadLogger m) => Int -> (OGrid, Int) -> m (OGrid, Int)

runStepCount 0 results = return results

...

The recursive case is also quite easy. We invoke runStep to get the updated grid and the number of flashes, and then recurse with a reduced step count, adding the new flashes to our previous sum.

runStepCount :: (MonadLogger m) => Int -> (OGrid, Int) -> m (OGrid, Int)

runStepCount 0 results = return results

runStepCount i (grid, prevFlashes) = do

(newGrid, flashCount, _) <- runStep grid

runStepCount (i - 1) (newGrid, flashCount + prevFlashes)

And then we can call this from our "easy" entrypoint:

solveDay11Easy :: String -> IO (Maybe Int)

solveDay11Easy fp = do

initialState <- parseFile parse2DDigitHashMap fp

(_, numFlashes) <- runStdoutLoggingT $ runStepCount 100 (initialState, 0)

return $ Just numFlashes

Hard Solution

For the second part of the problem, we want to find the first step where *all octopuses flash*. Obviously once they synchronize the first time, they'll remain synchronized forever after that. So we'll write a slightly different recursive function, this time counting up instead of down.

runTillAllFlash :: (MonadLogger m) => OGrid -> Int -> m Int

runTillAllFlash inputGrid thisStep = ...

Each time we run this function, we'll call runStep. The terminal condition is when the Bool flag we get from runStep becomes true. In this case, we return the current step value.

runTillAllFlash :: (MonadLogger m) => OGrid -> Int -> m Int

runTillAllFlash inputGrid thisStep = do

(newGrid, _, allFlashed) <- runStep inputGrid

if allFlashed

then return thisStep

...

Otherwise, we're just going to recurse, except with an incremented step count.

runTillAllFlash :: (MonadLogger m) => OGrid -> Int -> m Int

runTillAllFlash inputGrid thisStep = do

(newGrid, _, allFlashed) <- runStep inputGrid

if allFlashed

then return thisStep

else runTillAllFlash newGrid (thisStep + 1)

And once again, we wrap up by calling this function from our "hard" entrypoint.

solveDay11Hard :: String -> IO (Maybe Int)

solveDay11Hard fp = do

initialState <- parseFile parse2DDigitHashMap fp

firstAllFlash <- runStdoutLoggingT $ runTillAllFlash initialState 1

return $ Just firstAllFlash

And now we're done! Our program should be able to solve both parts of the problem!
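As an aside, both drivers can also be phrased over a lazy list of per-step flash counts instead of explicit recursion. This is just a sketch of that alternative, under the assumption that the step function is pure and returns the new grid paired with its flash count (unlike runStep above, which returns a triple inside a logging monad). The names `flashCounts`, `totalFlashes` and `firstAllFlash` are made up for illustration.

```haskell
-- Lazy stream of per-step flash counts, given any single-step function.
flashCounts :: (g -> (g, Int)) -> g -> [Int]
flashCounts step g = let (g', n) = step g in n : flashCounts step g'

-- Part 1: total flashes over the first n steps.
totalFlashes :: (g -> (g, Int)) -> Int -> g -> Int
totalFlashes step n = sum . take n . flashCounts step

-- Part 2: 1-based number of the first step where every cell flashes.
-- Like the recursive version, this never returns if that never happens.
firstAllFlash :: (g -> (g, Int)) -> Int -> g -> Int
firstAllFlash step gridSize =
  (+ 1) . length . takeWhile (/= gridSize) . flashCounts step
```

The recursive versions in this article are easier to extend with logging, so treat this mostly as a different way of looking at the same two loops.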

Conclusion

For the next couple articles, I'll be walking through these same problems, except in video format! So stay tuned for that, and make sure you're subscribed to the YouTube channel so you get notifications about it!

And if you're interested in staying up to date with all the latest news on Monday Morning Haskell, make sure to subscribe to our mailing list. This will get you our monthly newsletter, access to our resources page, and you'll also get special offers on all of our video courses!

Advent of Code: Seven Segment Logic Puzzle

We're into the last quarter of the year, and this means Advent of Code is coming up again in a couple months! I'm hoping to do a lot of these problems in Haskell again and this time do up-to-date recaps. To prepare for this, I'm going back through my solutions from last year and trying to update them and come up with common helpers and patterns that will be useful this year.

You can follow me doing these implementation reviews on my stream, and you can take a look at my code on GitHub here!

Most of my blog posts for the next few weeks will recap some of these problems. I'll do written summaries of solutions as well as video summaries to see which are more clear. The written summaries will use the In-Depth Coding style, so get ready for a lot of code! As a final note, you'll notice my frequent use of MonadLogger, as I covered in this article. So let's get started!

Problem Statement

I'm going to start with Day 8 from last year, which I found to be an interesting problem because it was more of a logic puzzle than a traditional programming problem. The problem starts with the general concept of a seven segment display, a way of showing numbers on an electronic display (like scoreboards, for example).

We can label each of the seven segments like so, with letters "a" through "g":

Segments a-g:

 aaaa
b    c
b    c
 dddd
e    f
e    f
 gggg

If all seven segments are lit up, this indicates an 8. If only "c" and "f" are lit up, that's 1, and so on.

The puzzle input consists of lines with 10 "code" strings, and 4 "output" strings, separated by a pipe delimiter:

be cfbegad cbdgef fgaecd cgeb fdcge agebfd fecdb fabcd edb | fdgacbe cefdb cefbgd gcbe

edbfga begcd cbg gc gcadebf fbgde acbgfd abcde gfcbed gfec | fcgedb cgb dgebacf gc

The 10 code strings show a "re-wiring" of the seven segment display. On the first line, we see that be is present as a code string. Since only a "one" has length 2, we know that "b" and "e" each refer either to the "c" or "f" segment, since only those segments are lit up for "one". We can use similar lines of logic to fully determine the mapping of code characters to the original segment display.

Once we have this, we can decode each output string on the right side, get a four-digit number, and then add all of these up.

Solution Approach

When I first solved this problem over a year ago, I went through the effort of deriving a general function to decode any string based on the input codes, and then used this function

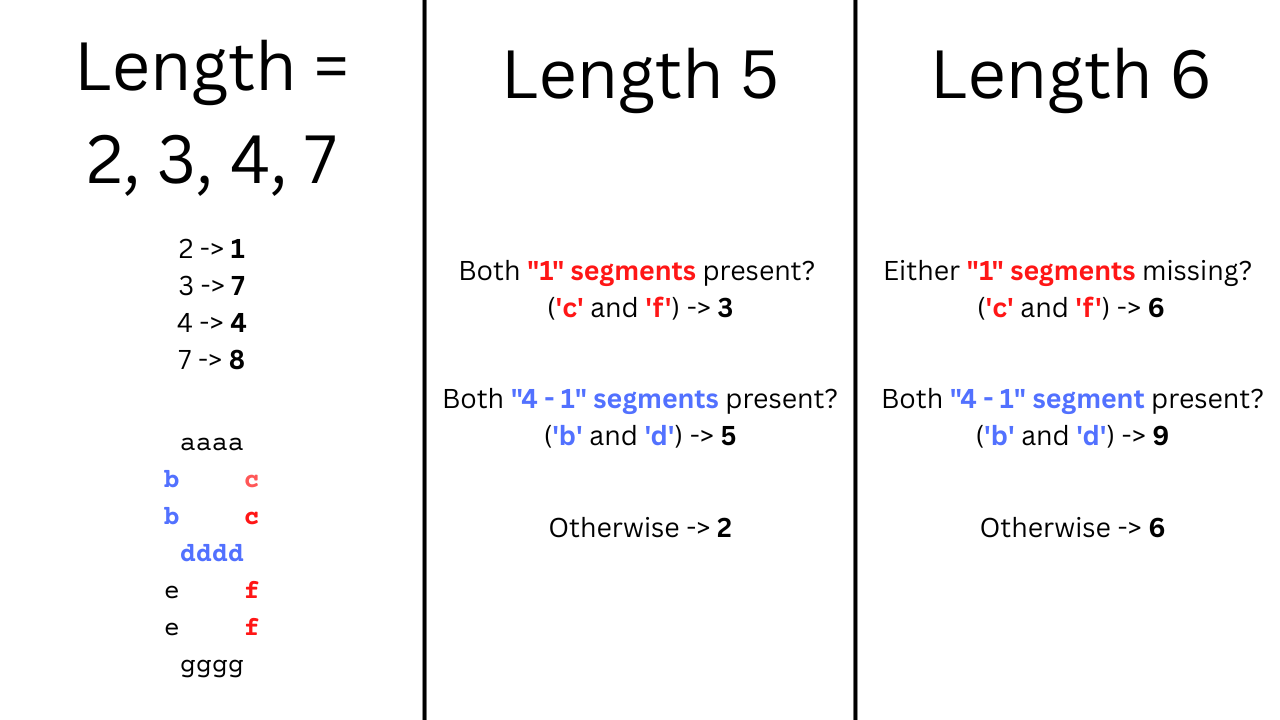

However, upon revisiting the problem, I realized it's quite a bit simpler. The length of the output to decode is obviously the first big branching point (as we'll see, "part 1" of the problem clues you on to this). Four of the numbers have unique lengths of "on" segments:

- 2 Segments = 1

- 3 Segments = 7

- 4 Segments = 4

- 7 Segments = 8

Then, three possible numbers have 5 "on" segments (2, 3, and 5). The remaining three (0, 6, 9) use six segments.

However, when it comes to solving these more ambiguous numbers, the key still lies with the digits 1 and 4, because we can always find the codes referring to these by their length. So we can figure out which two code characters are on the right side (referring to the c and f segments) and which two characters refer to "four minus one", so segments b and d. We don't immediately know which is which in either pair, but it doesn't matter!

Between our "length 5" outputs (2, 3, 5), only 3 contains both segments from "one". So if that isn't true, we can then look at the "four minus one" segments (b and d), and if both are present, it's a 5, otherwise it's a 2.

We can employ similar logic for the length-6 possibilities. If either "one" segment is missing, it must be 6. Then if both "four minus one" segments are present, the answer is 9. Otherwise it is 0.
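The branches described above can be sketched as one small pure function. This is a rough illustration of the decision logic only, not the implementation from the walkthrough: the name `decodeDigit` and its argument order are assumptions, and it expects the caller to have already picked out the scrambled codes for "one" and "four" by their lengths.

```haskell
import Data.List ((\\))

-- Decode one output string, given the scrambled codes for "1" and "4".
-- Returns Nothing for a length that no digit uses.
decodeDigit :: String -> String -> String -> Maybe Int
decodeDigit one four output = case length output of
  2 -> Just 1
  3 -> Just 7
  4 -> Just 4
  7 -> Just 8
  5 | hasAll one -> Just 3            -- only 3 lights both "one" segments
    | hasAll fourMinusOne -> Just 5   -- 5 lights both b and d
    | otherwise -> Just 2
  6 | not (hasAll one) -> Just 6      -- 6 is missing a "one" segment
    | hasAll fourMinusOne -> Just 9   -- 9 lights both b and d
    | otherwise -> Just 0
  _ -> Nothing
  where
    -- The two characters standing for segments b and d.
    fourMinusOne = four \\ one
    hasAll segs = all (`elem` output) segs
```

Note that the function never needs to work out which character is which within either pair, which is exactly the point made above.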

If this logic doesn't make sense in paragraphs, here's a picture that captures the essential branches of the logic.

So how do we turn this solution into code?

Utilities

First, let's start with a couple utility functions. These functions capture patterns that are useful across many different problems. The first of these is countWhere. This is a small helper whenever we have a list of items and we want the number of items that fulfill a certain predicate. This is a simple matter of filtering on the predicate and taking the length.

countWhere :: (a -> Bool) -> [a] -> Int

countWhere predicate list = length $ filter predicate list

Next we'll have a flexible parsing function. In general, I've been trying to use Megaparsec to parse the problem inputs (though it's often easier to parse them by hand). You can read this series to learn more about parsing in Haskell, and this part specifically for megaparsec.

But a good general helper we can have is "given a file where each line has a specific format, parse the file into a list of outputs." I refer to this function as parseLinesFromFile.

parseLinesFromFile :: (MonadIO m) => ParsecT Void Text m a -> FilePath -> m [a]

parseLinesFromFile parser filepath = do

input <- pack <$> liftIO (readFile filepath)

result <- runParserT (sepEndBy1 parser eol) "Utils.hs" input

case result of

Left e -> error $ "Failed to parse: " ++ show e

Right x -> return x

Two key observations about this function. We take the parser as an input (this type is ParsecT Void Text m a). Then we apply it line-by-line using the flexible combinator sepEndBy1 and the eol parser for "end of line". The combinator means we parse one or more instances of the parser that are separated and optionally ended by the second parser. So each instance (except perhaps the last) of the input parser is followed by an "end of line" sequence (a newline, or a carriage return followed by a newline).
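To see the "separated and optionally ended" behavior in isolation, here's a tiny experiment. It deliberately uses `Text.ParserCombinators.ReadP` from base rather than Megaparsec so that it runs without extra packages. ReadP has no `sepEndBy1`, so the sketch emulates it with `sepBy1` plus an optional trailing separator. The names `linesOf`, `parseAll` and `number` are made up for this example.

```haskell
import Data.Char (isDigit)
import Text.ParserCombinators.ReadP

-- One or more items separated by newlines, with an optional trailing
-- newline, and nothing left over afterwards.
linesOf :: ReadP a -> ReadP [a]
linesOf item = sepBy1 item newline <* optional newline <* eof
  where
    newline = char '\n'

-- Run a parser and keep the first result that consumed the whole input.
parseAll :: ReadP a -> String -> Maybe a
parseAll p input = case [x | (x, "") <- readP_to_S p input] of
  (x : _) -> Just x
  [] -> Nothing

-- A line consisting of a single non-negative integer.
number :: ReadP Int
number = read <$> munch1 isDigit
```

Under these assumptions, `parseAll (linesOf number)` accepts the same three numbers whether or not the input ends with a newline, but rejects an empty input or a blank line in the middle. That mirrors why parseLinesFromFile works on files with or without a final newline.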

Parsing the Lines

Now when it comes to the specific problem solution, we always have to start by parsing the input from a file (at least that's how I prefer to do it). The first step of parsing is to determine what we're parsing into. What is the "output type" of parsing the data?

In this case, each line we parse consists of 10 "code" strings and 4 "output" strings. So we can make two types to hold each of these parts - InputCode and OutputCode.

data InputCode = InputCode
  { screen0 :: String
  , screen1 :: String
  , screen2 :: String
  , screen3 :: String
  , screen4 :: String
  , screen5 :: String
  , screen6 :: String
  , screen7 :: String
  , screen8 :: String
  , screen9 :: String
  } deriving (Show)

data OutputCode = OutputCode
  { output1 :: String
  , output2 :: String
  , output3 :: String
  , output4 :: String
  } deriving (Show)

Now each different code string can be captured by the parser some letterChar. If we wanted to be more specific, we could even do something like:

choice [char 'a', char 'b', char 'c', char 'd', char 'e', char 'f', char 'g']

Now for each group of strings, we'll parse them using the same sepEndBy1 combinator we used before. This time, the separator is hspace, covering horizontal space characters (including tabs, but not newlines). Between the two groups, we use string "| " to parse the bar that divides the line. So here's the start of our parser:

parseInputLine :: (MonadLogger m) => ParsecT Void Text m (Maybe (InputCode, OutputCode))
parseInputLine = do
  screenCodes <- sepEndBy1 (some letterChar) hspace
  string "| "
  outputCodes <- sepEndBy1 (some letterChar) hspace
  ...

Both screenCodes and outputCodes are lists, and we want to convert them into our output types. So first, we do some validation and ensure that the right number of strings are in each list. Then we can pattern match and group them properly. Invalid results give Nothing.

parseInputLine :: (MonadLogger m) => ParsecT Void Text m (Maybe (InputCode, OutputCode))
parseInputLine = do
  screenCodes <- sepEndBy1 (some letterChar) hspace
  string "| "
  outputCodes <- sepEndBy1 (some letterChar) hspace
  if length screenCodes /= 10
    then lift (logErrorN $ "Didn't find 10 screen codes: " <> intercalate ", " (pack <$> screenCodes)) >> return Nothing
    else if length outputCodes /= 4
      then lift (logErrorN $ "Didn't find 4 output codes: " <> intercalate ", " (pack <$> outputCodes)) >> return Nothing
      else
        let [s0, s1, s2, s3, s4, s5, s6, s7, s8, s9] = screenCodes
            [o1, o2, o3, o4] = outputCodes
        in return $ Just (InputCode s0 s1 s2 s3 s4 s5 s6 s7 s8 s9, OutputCode o1 o2 o3 o4)

Then we can parse the codes using parseLinesFromFile, applying this in both the "easy" part and the "hard" part of the problem.

solveDay8Easy :: String -> IO (Maybe Int)
solveDay8Easy fp = runStdoutLoggingT $ do
  codes <- catMaybes <$> parseLinesFromFile parseInputLine fp
  ...

solveDay8Hard :: String -> IO (Maybe Int)
solveDay8Hard fp = runStdoutLoggingT $ do
  inputCodes <- catMaybes <$> parseLinesFromFile parseInputLine fp
  ...

The First Part

Now to complete the "easy" part of the problem, we have to answer the question: "In the output values, how many times do digits 1, 4, 7, or 8 appear?". As we've discussed, each of these has a unique length. So it's easy to first describe a function that can tell from an output string if it is one of these items:

isUniqueDigitCode :: String -> Bool
isUniqueDigitCode input = length input `elem` [2, 3, 4, 7]

Then we can use our countWhere utility to apply this function and figure out how many of these numbers are in each output code.

uniqueOutputs :: OutputCode -> Int
uniqueOutputs (OutputCode o1 o2 o3 o4) = countWhere isUniqueDigitCode [o1, o2, o3, o4]

Finally, we take the sum of these, applied across the outputs, to get our first answer:

solveDay8Easy :: String -> IO (Maybe Int)
solveDay8Easy fp = runStdoutLoggingT $ do
  codes <- catMaybes <$> parseLinesFromFile parseInputLine fp
  let result = sum $ uniqueOutputs <$> (snd <$> codes)
  return $ Just result

The Second Part

Now for the hard part! We have to decode each digit in the output, determine its value, and then get the value on the 4-digit display. The root of this is to decode a single string, given the InputCode of 10 values. So let's write a function that does that. We'll use MaybeT since there are some failure conditions on this function.

decodeString :: (MonadLogger m) => InputCode -> String -> MaybeT m Int

As we've discussed, the logic is easy for certain lengths. If the string length is 2, 3, 4 or 7, we have obvious answers.

decodeString :: (MonadLogger m) => InputCode -> String -> MaybeT m Int
decodeString inputCodes output
  | length output == 2 = return 1
  | length output == 3 = return 7
  | length output == 4 = return 4
  | length output == 7 = return 8
  ...

Now for length 5 and 6, we'll have separate functions:

decode5 :: (MonadLogger m) => InputCode -> String -> MaybeT m Int
decode6 :: (MonadLogger m) => InputCode -> String -> MaybeT m Int

Then we can call these from our base function:

decodeString :: (MonadLogger m) => InputCode -> String -> MaybeT m Int
decodeString inputCodes output
  | length output == 2 = return 1
  | length output == 3 = return 7
  | length output == 4 = return 4
  | length output == 7 = return 8
  | length output == 5 = decode5 inputCodes output
  | length output == 6 = decode6 inputCodes output
  | otherwise = mzero

We have a failure case of mzero if the length doesn't fall within our expectations for some reason.
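To see what that mzero failure case does in practice, here's a simplified sketch. It assumes plain Maybe instead of MaybeT, with no logging, and handles only the four unambiguous lengths. The name decodeByLength is hypothetical and isn't part of the actual solution.

```haskell
import Control.Monad (mzero)

-- Hypothetical, stripped-down version of the length dispatch:
-- plain Maybe, and only the lengths that identify a digit directly.
decodeByLength :: String -> Maybe Int
decodeByLength output
  | length output == 2 = return 1
  | length output == 3 = return 7
  | length output == 4 = return 4
  | length output == 7 = return 8
  | otherwise = mzero  -- for Maybe, mzero is Nothing

main :: IO ()
main = do
  print (decodeByLength "ab")                        -- Just 1
  print (decodeByLength "")                          -- Nothing
  print (mapM decodeByLength ["ab", "dab", "eafb"])  -- Just [1,7,4]
  print (mapM decodeByLength ["ab", "", "eafb"])     -- Nothing
```

The last line is worth noticing: when we traverse a list with mapM, a single failure makes the whole result Nothing. The same short-circuiting applies to MaybeT.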

Now before we can write decode5 and decode6, we'll write a helper function. This helper will determine the two characters present in the "one" segment as well as the two characters present in the "four minus one" segment.
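To make the "four minus one" idea concrete, here's a minimal sketch on one illustrative entry. It assumes the patterns arrive as a plain list of strings rather than an InputCode, uses the list difference operator (\\) instead of two calls to delete, and only validates the two lengths it needs. The name oneAndFourMinusOne is hypothetical.

```haskell
import Data.List (sortOn, (\\))

-- Hypothetical helper: from the ten scrambled patterns, pick out the
-- "one" pattern (length 2) and what remains of "four" (length 4)
-- after removing the "one" characters.
oneAndFourMinusOne :: [String] -> Maybe (String, String)
oneAndFourMinusOne patterns =
  case sortOn length patterns of
    (one : _seven : four : _)
      | length one == 2 && length four == 4 -> Just (one, four \\ one)
    _ -> Nothing

main :: IO ()
main =
  print (oneAndFourMinusOne
    (words "acedgfb cdfbe gcdfa fbcad dab cefabd cdfgeb eafb cagedb ab"))
  -- Just ("ab","ef")
```

Here the "one" pattern is "ab" and the "four" pattern is "eafb", so the two leftover characters are "ef". Those two characters are what will let us tell apart the digits with five and six segments.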

For some reason I separated the two Chars for the "one" segment but kept them together for "four minus one". This probably isn't necessary. But anyways, here's our type signature:

sortInputCodes :: (MonadLogger m) => InputCode -> MaybeT m (Char, Char, String)
sortInputCodes ic@(InputCode c0 c1 c2 c3 c4 c5 c6 c7 c8 c9) = do
  ...

Let's start with some more validation. We'll sort the strings by length and ensure the length distributions are correct.

sortInputCodes :: (MonadLogger m) => InputCode -> MaybeT m (Char, Char, String)
sortInputCodes ic@(InputCode c0 c1 c2 c3 c4 c5 c6 c7 c8 c9) = do
  ...
  where
    [sc0, sc1, sc2, sc3, sc4, sc5, sc6, sc7, sc8, sc9] = sortOn length [c0, c1, c2, c3, c4, c5, c6, c7, c8, c9]
    validLengths =
      length sc0 == 2 && length sc1 == 3 && length sc2 == 4 &&
      length sc3 == 5 && length sc4 == 5 && length sc5 == 5 &&
      length sc6 == 6 && length sc7 == 6 && length sc8 == 6 &&
      length sc9 == 7

If the lengths aren't valid, we'll return mzero as a failure case again. But if they are, we'll pattern match to identify our characters for "one" and the string for "four". By deleting the "one" characters, we'll get a string for "four minus one". Then we can return all our items:

sortInputCodes :: (MonadLogger m) => InputCode -> MaybeT m (Char, Char, String)
sortInputCodes ic@(InputCode c0 c1 c2 c3 c4 c5 c6 c7 c8 c9) = do
  if not validLengths
    then logErrorN ("Invalid inputs: " <> (pack . show $ ic)) >> mzero
    else do
      let [sc01, sc02] = sc0
      let fourMinusOne = delete sc02 (delete sc01 sc2)
      return (sc01, sc02, fourMinusOne)
  where
    [sc0, sc1, sc2, sc3, sc4, sc5, sc6, sc7, sc8, sc9] = sortOn length [c0, c1, c2, c3, c4, c5, c6, c7, c8, c9]
    validLengths =
      length sc0 == 2 && length sc1 == 3 && length sc2 == 4 &&
      length sc3 == 5 && length sc4 == 5 && length sc5 == 5 &&
      length sc6 == 6 && length sc7 == 6 && length sc8 == 6 &&
      length sc9 == 7

Length 5 Logic

Now we're ready to decode a string of length 5! We start by sorting the inputs, which gives us the two "one" characters and the "four minus one" string:

decode5 :: (MonadLogger m) => InputCode -> String -> MaybeT m Int
decode5 ic output = do
  (c01, c02, fourMinusOne) <- sortInputCodes ic
  ...

First, if both of the "one" characters are present in the output, we get 3.

decode5 :: (MonadLogger m) => InputCode -> String -> MaybeT m Int
decode5 ic output = do
  (c01, c02, fourMinusOne) <- sortInputCodes ic
  -- If both from c0 are present, it's a 3
  if c01 `elem` output && c02 `elem` output
    then return 3
    else ...

Then if "four minus one" shares both its characters with the output, the answer is 5, otherwise it is 2.

decode5 :: (MonadLogger m) => InputCode -> String -> MaybeT m Int
decode5 ic output = do
  (c01, c02, fourMinusOne) <- sortInputCodes ic
  -- If both from c0 are present, it's a 3
  if c01 `elem` output && c02 `elem` output
    then return 3
    else do
      let shared = fourMinusOne `intersect` output
      if length shared == 2
        then return 5
        else return 2

Length 6 Logic

The logic for length 6 strings is very similar. I wrote it a little differently in this function, but the idea is the same.

decode6 :: (MonadLogger m) => InputCode -> String -> MaybeT m Int
decode6 ic output = do
  (c01, c02, fourMinusOne) <- sortInputCodes ic
  -- If not both from c0 are present, it's a 6
  if not (c01 `elem` output && c02 `elem` output)
    then return 6
    else do
      -- If both of these characters are present in output, 9 else 0
      if all (`elem` output) fourMinusOne then return 9 else return 0

Wrapping Up

Now that we can decode an output string, we just have to be able to do this for all strings in our output. We just multiply their values by the appropriate power of 10.
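Before looking at the real function, it can help to run the whole idea on a single illustrative entry. The following is a condensed sketch under a few assumptions: plain Maybe instead of MaybeT, no logging, patterns passed as a list instead of InputCode, and a fold instead of explicit powers of 10. The names decodeDigit, digitsToInt and decodeEntry are hypothetical.

```haskell
import Data.List (foldl', intersect, sortOn, (\\))

-- Condensed, hypothetical decoder following the rules described above.
decodeDigit :: [String] -> String -> Maybe Int
decodeDigit patterns output =
  case sortOn length patterns of
    (one : _ : four : _)
      | length one == 2 && length four == 4 ->
          let hasOne = all (`elem` output) one
              hasFourMinusOne =
                length ((four \\ one) `intersect` output) == 2
           in case length output of
                2 -> Just 1
                3 -> Just 7
                4 -> Just 4
                7 -> Just 8
                5 | hasOne -> Just 3
                  | hasFourMinusOne -> Just 5
                  | otherwise -> Just 2
                6 | not hasOne -> Just 6
                  | hasFourMinusOne -> Just 9
                  | otherwise -> Just 0
                _ -> Nothing
    _ -> Nothing

-- Combine digits positionally: each step shifts the total one decimal
-- place to the left and adds the next digit.
digitsToInt :: [Int] -> Int
digitsToInt = foldl' (\acc d -> acc * 10 + d) 0

-- Split a line at the bar, decode every output string, combine the digits.
decodeEntry :: String -> Maybe Int
decodeEntry line =
  case break (== "|") (words line) of
    (patterns, _ : outputs) ->
      digitsToInt <$> mapM (decodeDigit patterns) outputs
    _ -> Nothing

main :: IO ()
main =
  print (decodeEntry
    "acedgfb cdfbe gcdfa fbcad dab cefabd cdfgeb eafb cagedb ab | cdfeb fcadb cdfeb cdbaf")
  -- Just 5353
```

For this entry the four outputs decode to 5, 3, 5 and 3, and the fold computes ((5 * 10 + 3) * 10 + 5) * 10 + 3 = 5353. The function that follows does the same arithmetic, but fixes the display at four digits and spells out the powers of 10 directly.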

decodeAllOutputs :: (MonadLogger m) => (InputCode, OutputCode) -> MaybeT m Int
decodeAllOutputs (ic, OutputCode o1 o2 o3 o4) = do
  d01 <- decodeString ic o1
  d02 <- decodeString ic o2
  d03 <- decodeString ic o3
  d04 <- decodeString ic o4
  return $ d01 * 1000 + d02 * 100 + d03 * 10 + d04

And now we can complete our "hard" function by decoding all these inputs and taking their sums.

solveDay8Hard :: String -> IO (Maybe Int)
solveDay8Hard fp = runStdoutLoggingT $ do
  inputCodes <- catMaybes <$> parseLinesFromFile parseInputLine fp
  results <- runStdoutLoggingT $ runMaybeT (mapM decodeAllOutputs inputCodes)
  return $ fmap sum results

Conclusion

That's all for this week! You can take a look at all this code on GitHub if you want! Here's the main solution module!

Next time, we'll go through another one of these problems! If you'd like to stay up to date with the latest on Monday Morning Haskell, subscribe to our mailing list! This will give you access to all our subscriber resources!

Haskell and Visual Studio

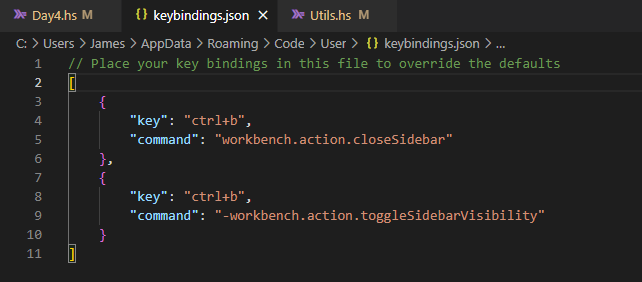

Last time around, we explored how to integrate Haskell with the Vim text editor, which offers a wealth of customization options. With some practice at the keyboard patterns, you can move around files and projects very quickly. But a pure textual editor isn't for everyone. So most of the IDEs out there use graphical interfaces that let you use the mouse.

Today we'll explore one of those options - Visual Studio Code (aka VS Code). In addition to being graphical, this editor also differs from Vim in that it is a commercial product. As we'll see, this brings about some pluses and minuses. One note I'll make is that I'm using VS Code to support Windows Subsystem for Linux, meaning I'm on a Windows machine. A lot of the keyboard shortcuts are different for Mac, so keep that in mind (even beyond simply substituting the "command" key for "control" and "option" for "alt").

Now, let's explore how we can satisfy all the requirements from our original IDE article using this editor!

Basic Features

First off, the basics. Opening new files in new tabs is quite easy. Using "Ctrl+P" brings up a search bar that lets you find anything in your project. Very nice and easy.