Starting out with Haskell Tensor Flow

Last week we discussed the burgeoning growth of AI systems. We saw several examples of how those systems are impacting our lives more and more. I made the case that we ought to focus more on reliability when making architecture choices. After all, people’s lives might be at stake when we write code now. Naturally, I suggested Haskell as a prime candidate for developing reliable AI systems.

So now we’ll actually write some Haskell machine learning code. We'll focus on the Tensor Flow bindings library. I first got familiar with this library back at BayHac in April. I’ve spent the last couple months learning both Tensor Flow as a whole and the Haskell library. In this first article, we’ll go over the basic concepts of Tensor Flow. We'll see how they’re implemented in Python (the most common language for TF). We'll then translate these concepts to Haskell.

Note this series will not be a general introduction to the concept of machine learning. There is a fantastic series on Medium about that called Machine Learning is Fun! If you’re interested in learning the basic concepts, I highly recommend you check out part 1 of that series. Many of the ideas in my own article series will be a lot clearer with that background.

Tensors

Tensor Flow is a great name because it breaks the library down into the two essential concepts. First up are tensors. These are the primary vehicle of data representation in Tensor Flow. Low-dimensional tensors are actually quite intuitive. But there comes a point when you can’t really visualize what’s going on, so you have to let the theoretical idea guide you.

In the world of big data, we represent everything numerically. And when you have a group of numbers, a programmer’s natural instinct is to put those in an array.

    [1.0, 2.0, 3.0, 6.7]

Now what do you do if you have a lot of different arrays of the same size and you want to associate them together? You make a 2-dimensional array (an array of arrays), which we also refer to as a matrix.

    [[1.0, 2.0, 3.0, 6.7],
     [5.0, 10.0, 3.0, 12.9],
     [6.0, 12.0, 15.0, 13.6],
     [7.0, 22.0, 8.0, 5.3]]

Most programmers are pretty familiar with these concepts. Tensors take this idea and keep extending it. What happens when you have a lot of matrices of the same size? You can group them together as an array of matrices. We could call this a three-dimensional matrix. But “tensor” is the term we’ll use for this data representation in all dimensions.

Every tensor has a degree (Tensor Flow's own documentation calls this the rank). We could start with a single number. This is a tensor of degree 0. Then a normal array is a tensor of degree 1. Then a matrix is a tensor of degree 2. Our last example would be a tensor of degree 3. And you can keep adding these on to each other, ad infinitum.

Every tensor has a shape. The shape is an array representing the dimensions of the tensor. The length of this array will be the degree of the tensor. So a number will have the empty list as its shape. An array will have a list of length 1 containing the length of the array. A matrix will have a list of length 2 containing its number of rows and columns. And so on. There are a few different ways we can represent tensors in code, but we'll get to that in a bit.
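As a rough illustration of degree and shape, here is a minimal Python sketch that treats nested lists as tensors. The helper names shape_of and degree_of are made up for this example and are not part of Tensor Flow. The sketch also assumes that every sub-list at a given level has the same length.

```python
def shape_of(tensor):
    """Return the shape of a tensor stored as nested Python lists."""
    shape = []
    current = tensor
    # Each level of nesting contributes one dimension.
    while isinstance(current, list):
        shape.append(len(current))
        if not current:
            break  # an empty list has no first element to descend into
        current = current[0]
    return shape


def degree_of(tensor):
    """The degree is simply the length of the shape."""
    return len(shape_of(tensor))


scalar = 6.7
vector = [1.0, 2.0, 3.0, 6.7]
matrix = [
    [1.0, 2.0, 3.0, 6.7],
    [5.0, 10.0, 3.0, 12.9],
    [6.0, 12.0, 15.0, 13.6],
    [7.0, 22.0, 8.0, 5.3],
]
cube = [matrix, matrix]  # two matrices of the same size grouped together

print(shape_of(scalar), degree_of(scalar))  # [] 0
print(shape_of(vector), degree_of(vector))  # [4] 1
print(shape_of(matrix), degree_of(matrix))  # [4, 4] 2
print(shape_of(cube), degree_of(cube))      # [2, 4, 4] 3
```

The single number has the empty list as its shape, and each extra level of grouping adds one entry to the front of the shape.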

Go with the Flow

The second important concept to understand is how Tensor Flow performs computations. Machine learning generally involves simple math operations. A lot of simple math operations. Since the scale is so large, we need to perform these operations as fast as possible. We need to use software and hardware that is optimized for this specific task. This necessitates having a low-level code representation of what’s going on. This is easier to achieve in a language like C, instead of Haskell or Python.

We could have the bulk of our code in Haskell, but perform the math in C using a Foreign Function Interface. But these interfaces have a large overhead, so this is likely to negate most of the gains we get from using C.

Tensor Flow’s solution to this problem is that we first build up a graph describing all our computations. Then once we have described that, we “run” our graph using a “session”. Thus it performs the entire language conversion process at once, so the overhead is lower.

If this sounds familiar, it's because this is how actions tend to work in Haskell (in some sense). We can, for instance, describe an IO action. And this action isn’t a series of commands that we execute the moment they show up in the code. Rather, the action is a description of the operations that our program will perform at some point. It’s also similar to the concept of Effectful programming. We’ll explore that topic in the future on this blog.

So what does our computation graph look like? Well, each tensor is a node. Then we can make other nodes for "operations" that take tensors as input. For instance, we can “add” two tensors together, and this is another node. We’ll see in our example how we build up the computational graph, and then run it.
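To make the "describe first, run later" idea concrete, here is a toy sketch in plain Python. It is not how Tensor Flow is implemented. The names Constant, Add and run are invented for this illustration, and the "graph" is just a tree of objects.

```python
class Constant:
    """A graph node that holds a fixed value."""
    def __init__(self, value):
        self.value = value


class Add:
    """A graph node describing the addition of two other nodes."""
    def __init__(self, left, right):
        self.left = left
        self.right = right


def run(node):
    """Walk the graph and compute the value of `node`."""
    if isinstance(node, Constant):
        return node.value
    if isinstance(node, Add):
        return run(node.left) + run(node.right)
    raise TypeError("unknown node type: %r" % (node,))


# Building the graph performs no arithmetic; it only records structure.
node1 = Constant(3.0)
node2 = Constant(4.0)
addition_node = Add(node1, node2)

# Only running the graph produces a number.
print(run(addition_node))  # 7.0
```

The point of the split is that whatever executes the graph sees the whole description at once, which is what lets a real system hand it off to optimized low-level code in one go.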

One of the awesome features of Tensor Flow is the Tensor Board application. It allows you to visualize your graph of computations. We’ll see how to do this later in the series.

Coding Tensors

So at this point we should start examining how we actually create tensors in our code. We’ll start by looking at how we do this in Python, since the concepts are a little easier to understand that way. There are three types of tensors we’ll consider. The first are “constants”. These represent a set of values that do not change. We can use these values throughout our model training process, and they'll be the same each time. Since we define the values for the tensor up front, there’s no need to give any size arguments. But we will specify the datatype that we’ll use for them.

    import tensorflow as tf

    node1 = tf.constant(3.0, dtype=tf.float32)
    node2 = tf.constant(4.0, dtype=tf.float32)

Now what can we actually do with these tensors? Well for a quick sample, let’s try adding them. This creates a new node in our graph that represents the addition of these two tensors. Then we can “run” that addition node to see the result. To encapsulate all our information, we’ll create a “Session”:

    import tensorflow as tf

    node1 = tf.constant(3.0, dtype=tf.float32)
    node2 = tf.constant(4.0, dtype=tf.float32)
    additionNode = tf.add(node1, node2)

    sess = tf.Session()
    result = sess.run(additionNode)
    print(result)

    """
    Output:
    7.0
    """

Next up are placeholders. These are values that we change each run. Generally, we will use these for the inputs to our model. By using placeholders, we'll be able to change the input and train on different values each time. When we “run” a session, we need to assign values to each of these nodes.

We don’t know the values that will go into a placeholder, but we still assign the type of data at construction. We can also assign a size if we like. So here’s a quick snippet showing how we initialize placeholders. Then we can assign different values with each run of the application. Even though our placeholder tensors don’t have values, we can still add them just as we could with constant tensors.

    node1 = tf.placeholder(tf.float32)
    node2 = tf.placeholder(tf.float32)
    adderNode = tf.add(node1, node2)

    sess = tf.Session()
    result1 = sess.run(adderNode, {node1: 3, node2: 4.5 })
    result2 = sess.run(adderNode, {node1: 2.7, node2: 8.9 })
    print(result1)
    print(result2)

    """
    Output:
    7.5
    11.6
    """

The last type of tensor we’ll use are variables. These are the values that will constitute our “model”. Our goal is to find values for these parameters that will make our model fit the data well. We’ll supply a data type, as always. In this situation, we’ll also provide an initial constant value. Normally, we’d want to use a random distribution of some kind. The tensor won’t actually take on its value until we run a global variable initializer function. We’ll have to create this initializer and then have our session object run it before we get going.

    w = tf.Variable([3], dtype=tf.float32)
    b = tf.Variable([1], dtype=tf.float32)

    sess = tf.Session()
    init = tf.global_variables_initializer()
    sess.run(init)

Now let’s use our variables to create a “model” of sorts. For this article we'll make a simple linear model. Let’s create additional nodes for our input tensor and the model itself. We’ll let w be the weights, and b be the “bias”. This means we’ll construct our final value by w*x + b, where x is the input.

    w = tf.Variable([3], dtype=tf.float32)
    b = tf.Variable([1], dtype=tf.float32)
    x = tf.placeholder(dtype=tf.float32)
    linear_model = w * x + b

Now, we want to know how good our model is. So let’s compare it to y, an input of our expected values. We’ll take the difference, square it, and then use the reduce_sum library function to get our “loss”. The loss measures the difference between what we want our model to represent and what it actually represents.

    w = tf.Variable([3], dtype=tf.float32)
    b = tf.Variable([1], dtype=tf.float32)
    x = tf.placeholder(dtype=tf.float32)
    linear_model = w * x + b
    y = tf.placeholder(dtype=tf.float32)
    squared_deltas = tf.square(linear_model - y)
    loss = tf.reduce_sum(squared_deltas)

Each line here is a different tensor, or a new node in our graph. We’ll finish up our model by using the built in GradientDescentOptimizer with a learning rate of 0.01. We’ll set our training step as attempting to minimize the loss function.

    optimizer = tf.train.GradientDescentOptimizer(0.01)
    train = optimizer.minimize(loss)

Now we’ll run the session, initialize the variables, and run our training step 1000 times. We’ll pass a series of inputs with their expected outputs. Let's try to learn the line y = 5x - 1. Our expected output y values will assume this.

    sess = tf.Session()
    init = tf.global_variables_initializer()
    sess.run(init)

    for i in range(1000):
        sess.run(train, {x: [1, 2, 3, 4], y: [4, 9, 14, 19]})

    print(sess.run([w, b]))

At the end we print the weights and bias, and we see our results!

    [array([ 4.99999475], dtype=float32), array([-0.99998516], dtype=float32)]

So we can see that our learned values are very close to the correct values of 5 and -1!
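For readers curious about what the training step is doing, the following plain-Python sketch runs the same experiment by hand: the same starting values (w = 3, b = 1), the same learning rate, the same data, and the same sum-of-squares loss. It is only an approximation of the idea. The function name train_linear_model is made up here, the gradients are derived by hand rather than computed from a graph, and the arithmetic is in double precision rather than float32.

```python
def train_linear_model(xs, ys, w=3.0, b=1.0, learning_rate=0.01, steps=1000):
    """Fit y = w * x + b by gradient descent on the sum of squared errors."""
    for _ in range(steps):
        # Error of the current model on each training example.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of loss = sum(error ** 2) with respect to w and b.
        grad_w = sum(2 * e * x for e, x in zip(errors, xs))
        grad_b = sum(2 * e for e in errors)
        # Take a small step against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


w, b = train_linear_model([1, 2, 3, 4], [4, 9, 14, 19])
print(w, b)  # both should land very close to 5 and -1
```

Each pass nudges w and b in the direction that reduces the loss, which is the same job the optimizer node performs inside the graph.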

Representing Tensors in Haskell

So now at long last, I’m going to get into some of the details of how we apply these tensor concepts in Haskell. Like strings and numbers, we can’t have this one “Tensor” type in Haskell, since that type could really represent some very different concepts. For a deeper look at the tensor types we’re dealing with, check out our in depth guide.

In the meantime, let’s go through some simple code snippets replicating our efforts in Python. Here’s how we make a few constants and add them together. Do note the “overloaded lists” extension. It allows us to represent different types with the same syntax as lists. We use this with both Shape items and Vectors:

    {-# LANGUAGE OverloadedLists #-}

    import Data.Vector (Vector)
    import TensorFlow.Ops (constant, add)
    import TensorFlow.Session (runSession, run)

    runSimple :: IO (Vector Float)
    runSimple = runSession $ do
      let node1 = constant [1] [3 :: Float]
      let node2 = constant [1] [4 :: Float]
      let additionNode = node1 `add` node2
      run additionNode

    main :: IO ()
    main = do
      result <- runSimple
      print result

    {-
    Output:
    [7.0]
    -}

We use the constant function, which takes a Shape and then the value we want. We’ll create our addition node and then run it to get the output, which is a vector with a single float. We wrap everything in the runSession function. This encapsulates the initialization and running actions we saw in Python.

Now suppose we want placeholders. This is a little more complicated in Haskell. We’ll be using two placeholders, as we did in Python. We’ll initialize them with the placeholder function and a shape. We’ll take arguments to our function for the input values. To actually pass the parameters to fill in the placeholders, we have to use what we call a “feed”.

We know that our adderNode depends on two values. So we’ll write our run-step as a function that takes in two “feed” values, one for each placeholder. Then we’ll assign those feeds to the proper nodes using the feed function. We’ll put these in a list, and pass that list as an argument to runWithFeeds. Then, we wrap up by calling our run-step on our input data. We’ll have to encode the raw vectors as tensors though.

    {-# LANGUAGE OverloadedLists #-}
    -- ScopedTypeVariables allows the type annotations on the left of <-
    {-# LANGUAGE ScopedTypeVariables #-}

    import TensorFlow.Core (Tensor, Value, feed, encodeTensorData)
    import TensorFlow.Ops (constant, add, placeholder)
    import TensorFlow.Session (runSession, run, runWithFeeds)

    import Data.Vector (Vector)

    runPlaceholder :: Vector Float -> Vector Float -> IO (Vector Float)
    runPlaceholder input1 input2 = runSession $ do
      (node1 :: Tensor Value Float) <- placeholder [1]
      (node2 :: Tensor Value Float) <- placeholder [1]
      let adderNode = node1 `add` node2
      let runStep = \node1Feed node2Feed -> runWithFeeds
            [ feed node1 node1Feed
            , feed node2 node2Feed
            ]
            adderNode
      runStep (encodeTensorData [1] input1) (encodeTensorData [1] input2)

    main :: IO ()
    main = do
      result1 <- runPlaceholder [3.0] [4.5]
      result2 <- runPlaceholder [2.7] [8.9]
      print result1
      print result2

    {-
    Output:
    [7.5]
    [11.599999] -- Yay rounding issues!
    -}

Now we’ll wrap up by going through the simple linear model scenario we already saw in Python. Once again, we’ll take two vectors as our inputs. These will be the values we try to match. Next, we’ll use the initializedVariable function to get our variables. We don’t need to call a global variable initializer. But this does affect the state of the session. Notice that we pull it out of the monad context, rather than using let. (We also did this for placeholders.)

    {-# LANGUAGE OverloadedLists #-}
    {-# LANGUAGE ScopedTypeVariables #-}

    import TensorFlow.Core (Tensor, Value, feed, encodeTensorData, Scalar(..))
    import TensorFlow.Ops (constant, add, placeholder, sub, reduceSum, mul)
    import TensorFlow.GenOps.Core (square)
    import TensorFlow.Variable (readValue, initializedVariable, Variable)
    import TensorFlow.Session (runSession, run, runWithFeeds)
    import TensorFlow.Minimize (gradientDescent, minimizeWith)

    import Control.Monad (replicateM_)
    import qualified Data.Vector as Vector
    import Data.Vector (Vector)

    runVariable :: Vector Float -> Vector Float -> IO (Float, Float)
    runVariable xInput yInput = runSession $ do
      let xSize = fromIntegral $ Vector.length xInput
      let ySize = fromIntegral $ Vector.length yInput
      (w :: Variable Float) <- initializedVariable 3
      (b :: Variable Float) <- initializedVariable 1
      ...

Next, we’ll make our placeholders and linear model. Then we’ll calculate our loss function in much the same way we did before. Then we’ll use the same feed trick to get our placeholders plugged in.

    runVariable :: Vector Float -> Vector Float -> IO (Float, Float)
    ...
      (x :: Tensor Value Float) <- placeholder [xSize]
      let linear_model = ((readValue w) `mul` x) `add` (readValue b)
      (y :: Tensor Value Float) <- placeholder [ySize]
      let square_deltas = square (linear_model `sub` y)
      let loss = reduceSum square_deltas
      trainStep <- minimizeWith (gradientDescent 0.01) loss [w,b]
      let trainWithFeeds = \xF yF -> runWithFeeds
            [ feed x xF
            , feed y yF
            ]
            trainStep
      ...

Finally, we’ll run our training step 1000 times on our input data. Then we’ll run our model one more time to pull out the values of our weights and bias. Then we’re done!

    runVariable :: Vector Float -> Vector Float -> IO (Float, Float)
    ...
      replicateM_ 1000
        (trainWithFeeds (encodeTensorData [xSize] xInput) (encodeTensorData [ySize] yInput))
      (Scalar w_learned, Scalar b_learned) <- run (readValue w, readValue b)
      return (w_learned, b_learned)

    main :: IO ()
    main = do
      results <- runVariable [1.0, 2.0, 3.0, 4.0] [4.0, 9.0, 14.0, 19.0]
      print results

    {-
    Output:
    (4.9999948,-0.99998516)
    -}

Conclusion

Hopefully this article gave you a taste of some of the possibilities of Tensor Flow in Haskell. We saw a quick introduction to the fundamentals of Tensor Flow. We saw three different kinds of tensors. We then saw code examples both in Python and in Haskell. Finally, we went over a very quick example of a simple linear model and saw how we could learn values to fit that model. Next week, we’ll do a more complicated learning problem. We’ll use the classic “Iris” flower data set and train a classifier using a full neural network.

If you want more details, you should check out our free Haskell Tensor Flow Guide. It will walk you through using the Tensor Flow library as a dependency and getting a basic model running!

Perhaps you’re completely new to Haskell but intrigued by the possibilities of using it for machine learning or anything else. You should download our Getting Started Checklist! It has some great resources on installing Haskell and learning the core concepts.

The Future is Functional: Haskell and the AI-Native World

As regular readers of this blog know, I love talking about the future of Haskell as a language. I’m interested in ways we can shape the future of programming in a way that will help Haskell grow. I've mentioned network effects as a major hindrance a couple different times. Companies are reluctant to try Haskell since there aren't that many Haskell developers. As a result, fewer other developers will have the opportunity to get paid to learn Haskell. And the cycle continues.

Many perfectly smart people also have a bias against using Haskell in production code for a business. This stems from the idea that Haskell is an academic language. They see it as unsuited to “Real World” problems. The best rebuttal to this point is to show the many uses of Haskell in creating systems that people use every day. Now, I can sit here and point to the ease of creating web servers in Haskell. I could also point to the excellent mechanisms for designing front-end UIs. But there’s still one vital area in the future of programming that I have yet to address.

This is, of course, the world of AI and machine learning. AI is slowly (or not so slowly) becoming a primary concern for pretty much any software-based business. The last 5-10 years have seen the rise of “cloud native” architectures and systems. But we will soon be living in an age when all major software systems will use AI and machine learning at their core. In short, we are about to enter the AI Native Future, as my company’s founder put it.

This will be the first in a series of articles where I explore the uses of Haskell in writing AI applications. In the coming weeks I’ll be focusing on using the Tensor Flow bindings for Haskell. Tensor Flow allows programmers to build simple but powerful applications. There are many tutorials in Python, but the Haskell library is still in early stages. So I'll go through the core concepts of this library and show their usage in Haskell.

But for me it’s not enough to show that we can use Haskell for AI applications. That’s hardly going to move the needle or change the status quo. My ultimate goal is to prove that it’s the best tool for these kinds of systems. But first, let’s get an idea of where AI is being used, and why it’s so important.

AI Will be Everywhere...

...and this doesn’t seem to be particularly controversial. Year-on-year there are more and more discoveries in AI research. We are now able to solve problems that were scarcely thinkable a few years ago. Advancements in NLP systems like IBM Watson have made it so that chatbots are popping up all over the place. Tensor Flow has put advanced deep learning techniques at the fingertips of every programmer. Systems are getting faster and faster.

On top of that, the implications for the general public are becoming more well known. Self-driving cars are roaming the streets in several major American cities. The idea of autonomous Amazon drones making deliveries seems a near certainty. Millions of people entrust part of their home systems to joint IoT/AI devices like Nest. AI is truly going to be everywhere soon.

AI Needs to be Safe

But the ubiquity of AI presents a large number of concerns as well. Software engineers are going to have a lot more responsibility. Our code will have to make difficult and potentially life-altering decisions. For instance, the design of self-driving cars carries many philosophical implications.

The plot thickens even more when we mix AI with the Internet of Things, another exploding market. In the last year, an attack brought down large parts of the internet using a bot-net of IoT devices. In the world of IoT, security still does not have paramount importance. But soon, more and more people will have cameras, audio recording devices, fire alarms and security systems hooked up to the internet. When this happens, their safety and privacy will depend on IoT security.

The need for safety and security suggests we may need to re-think some software paradigms. "Move fast and break things" is the prevailing mindset in many quarters. But this idea doesn't look so good if "breaking things" means someone's house burns.

Pure, Functional Programming is the Path to Safety

So how does this relate to Haskell? Well, let’s consider the tradeoffs we face when we choose what language to develop in. Haskell, with its strong type system and compile-time guarantees, is more reliable. We can catch a lot more errors at compile time when compared to languages like JavaScript or Python. In these languages, non-syntactic issues tend to only pop up at runtime. Programmers must lean even more heavily on testing systems to catch possible errors. But testing is difficult, and there’s still plenty of disagreement about the best methodologies.

The flip side of this is that it’s somewhat easier to write code in a language like Javascript. It's easier to cut corners in the type system and have more “dynamic” objects. So while we all want “reliable” software, we’re often willing to compromise to get code off the ground faster. This is the epitome of the "Move fast and break things" mindset.

However, the explosion in the safety concerns of our software has elevated the stakes. If someone’s web browser crashes from Javascript, it's no big deal. The user will reload the page and hopefully not trigger that condition again. If your app stops responding, your user might get frustrated and you’ll lose a customer. But when programming starts penetrating other markets, any error could be catastrophic. If a self driving car encounters a sudden bug and the system crashes, many people could die. So it is our ethical responsibility to figure out ways to make it impossible for our software to encounter these kinds of errors.

Haskell is in many respects a very safe language. This is why it’s trusted by large financial institutions, large data science firms, and even by a company working in autonomous flight control. When your code cannot have arbitrary side effects, it is far easier to prevent it from crashing. It is also easier to secure a system (like an IoT device) when you can prevent leaks from arbitrary effects. Often these techniques are present in Haskell but not other languages.

The field of dependent types is yet another area where we’ll be able to add more security to our programming. They'll enable even more compile-time guarantees of behavior. This can add a great deal of safety when used well. Haskell doesn’t have full support for dependent types yet, but it is in the works. In the meantime there are languages like Idris with first class support.

Of course, when it comes to AI and deep learning, getting these guarantees will be difficult. It's one thing to build a type that ensures you have a vector of a particular length. It's quite another to build a type ensuring that when your car sees a dozen people standing ahead of it, it must brake. But these are the sorts of challenges programmers will need to face in the AI Native Future. And if we want to ensure Haskell’s place in that future, we’ll have to show these results are possible.

Conclusion

It's obvious that AI and machine learning are the big fields of software engineering. They’ll continue to dominate the field for a long time. They can have an incredible impact on our lives. But by allowing this impact, we’re putting more of our safety in the hands of software engineers. This has major implications for how we develop software.

We often have to make tradeoffs between ease of development and reliability. A language like Haskell can offer us a lot of compile time guarantees about the behavior of our program. These guarantees are absent from many other languages. However, achieving these guarantees can introduce more pain into the development process.

But soon our code will be controlling things like self-driving cars, delivery drones, and home security devices. So we have an ethical responsibility to do everything in our power to make our code as reliable as possible. For this reason, Haskell is in a prime position when it comes to the AI Native Future. Taking advantage of this will require a lot of work. Haskell programmers will have to develop language tools like dependent types to make Haskell even more reliable. We'll also have to contribute to libraries that will make it easy to write machine learning applications in Haskell.

With this in mind, the next few articles on this blog are all going to focus on using Haskell for machine learning. We’ll be starting by going through the basics of the Tensor Flow bindings for Haskell. You can get a sneak peek at some of that content by downloading our Haskell Tensor Flow tutorial!

If you’ve never programmed in Haskell before, you should try it out! We have two great resources for getting started. First, there’s the Getting Started Checklist. It will first walk you through downloading the language. Then it will point you in the direction of some other beginner materials. Second, there’s our Stack mini-course. This will walk you through the Stack tool, which makes it very easy to build projects, organize code, and get dependencies with Haskell.

Coping with (Code) Failures

Exception handling is annoying. It would be completely unnecessary if everything in the world worked the way it's supposed to. But of course that would be a fantasy. Haskell can’t change reality. But its error facilities are a lot better than most languages. This week we'll look at some common error handling patterns. We’ll see a couple clear instances where Haskell has simpler and cleaner code.

Using Nothing

The most basic example we have in Haskell is the Maybe type. This allows us to encapsulate any computation at all with the possibility of failure. Why is this better than similar ideas in other languages? Well let’s take Java for example. It’s easy enough to encapsulate Maybe when you’re dealing with pointer types. You can use the “null” pointer to be your failure case.

    public MyObject squareRoot(int x) {
      if (x < 0) {
        return null;
      } else {
        return new MyObject(Math.sqrt(x));
      }
    }

But this has a few disadvantages. First of all, null pointers (in general) look the same as regular pointers to the type checker. This means you get no compile time guarantees that ANY of the pointers you’re dealing with aren’t null. Imagine if we had to wrap EVERY Haskell value in a Maybe. We would need to CONSTANTLY unwrap them or else risk tripping a “null-pointer-exception”. In Haskell, once we’ve handled the Nothing case once, we can pass on a pure value. This allows other code to know that it will not throw random errors. Consider this example. We check that our pointer is non-null once already in function1. Despite this, good programming practice dictates that we perform another check in function2.

    public void function1(MyObject obj) {
      if (obj == null) {
        // Deal with error
      } else {
        function2(obj);
      }
    }

    public void function2(MyObject obj) {
      if (obj == null) {
        // ^^ We should be able to make this call redundant
      } else {
        // …
      }
    }

The second sticky point comes up when we’re dealing with non-pointer, primitive values. We often don't have a good way to handle these cases. Suppose your function returns an int, but it might fail. How do you represent failure? It’s not uncommon to see side cases like this handled by using a “sentinel” value, like 0 or -1.

But if the range of your function spans all the integers, you’re a little bit stuck there. The code might look cleaner if you use an enumerated type, but this doesn’t avoid the problem. The same problem can even crop up with pointer values if null is valid in the particular context.

    public int integerSquareRoot(int x) {
      if (x < 0) {
        return -1;
      } else {
        // Math.round(double) returns a long, so cast back to int
        return (int) Math.round(Math.sqrt(x));
      }
    }

    // The method name here is illustrative
    public void useSquareRoot(int a) {
      int result = integerSquareRoot(a);
      if (result == -1) {
        // Deal with error
      } else {
        // Use correct value
      }
    }

Finally, monadic composition with Maybe is much more natural in Haskell. There are many examples of this kind of spaghetti in Java code:

    public Result computation1(MyObject value) {
      …
    }

    public Result computation2(Result res) {
      …
    }

    public int intFromResult(Result res) {
      …
    }

    public int spaghetti(MyObject value) {
      if (value != null) {
        Result result1 = computation1(value);
        if (result1 != null) {
          Result result2 = computation2(result1);
          if (result2 != null) {
            return intFromResult(result2);
          }
        }
      }
      return -1;
    }

Now if we’re being naive, we might end up with a not-so-pretty version ourselves:

    computation1 :: MyObject -> Maybe Result
    computation2 :: Result -> Maybe Result
    intFromResult :: Result -> Int

    spaghetti :: Maybe MyObject -> Maybe Int
    spaghetti value = case value of
      Nothing -> Nothing
      Just realValue -> case computation1 realValue of
        Nothing -> Nothing
        Just result1 -> case computation2 result1 of
          Nothing -> Nothing
          Just result2 -> return $ intFromResult result2

But as we discussed in our first Monads article, we can make this much cleaner. We'll compose our actions within the Maybe monad:

    cleanerVersion :: Maybe MyObject -> Maybe Int
    cleanerVersion value = do
      realValue <- value
      result1 <- computation1 realValue
      result2 <- computation2 result1
      return $ intFromResult result2

Using Either

Now suppose we want to make our errors contain a bit more information. In the example above, we’ll output Nothing if it fails. But code calling that function will have no way of knowing what the error actually was. This might hinder our code's ability to correct the error. We'll also have no way of reporting a specific failure to the user. As we’ve explored, Haskell’s answer to this is the Either monad. This allows us to attach a value of any type as a possible failure. In this case, we'll change the type of each function. We would then update the functions to use a descriptive error message instead of returning Nothing.

    computation1 :: MyObject -> Either String Result
    computation2 :: Result -> Either String Result
    intFromResult :: Result -> Int

    eitherVersion :: Either String MyObject -> Either String Int
    eitherVersion value = do
      realValue <- value
      result1 <- computation1 realValue
      result2 <- computation2 result1
      return $ intFromResult result2

Now suppose we want to try to make this happen in Java. How do we do this? There are a few options I’m aware of. None of them are particularly appetizing.

- Print an error message when the failure condition pops up.

- Update a global variable when the failure condition pops up.

- Create a new data type that could contain either an error value or a success value.

- Add a parameter to the function whose value is filled in with an error message if failure occurs.

The first couple rely on arbitrary side effects. As Haskell programmers we aren’t fans of those. The third option would require messing with Java’s generic types. These are far more difficult to work with than Haskell’s parameterized types. If we don't take this approach, we'd need a new type for every different return value.

The last method is a bit of an anti-pattern, making up for the fact that tuples aren’t a first class construct in Java. It’s quite counter-intuitive to check one of your input parameters for what is really an output result. So with these options, give me Haskell any day.
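To make the third option from the list above a little more concrete, here is a rough sketch of such a "success or error" type. It is written in Python rather than Java purely to keep it short. Result, then, integer_square_root and reciprocal are all hypothetical names invented for this illustration. The then method imitates the short-circuiting we get for free from the Either monad, though nothing here forces the caller to check for failure the way Haskell's type checker does.

```python
import math


class Result:
    """Holds either a success value or an error message."""
    def __init__(self, value=None, error=None):
        self.value = value
        self.error = error

    @property
    def ok(self):
        return self.error is None

    def then(self, func):
        """Apply `func` (which returns a Result) unless we already failed."""
        return func(self.value) if self.ok else self


def integer_square_root(x):
    if x < 0:
        return Result(error="cannot take the square root of %d" % x)
    return Result(value=math.isqrt(x))


def reciprocal(x):
    if x == 0:
        return Result(error="cannot divide by zero")
    return Result(value=1.0 / x)


good = integer_square_root(16).then(reciprocal)
bad = integer_square_root(-4).then(reciprocal)

print(good.ok, good.value)  # True 0.25
print(bad.ok, bad.error)    # False cannot take the square root of -4
```

Once a step fails, every later step is skipped and the first error message is carried through to the end, which is the behavior the eitherVersion function gets from do-notation.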

Using Exceptions and Handlers

Now that we understand the more “pure” ways of handling error cases in our code, we can deal with exceptions. Exceptions show up in almost every major programming language; Haskell is no different. Haskell has the SomeException type that encapsulates possible failure conditions. It can wrap any type that is a member of the Exception typeclass. You'll generally be creating your own exception types.
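
Here's a small sketch of what that wrapping looks like in practice. The ParseFailure type and its constructors are invented for this example. The functions toException and fromException are the Exception class methods from Control.Exception that convert to and from SomeException.

```haskell
import Control.Exception (Exception, SomeException, fromException, toException)

-- Hypothetical exception type for illustration
data ParseFailure
  = EmptyInput
  | BadCharacter Char
  deriving (Show, Eq)

-- An empty instance is enough; the class methods have defaults
instance Exception ParseFailure

-- Wrap our specific exception in the general SomeException type
wrapped :: SomeException
wrapped = toException (BadCharacter '?')

-- Try to recover our specific type; Nothing means it was some other exception
unwrapAsParseFailure :: SomeException -> Maybe ParseFailure
unwrapAsParseFailure = fromException
```

Handlers rely on this same conversion to decide whether a thrown exception is one they know how to deal with.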

Generally, we throw exceptions when we want to state that a path of code execution has failed. Instead of returning some value to the calling function, we'll allow completely different code to handle the error. If this sounds convoluted, that’s because it kind of is. In general you want to keep the control flow as clear as possible. Sometimes though we cannot avoid it.

So let’s suppose we’re calling a function we know might throw a particular exception. We can “handle” that exception by attaching a handler. In Java, you do this pattern like so:

public int integerSquareRoot(int value) throws NegativeSquareRootException {
  ...
}

public int mathFunction(int x) {
  try {
    return 2 * integerSquareRoot(x);
  } catch (NegativeSquareRootException e) {
    // Deal with invalid result
  }
}

To handle exceptions in this manner in Haskell, you need access to the IO monad. The most general way to handle exceptions is to use the catch function. When you call the action that might throw the exception, you include a “handler” function. This function will take the exception as an argument and deal with the case. If we want to write the above example in Haskell, we should first define our exception type. Once the type derives Show, we can declare an instance of the Exception typeclass without defining any methods:

import Control.Exception (Exception)

data MyException = NegativeSquareRootException
  deriving (Show)

instance Exception MyException

Now we can write a pure function that will throw this exception in the proper circumstances.

import Control.Exception (Exception, throw)

integerSquareRoot :: Int -> Int
integerSquareRoot x
  | x < 0 = throw NegativeSquareRootException
  | otherwise = undefined

While we can throw the exception from pure code, we need to be in the IO monad to catch it. We’ll do this with the catch function. We’ll use a handler function that will only catch the specific error we’re expecting. It will print the error as a message and then return a dummy value.

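import Control.Exception (Exception, throw, catch, evaluate)

…

mathFunction :: Int -> IO Int
mathFunction input = do
  -- evaluate forces the result while we're still inside catch. With a plain
  -- return, laziness would delay the exception until after the handler is gone.
  catch (evaluate $ integerSquareRoot input) handler
  where
    handler :: MyException -> IO Int
    handler NegativeSquareRootException =
      putStrLn "Can't call square root on a negative number!" >> return (-1)

MonadThrow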

We can also generalize this process a bit to work in different monads. The MonadThrow typeclass allows us to specify different exceptional behaviors for different monads. For instance, Maybe throws exceptions by using Nothing. Either uses Left, and IO will use throwIO. When we’re in a general MonadThrow function, we throw exceptions with throwM.

callWithMaybe :: Maybe Int
callWithMaybe = integerSquareRoot (-5) -- Gives us `Nothing`

callWithEither :: Either SomeException Int
callWithEither = integerSquareRoot (-5) -- Gives us `Left NegativeSquareRootException`

callWithIO :: IO Int
callWithIO = integerSquareRoot (-5) -- Throws an error as normal

integerSquareRoot :: (MonadThrow m) => Int -> m Int
integerSquareRoot x
  | x < 0 = throwM NegativeSquareRootException
  | otherwise = ...

There is some debate about whether the extra layers of abstraction are that helpful. There is a strong case to be made that if you’re going to be using exceptional control flow, you should be using IO anyway. But using MonadThrow can make your code more extensible. Your function might be usable in more areas of your codebase. I’m not too opinionated on this topic (not yet at least). But there are certainly some strong opinions within the Haskell community.
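
If you want to play with this yourself, here's a self-contained sketch. It assumes the exceptions package, which provides MonadThrow and throwM in Control.Monad.Catch. The square root body (floor of a Double sqrt) is my own stand-in since the snippet above leaves it elided, and asMaybe, asEither and describe are hypothetical helper names.

```haskell
import Control.Exception (Exception, SomeException, fromException)
import Control.Monad.Catch (MonadThrow, throwM)

data MyException = NegativeSquareRootException
  deriving (Show, Eq)

instance Exception MyException

-- One definition, usable in any monad that knows how to "throw"
integerSquareRoot :: MonadThrow m => Int -> m Int
integerSquareRoot x
  | x < 0 = throwM NegativeSquareRootException
  -- Assumed implementation: fine for small inputs, imprecise for huge ones
  | otherwise = return (floor (sqrt (fromIntegral x :: Double)))

-- In Maybe, throwM becomes Nothing
asMaybe :: Int -> Maybe Int
asMaybe = integerSquareRoot

-- In Either SomeException, throwM becomes Left
asEither :: Int -> Either SomeException Int
asEither = integerSquareRoot

-- Recover our specific exception from the SomeException wrapper
describe :: Either SomeException Int -> String
describe (Right n) = "ok: " ++ show n
describe (Left e) = case fromException e of
  Just NegativeSquareRootException -> "negative input"
  Nothing -> "other failure"
```

Notice that the caller picks the failure behavior purely through the type signature. The function body never changes.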

Summary

Error handling is tricky business. A lot of the common programming patterns around error handling are annoying to write. Luckily, Haskell has several different means of doing this. In Haskell, you can express errors using simple mechanisms like Maybe and Either. Their monadic behavior gives you a high degree of composability. You can also throw and catch exceptions like you can in other languages. But Haskell has some more general ways to do this. This allows you to be agnostic to how functions within your code handle errors.

New to Haskell? Amazed by its awesomeness and want to try? Download our Getting Started Checklist! It has some awesome tools and instructions for getting Haskell on your computer and starting out.

Have you tried Haskell but want some more practice? Check out our Recursion Workbook for some great content and 10 practice problems!

And stay tuned to the Monday Morning Haskell blog!

Getting the User's Opinion: Options in Haskell

GUIs are hard to create. Luckily for us, we can often get away with making our code available through a command line interface. As you start writing more Haskell programs, you'll probably have to do this at some point.

This article will go over some of the ins and outs of CLIs. In particular, we’ll look at the basics of handling options. Then we'll see some nifty techniques for actually testing the behavior of our CLI.

A Simple Sample

To motivate the examples in this article, let’s design a simple program. We’ll have the user input a message. Then we’ll print the message to a file a certain number of times and list the user’s name as the author at the top. We’ll also allow them to uppercase the message if they want. So we’ll get five pieces of input from the user:

- The filename they want

- Their name to place at the top

- Whether they want to uppercase or not

- The message

- The repetition number

We’ll use arguments and options for the first three pieces of information. Then we'll have a command line prompt for the other two. For instance, we’ll insist the user pass the expected file name as an argument. Then we’ll take an option for the name the user wants to put at the top. Finally, we’ll take a flag for whether the user wants the message upper-cased. So here are a few different invocations of the program.

>> run-cli "myfile.txt" -n "John Doe"
What message do you want in the file?
Sample Message
How many times should it be repeated?
5

This will print the following output to myfile.txt:

From: John Doe
Sample Message
Sample Message
Sample Message
Sample Message
Sample Message

Here’s another run, this time with an error in the input:

>> run-cli "myfile2.txt" -n "Jane Doe" -u
What message do you want in the file?
A new message
How many times should it be repeated?
asdf
Sorry, that isn't a valid number. Please enter a number.
3

This file will look like:

From: Jane Doe
A NEW MESSAGE
A NEW MESSAGE
A NEW MESSAGE

Finally, if we don’t get the right arguments, we should get a usage error:

>> run-cli
Missing: FILENAME -n USERNAME

Usage: CLIPractice-exe FILENAME -n USERNAME [-u]
  Command Line Sample Program

Getting the Input

So the most important aspect of the program is getting the message and repetitions. We’ll ignore the options for now. We’ll print a couple messages, and then use the getLine function to get their input. There’s no way for them to give us a bad message, so this section is easy.

getMessage :: IO String
getMessage = do
  putStrLn "What message do you want in the file?"
  getLine

But they might try to give us a number we can’t actually parse. So for this task, we’ll have to set up a loop where we keep asking the user for a number until they give us a good value. This will be recursive in the failure case. If the user won’t enter a valid number, they’ll have no choice but to terminate the program by other means.

getRepetitions :: IO Int
getRepetitions = do
  putStrLn "How many times should it be repeated?"
  getNumber

getNumber :: IO Int
getNumber = do
  rep <- getLine
  case readMaybe rep of
    Nothing -> do
      putStrLn "Sorry, that isn't a valid number. Please enter a number."
      getNumber
    Just i -> return i

Once we’re done reading the input, we’ll print the output to a file. In this instance, we hard-code all the options for now. Here’s the full program.

import Data.Char (toUpper)
import System.IO (writeFile)
import Text.Read (readMaybe)

runCLI :: IO ()
runCLI = do
  let fileName = "myfile.txt"
  let userName = "John Doe"
  let isUppercase = False
  message <- getMessage
  reps <- getRepetitions
  writeFile fileName (fileContents userName message reps isUppercase)

fileContents :: String -> String -> Int -> Bool -> String
fileContents userName message repetitions isUppercase = unlines $
  ("From: " ++ userName) :
  (replicate repetitions finalMessage)
  where
    finalMessage = if isUppercase
      then map toUpper message
      else message

Parsing Options

Now we have to deal with the question of how we actually parse the different options. We can do this by hand with the getArgs function, but this is somewhat error prone. A better option in general is to use the Options.Applicative library. We’ll explore the different possibilities this library allows. We’ll use three different helper functions for the three pieces of input we need.
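
To see why the by-hand route gets error prone, here's a rough sketch of what it might look like. It works on a plain list of strings, which is what getArgs from System.Environment returns. The ManualOptions record, the parseArgsByHand function and the error messages are all hypothetical. Notice how many cases we have to think about for just three inputs.

```haskell
-- Hypothetical record mirroring the three inputs we want
data ManualOptions = ManualOptions
  { manualFileName :: FilePath
  , manualUserName :: String
  , manualUppercase :: Bool
  } deriving (Show, Eq)

-- Walk the raw argument list, tracking what we've seen so far
parseArgsByHand :: [String] -> Either String ManualOptions
parseArgsByHand args = go args Nothing Nothing False
  where
    -- Out of arguments: succeed only if both required pieces are present
    go [] (Just file) (Just user) upper = Right (ManualOptions file user upper)
    go [] Nothing _ _ = Left "Missing: FILENAME"
    go [] _ Nothing _ = Left "Missing: -n USERNAME"
    -- The uppercase flag takes no value
    go ("-u" : rest) file user _ = go rest file user True
    -- The name option must be followed by a value
    go ["-n"] _ _ _ = Left "-n needs a value"
    go ("-n" : name : rest) file _ upper = go rest file (Just name) upper
    -- Anything else is the positional filename, allowed only once
    go (arg : rest) Nothing user upper = go rest (Just arg) user upper
    go (arg : _) (Just _) _ _ = Left ("Unexpected argument: " ++ arg)
```

And this still has no help text, no long option names, and no generated usage message. Those are the things a library gives us for free.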

The first thing we’ll do is build a data structure to hold the different options we want. We want to know the file name to store at, the name at the top, and the uppercase status.

data CommandOptions = CommandOptions
  { fileName :: FilePath
  , userName :: String
  , isUppercase :: Bool }

Now we need to parse each of these. We’ll start with the uppercase value. The simplest parser we have is the flag function. If a particular flag (we’ll call it -u) is present, we’ll uppercase the message. Otherwise we won't. It gets coded like this with the Options library:

uppercaseParser :: Parser Bool
uppercaseParser = flag False True (short 'u')

Notice we use short in the final argument to denote the flag character. We could also use the switch function, since this flag is only a boolean, but this version is more general.

Now we’ll move on to the argument for the filename. This uses the argument helper function. We’ll use a string parser (str) to ensure we get the actual string. We won’t worry about the filename having a particular format here. Notice we add some metadata to this argument. This tells the user what they are missing if they don’t use the proper format.

fileNameParser :: Parser FilePath
fileNameParser = argument str (metavar "FILENAME")

Finally, we’ll deal with the option of what name will go at the top. We could also do this as an argument, but let’s see what the option is like. An argument is a required positional parameter. An option on the other hand comes after a particular flag. We add metadata here as well for a better error message. The short piece of our metadata ensures it will use the option character we want.

userNameParser :: Parser String
userNameParser = option str (short 'n' <> metavar "USERNAME")

Now we have to combine these different parsers and add a little more info about our program.

import Options.Applicative (execParser, info, helper, Parser, fullDesc,
  progDesc, short, metavar, flag, argument, str, option)

parseOptions :: IO CommandOptions
parseOptions = execParser $ info (helper <*> commandOptsParser) commandOptsInfo
  where
    commandOptsParser = CommandOptions <$> fileNameParser <*> userNameParser <*> uppercaseParser
    commandOptsInfo = fullDesc <> progDesc "Command Line Sample Program"

-- Revamped to take options
runCLI :: CommandOptions -> IO ()
runCLI commandOptions = do
  let file = fileName commandOptions
  let user = userName commandOptions
  let uppercase = isUppercase commandOptions
  message <- getMessage
  reps <- getRepetitions
  writeFile file (fileContents user message reps uppercase)

And now we’re done! We build our command object using these three different parsers. We chain the operations together using applicatives! Then we pass the result to our main program. If you aren’t too familiar with functors and applicatives, we went over these a while ago on the blog. Refresh your memory!
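
If the applicative chaining looks mysterious, it can help to see the same shape with a simpler Applicative. The sketch below uses Maybe as a stand-in for Parser. That substitution is purely for illustration (a real Parser does much more), and buildOptions is a hypothetical name.

```haskell
data CommandOptions = CommandOptions
  { fileName :: FilePath
  , userName :: String
  , isUppercase :: Bool
  } deriving (Show, Eq)

-- Each Maybe plays the role of one "parser" that either produced a value or failed.
-- The constructor is applied only if all three pieces are present.
buildOptions :: Maybe FilePath -> Maybe String -> Maybe Bool -> Maybe CommandOptions
buildOptions file user upper = CommandOptions <$> file <*> user <*> upper
```

With three Just values we get a complete CommandOptions back. If any one of them is Nothing, the whole result is Nothing, much like a missing required argument fails the whole parse.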

IO Testing

Now that we have our program working, we need to ask ourselves how we test its behavior. We can do manual command line tests ourselves, but it would be nice to have an automated solution. The key to this is the Handle abstraction.

Let’s first look at some basic file handling types.

openFile :: FilePath -> IOMode -> IO Handle

hGetLine :: Handle -> IO String

hPutStrLn :: Handle -> String -> IO ()

hClose :: Handle -> IO ()

Normally when we write something to a file, we open a handle for it. We use the handle (instead of the string literal name) for all the different operations. When we’re done, we close the handle.
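
As a quick sketch of that open, use, close pattern, here's a function that writes two lines through one handle and reads the first back through another. The function name and the lines it writes are made up for the example. The caller supplies the path.

```haskell
import System.IO

-- Write two lines through a handle, close it, then read the first line back
writeThenReadFirstLine :: FilePath -> IO String
writeThenReadFirstLine path = do
  -- Open for writing; every later operation goes through the handle
  outHandle <- openFile path WriteMode
  hPutStrLn outHandle "Sample Message"
  hPutStrLn outHandle "Second line"
  hClose outHandle
  -- Re-open the same file for reading
  inHandle <- openFile path ReadMode
  firstLine <- hGetLine inHandle
  hClose inHandle
  return firstLine
```

In real code you might prefer withFile, which closes the handle for you even if an exception occurs. The explicit version just makes each step visible.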

The good news is that the stdin and stdout streams are actually the exact same Handle type under the hood!

stdin :: Handle

stdout :: Handle

How does this help us test? The first step is to abstract away the handles we’re working with. Instead of using putStrLn and getLine, we’ll want to use hPutStrLn and hGetLine. Then we take these handles as arguments to our program and functions. Let’s look at our reading functions:

getMessage :: Handle -> Handle -> IO String
getMessage inHandle outHandle = do
  hPutStrLn outHandle "What message do you want in the file?"
  hGetLine inHandle

getRepetitions :: Handle -> Handle -> IO Int
getRepetitions inHandle outHandle = do
  hPutStrLn outHandle "How many times should it be repeated?"
  getNumber inHandle outHandle

getNumber :: Handle -> Handle -> IO Int
getNumber inHandle outHandle = do
  rep <- hGetLine inHandle
  case readMaybe rep of
    Nothing -> do
      hPutStrLn outHandle "Sorry, that isn't a valid number. Please enter a number."
      getNumber inHandle outHandle
    Just i -> return i

Once we’ve done this, we can make the input and output handles parameters to our program as follows. Our wrapper executable will pass stdin and stdout:

-- Library File:
runCLI :: Handle -> Handle -> CommandOptions -> IO ()
runCLI inHandle outHandle commandOptions = do
  let file = fileName commandOptions
  let user = userName commandOptions
  let uppercase = isUppercase commandOptions
  message <- getMessage inHandle outHandle
  reps <- getRepetitions inHandle outHandle
  writeFile file (fileContents user message reps uppercase)

-- Executable File
main :: IO ()
main = do
  options <- parseOptions
  runCLI stdin stdout options

Now our library API takes the handles as parameters. This means in our testing code, we can pass whatever handle we want to test the code. And, as you may have guessed, we’ll do this with files, instead of stdin and stdout. We’ll make one file with our expected terminal output:

What message do you want in the file?
How many times should it be repeated?

We’ll make another file with our input:

Sample Message
5

And then the file we expect to be created:

From: John Doe
Sample Message
Sample Message
Sample Message
Sample Message
Sample Message

Now we can write a test calling our library function. It will pass the expected arguments object as well as the proper file handles. Then we can compare the output of our test file and the output file.

import Lib
import System.IO
import Test.HUnit

main :: IO ()
main = do
  inputHandle <- openFile "input.txt" ReadMode
  outputHandle <- openFile "terminal_output.txt" WriteMode
  runCLI inputHandle outputHandle options
  hClose inputHandle
  hClose outputHandle
  expectedTerminal <- readFile "expected_terminal.txt"
  actualTerminal <- readFile "terminal_output.txt"
  expectedFile <- readFile "expected_output.txt"
  actualFile <- readFile "testOutput.txt"
  assertEqual "Terminal Output Should Match" expectedTerminal actualTerminal
  assertEqual "Output File Should Match" expectedFile actualFile

options :: CommandOptions
options = CommandOptions "testOutput.txt" "John Doe" False

And that’s it! We can also use this process to add tests around the error cases, like when the user enters invalid numbers.
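
Here's a sketch of what one of those error-case tests might look like. It copies the handle-based getNumber from above so it can stand alone. The runWithInput helper is hypothetical, and it assumes the directory package for locating a temp folder. It feeds in some input lines through a temporary file and hands back both the parsed number and whatever got written to the "terminal".

```haskell
import System.Directory (getTemporaryDirectory, removeFile)
import System.IO
import Text.Read (readMaybe)

-- Same shape as the handle-based getNumber in the article
getNumber :: Handle -> Handle -> IO Int
getNumber inHandle outHandle = do
  rep <- hGetLine inHandle
  case readMaybe rep of
    Nothing -> do
      hPutStrLn outHandle "Sorry, that isn't a valid number. Please enter a number."
      getNumber inHandle outHandle
    Just i -> return i

-- Hypothetical helper: run getNumber against the given input lines and
-- return the parsed number along with the captured output
runWithInput :: [String] -> IO (Int, String)
runWithInput inputLines = do
  tmpDir <- getTemporaryDirectory
  -- Prepare the fake "stdin" file
  (inPath, inWrite) <- openTempFile tmpDir "input.txt"
  hPutStr inWrite (unlines inputLines)
  hClose inWrite
  -- Prepare the fake "stdout" file
  (outPath, outHandle) <- openTempFile tmpDir "terminal_output.txt"
  inHandle <- openFile inPath ReadMode
  result <- getNumber inHandle outHandle
  hClose inHandle
  hClose outHandle
  captured <- readFile outPath
  -- Force the lazy read to finish before deleting the file
  length captured `seq` removeFile outPath
  removeFile inPath
  return (result, captured)
```

Calling runWithInput ["asdf", "3"] should give back 3 together with a single copy of the error message, which is exactly the behavior we'd want to assert on in a test suite.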

Summary

Writing a command line interface isn't always the easiest task. Getting a user’s input sometimes requires creating loops if they won’t give you the information you want. Then dealing with arguments can be a major pain. The Options.Applicative library contains many option parsing tools. It helps you deal with flags, options, and arguments. When you're ready to test your program, you'll want to abstract the file handles away. You can use stdin and stdout from your main executable. But then when you test, you can use files as your input and output handles.

Want to try writing a CLI but don't know Haskell yet? No sweat! Download our Getting Started Checklist and get going learning the language!

When you're making a full project with executables and test suites, you need to keep organized! Take our FREE Stack mini-course to learn how to organize your Haskell with Stack.

Cleaning Up Our Projects with Hpack!

About a month ago, we released our FREE Stack mini-course. If you've never used Stack before, you should totally check out that course! This article will give you a sneak peek at some of the content in the course.

But if you're already familiar with the basics of Stack and don't think you need the course, don't worry! In this article we'll be going through another cool tool to streamline your workflow!

Most any Haskell project you create will use Cabal under the hood. Cabal performs several important tasks for you. It downloads dependencies for you and locates the code you wrote within the file system. It also links all your code so GHC can compile it. In order for Cabal to do this, you need to create a .cabal file describing all these things. It needs to know for instance what libraries each section of your code depends on. You'll also have to specify where the source directories are on your file system.

The organization of a .cabal file is a little confusing at times. The syntax can be quite verbose. We can make our lives simpler though if we use the "Hpack" tool. Hpack allows you to specify your project organization in a more concise format. Once you’ve specified everything in Hpack’s format, you can generate the .cabal file with a single command.

Using Hpack

The first step to using Hpack is of course to download it. This is simple, as long as you have installed Stack on your system. Use the command:

stack install hpack

This will install Hpack system wide so you can use it in all your projects. The next step is to specify your code’s organization in a file called package.yaml. Here’s a simple example:

name: HpackExampleProject
version: 0.1.0.0
ghc-options: -Wall

dependencies:
  - base

library:
  source-dirs: src/

executables:
  HpackExampleProject-exe:
    main: Main.hs
    source-dirs: app/
    dependencies:
      HpackExampleProject

tests:
  HpackExampleProject-test:
    main: Spec.hs
    source-dirs: test/
    dependencies:
      HpackExampleProject

This example will generate a very simple cabal file. It'll look a lot like the default template you get from running stack new HpackExampleProject. There are a few basic fields, like the name, version and compiler options for our project. We can specify details about our code library, executables, and any test suites we have, just as we can in a .cabal file. Each of these components can have its own dependencies. We can also specify global dependencies.

Once we have created this file, all we need to do is run the hpack command from the directory containing this file. This will generate our .cabal file:

>> hpack
generated HpackExampleProject.cabal

What problems does Hpack solve?

One big problem hpack solves is module inference. Your .cabal file should specify all Haskell modules which are part of your library. You'll always have two groups: “exposed” modules and “other” modules. It can be quite annoying to list every one of these modules, and the list can get quite long as your library gets bigger! Worse, you'll sometimes get confusing errors when you create a new module but forget to add it to the .cabal file. With Hpack, you don't need to remember to add most new files! It looks at your file system and determines what modules are present for you. Suppose you have organized your files like so in your source directory:

src/Lib.hs
src/API.hs
src/Internal/Helper.hs
src/Internal/Types.hs

Using the normal .cabal approach, you would need to list these modules by hand. But without listing anything in the package.yaml file, you’ll get all your modules listed in the .cabal file:

exposed-modules:
    API
    Internal.Helper
    Internal.Types
    Lib

Now, you might not want all your modules exposed. You can make a simple modification to the package file:

library:
  source-dirs: src/
  exposed-modules:
    - API
    - Lib

And hpack will correct the .cabal file.

exposed-modules:
    API
    Lib
other-modules:
    Internal.Helper
    Internal.Types

From this point, Hpack will infer all new Haskell modules as “other” modules. You'll only need to list "exposed" modules in package.yaml. There's only one thing to remember! You need to run hpack each time you add new modules, or else Cabal will not know where your code is. This is still much easier than modifying the .cabal file each time. The .cabal file itself will still contain a long list of module names. But most of them won’t be present in the package.yaml file, which is your main point of interaction.

Another big benefit of using hpack is global dependencies. Notice in the example how we have a “dependencies” field above all the other sections. This means our library, executables, and test-suites will all get base as a dependency for free. Without hpack, we would need to specify base as a dependency in each individual section.

There is also plenty of other syntactic sugar available with hpack. One simple example is the github specification. You can put the following single line in your package file:

github: username/reponame

And you’ll get the following lines for free in your .cabal file.

homepage: https://github.com/username/reponame#readme
bug-reports: https://github.com/username/reponame/issues

Summary

Once you move beyond toy projects, maintenance of your package will be non-trivial. If you use hpack, you’ll have an easier time organizing your first big project. The syntax is cleaner. The organization is more intuitive. Finally, you will save yourself the stress of performing many repetitive tasks. Constant edits to the .cabal file will interrupt your flow and build process. So avoiding them should make you a lot more productive.

Now if you haven't used Stack or Cabal at all before, there was a lot to grasp here. But hopefully you're convinced that there are easy ways to organize your Haskell code! If you're intrigued at learning how, sign up for our FREE Stack mini-course! You'll learn all about the simple approaches to organizing a Haskell project.

If you've never used Haskell at all and are totally confused by all this, no need to fret! Download our Getting Started Checklist and you'll be well on your way to learning Haskell!

Playing Match Maker

In last week’s article we saw an introduction to the Functional Graph Library. This is a neat little library that allows us to build graphs in Haskell. It then makes it very easy to solve basic graph problems. For instance, we could plug our graph into a function that would give us the shortest path. In another example, we found the minimum spanning tree of our graph with a single function call.

Those examples were rather contrived though. Our “input” was already in a graph format more or less, so we didn’t have to think much to convert it. Then we solved arbitrary problems without providing any real context. In programming, graph algorithms often come up when you’re least expecting them! We’ll prove this with a sample problem.

Motivating Example

Suppose we’re building a house. We have several people who are working on the house, and they all have various tasks to do. They need certain tools to do these tasks. As long as a person gets a tool for one of the jobs they’re working on, they can make progress. Of course, we have a limited supply of tools. So suppose we have this set of tools:

Hammer
Hammer
Power Saw
Ladder
Ladder
Ladder
Caulking Gun

And now we have the following people working on this house who all have the following needs:

Jason, Hammer, Ladder, Caulking Gun
Amanda, Hammer
Kristina, Caulking Gun
Chad, Ladder
Josephine, Power Saw
Chris, Power Saw, Ladder
Dennis, Caulking Gun, Hammer

We want to find an assignment of people to tools such that the highest number of people has at least one of their tools. In this situation we can actually find an assignment that gets all seven people a tool:

Jason - Ladder
Amanda - Hammer
Kristina - Caulking Gun
Chad - Ladder
Josephine - Power Saw
Chris - Ladder
Dennis - Hammer

We’ll read our problem in from a handle like we did last time, and assume we first read the number of tools, then people. Our output will be the list of tools and then a map from each person’s name to the list of tools they can use.

module Tools where

import Control.Monad (replicateM)
import Data.List.Split (splitOn)
import System.IO (hGetLine, Handle)

readInput :: Handle -> IO ([String], [(String, [String])])
readInput handle = do
  numTools <- read <$> hGetLine handle
  numPeople <- read <$> hGetLine handle
  tools <- replicateM numTools (hGetLine handle)
  people <- replicateM numPeople (readPersonLine handle)
  return (tools, people)

readPersonLine :: Handle -> IO (String, [String])
readPersonLine handle = do
  line <- hGetLine handle
  let components = splitOn ", " line
  return (head components, tail components)

Some Naive Solutions

Now our first guess might be to try a greedy algorithm. We’ll iterate through the list of tools, find the first person in the list who can use that tool, and recurse on the rest. This might look a little like this:

solveToolsGreedy :: Handle -> IO Int
solveToolsGreedy handle = do
  -- readInput already gives us a list of pairs, so no Map conversion is needed
  (tools, personMap) <- readInput handle
  return $ findMaxMatchingGreedy tools personMap

findMaxMatchingGreedy :: [String] -> [(String, [String])] -> Int
findMaxMatchingGreedy [] _ = 0 -- No more tools to match
findMaxMatchingGreedy (tool : rest) personMap = case break (containsTool tool) personMap of
  (allPeople, []) -> findMaxMatchingGreedy rest personMap -- Can't match this tool
  (somePeople, (_ : otherPeople)) -> 1 + findMaxMatchingGreedy rest (somePeople ++ otherPeople)

containsTool :: String -> (String, [String]) -> Bool
containsTool tool pair = tool `elem` (snd pair)

Unfortunately, this could lead to some sub-optimal outcomes. On the input above, our greed hands the first hammer to Jason (who could have used a ladder) and the second to Amanda. Kristina and Dennis are then left competing for the single caulking gun, so one of them won’t be able to use any tools.

So now let’s try and fix this by making two recursive calls! We’ll find the first person we can assign the tool to (or otherwise drop the tool). Once we’ve done this, we’ll imagine two scenarios. In case 1, this person will use the tool, so we can remove the tool and the person from our lists. Then we'll recurse on the remainder, and add 1. In case 2, this person will NOT use the tool, so we’ll recurse except REMOVE the tool from that person’s list.

import Data.List (delete)

findMaxMatchingSlow :: [String] -> [(String, [String])] -> Int
findMaxMatchingSlow [] _ = 0
findMaxMatchingSlow allTools@(tool : rest) personMap =
  case break (containsTool tool) personMap of
    (allPeople, []) -> findMaxMatchingSlow rest personMap -- Can't match this tool
    (somePeople, (chosen : otherPeople)) -> max useIt loseIt
      where
        useIt = 1 + findMaxMatchingSlow rest (somePeople ++ otherPeople)
        loseIt = findMaxMatchingSlow allTools newList
        newList = somePeople ++ (modifiedChosen : otherPeople)
        modifiedChosen = dropTool tool chosen

dropTool :: String -> (String, [String]) -> (String, [String])
dropTool tool (name, validTools) = (name, delete tool validTools)

The good news is that this will get us the optimal solution! It solves our simple case quite well! The bad news is that it will take too long on more difficult cases. A naive use-it-or-lose-it algorithm like this will take exponential time (O(2^n)). This means even for modest input sizes (~100) we’ll be waiting for a loooong time. Anything much larger takes prohibitively long. Plus, there’s no way for us to memoize the solution here.
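
To see the difference between the two approaches on the house example, here's a self-contained sketch with the sample data hard-coded instead of read from a handle. The names greedy, useItOrLoseIt, sampleTools and samplePeople are mine, but the logic follows the two functions above.

```haskell
import Data.List (delete)

type Person = (String, [String])

sampleTools :: [String]
sampleTools = ["Hammer", "Hammer", "Power Saw", "Ladder", "Ladder", "Ladder", "Caulking Gun"]

samplePeople :: [Person]
samplePeople =
  [ ("Jason", ["Hammer", "Ladder", "Caulking Gun"])
  , ("Amanda", ["Hammer"])
  , ("Kristina", ["Caulking Gun"])
  , ("Chad", ["Ladder"])
  , ("Josephine", ["Power Saw"])
  , ("Chris", ["Power Saw", "Ladder"])
  , ("Dennis", ["Caulking Gun", "Hammer"])
  ]

containsTool :: String -> Person -> Bool
containsTool tool (_, tools) = tool `elem` tools

-- Give each tool to the first person who can use it
greedy :: [String] -> [Person] -> Int
greedy [] _ = 0
greedy (tool : rest) people = case break (containsTool tool) people of
  (_, []) -> greedy rest people
  (before, _ : after) -> 1 + greedy rest (before ++ after)

-- Try both assigning the tool to the first candidate and ruling that candidate out
useItOrLoseIt :: [String] -> [Person] -> Int
useItOrLoseIt [] _ = 0
useItOrLoseIt allTools@(tool : rest) people = case break (containsTool tool) people of
  (_, []) -> useItOrLoseIt rest people
  (before, (name, tools) : after) -> max useIt loseIt
    where
      useIt = 1 + useItOrLoseIt rest (before ++ after)
      loseIt = useItOrLoseIt allTools (before ++ (name, delete tool tools) : after)
```

On this data, greedy sampleTools samplePeople comes out to 6, while useItOrLoseIt sampleTools samplePeople finds all 7. With only seven tools the slow version finishes instantly. The trouble only starts as the input grows.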

Graphs to the Rescue!

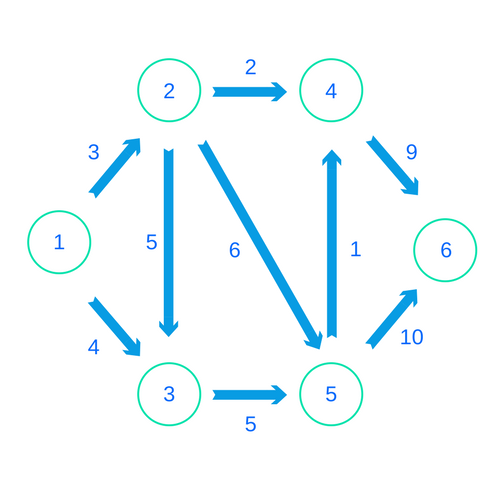

So at this point, are we condemned to choose between a fast inaccurate algorithm and a correct but slow one? In this case the answer is no! This problem is actually best solved by using a graph algorithm! This is an example of what’s called a “bipartite matching” problem. We’ll create a graph with two sets of nodes. On the left, we’ll have a node for each tool. On the right, we’ll have a node for each person. The only edges in our graph will go from nodes on the left towards nodes on the right. A “tool” node has an edge to a “person” node if that person can use the tool. Here’s a partial representation of our graph (plus or minus my design skills). We’ve only drawn in the edges related to Amanda, Kristina and Josephine so far.

Now we want to find the “maximum matching” in this graph. That is, we want the largest set of edges such that no two edges share a node. The way to solve this problem using graph algorithms is to turn it into yet ANOTHER graph problem! We’ll add a node on the far left, called the “source” node. We’ll connect it to every “tool” node. Now we’ll add a node on the far right, called the “sink” node. It will receive an edge from every “person” node. All the edges in this graph have a capacity of 1.

Again, most of the middle edges are missing here.

The size of the maximum matching in this case is equal to the “max flow” from the source node to the sink node. This is a somewhat advanced concept. But imagine there is water gushing out of the source node and that every edge is a “pipe” whose value (1) is the capacity. We want the largest amount of water that can go through to the sink at once.

Last week we saw built-in functions for shortest path and min spanning tree. FGL also has an out-of-the-box solution for max flow. So our main goal now is to take our objects and construct the above graph.
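
For some intuition about what a max flow routine does on a unit-capacity graph like this, here's a sketch of the "augmenting path" idea written against plain lists and maps. To be clear, this is not FGL's function and not the approach the rest of this article takes. It's a hand-rolled version of the classic bipartite matching technique, assuming only the containers package, and all the names are my own. Each left item tries to grab a right item. If that one is taken, we ask its current owner to move somewhere else.

```haskell
import qualified Data.Map as Map
import qualified Data.Set as Set

-- Maps a right-side index to the left-side index currently matched with it
type Matching = Map.Map Int Int

-- Search for an augmenting path from left node l. The visited set holds
-- right nodes already explored during this search.
tryLeft :: (Int -> [Int]) -> Matching -> Int -> Set.Set Int -> (Set.Set Int, Maybe Matching)
tryLeft adj matching l visited = go (adj l) visited
  where
    go [] vis = (vis, Nothing)
    go (r : rs) vis
      | r `Set.member` vis = go rs vis
      | otherwise = case Map.lookup r matching of
          -- Free right node: claim it
          Nothing -> (vis', Just (Map.insert r l matching))
          -- Taken: see whether the current owner can be rerouted
          Just owner -> case tryLeft adj matching owner vis' of
            (vis'', Just rerouted) -> (vis'', Just (Map.insert r l rerouted))
            (vis'', Nothing) -> go rs vis''
      where
        vis' = Set.insert r vis

-- Size of a maximum matching between the two lists under the predicate
maxMatchingSize :: (a -> b -> Bool) -> [a] -> [b] -> Int
maxMatchingSize predicate as bs = Map.size (foldl step Map.empty leftIndices)
  where
    leftIndices = [0 .. length as - 1]
    indexedBs = zip [0 :: Int ..] bs
    adjacency = Map.fromList
      [ (i, [j | (j, b) <- indexedBs, predicate a b]) | (i, a) <- zip leftIndices as ]
    adj i = Map.findWithDefault [] i adjacency
    step matching l = case tryLeft adj matching l Set.empty of
      (_, Just augmented) -> augmented
      (_, Nothing) -> matching

sampleTools :: [String]
sampleTools = ["Hammer", "Hammer", "Power Saw", "Ladder", "Ladder", "Ladder", "Caulking Gun"]

samplePeople :: [(String, [String])]
samplePeople =
  [ ("Jason", ["Hammer", "Ladder", "Caulking Gun"]), ("Amanda", ["Hammer"])
  , ("Kristina", ["Caulking Gun"]), ("Chad", ["Ladder"])
  , ("Josephine", ["Power Saw"]), ("Chris", ["Power Saw", "Ladder"])
  , ("Dennis", ["Caulking Gun", "Hammer"]) ]

containsTool :: String -> (String, [String]) -> Bool
containsTool tool (_, tools) = tool `elem` tools
```

Running maxMatchingSize containsTool sampleTools samplePeople gives 7, matching the assignment we found by hand. Each successful reroute corresponds to pushing one more unit of flow from source to sink.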

Preparing Our Solution

A couple weeks ago, we created a segment tree that was very specific to the problem. This time, we’ll show what it’s like to write a more generic algorithm. Throughout the rest of the article, you can imagine that a is a tool, and b is a person. We’ll write a general maxMatching function that will take a list of a’s, a list of b’s, AND a predicate function. This function will determine whether an a object and a b object should have an edge between them. We’ll use the containsTool function from above as our predicate. Then we'll call our general function.

findMaxMatchingBest :: [String] -> [(String, [String])] -> Int
findMaxMatchingBest tools personMap = findMaxMatching containsTool tools personMap

…(different module)

findMaxMatching :: (a -> b -> Bool) -> [a] -> [b] -> Int
findMaxMatching predicate as bs = ...

Building Our Graph

To build our graph, we’ll have to decide on our labels. Once again, we’ll only label our edges with integers. In fact, they’ll all have a “capacity” label of 1. But our nodes will be a little more complicated. We’ll want to associate the node with the object, and we have a heterogeneous (and polymorphic) set of items. We’ll make this NodeLabel type that could refer to any of the four types of nodes:

data NodeLabel a b =
  LeftNode a |
  RightNode b |
  SourceNode |
  SinkNode

Next we’ll start building our graph by constructing the inner part. We’ll make the two sets of nodes as well as the edges connecting them. We’ll assign the left nodes to the indices from 1 up through the size of that list. And then the right nodes will take on the indices from one above the first list's size through the sum of the list sizes.

createInnerGraph
  :: (a -> b -> Bool)
  -> [a]
  -> [b]
  -> ([LNode (NodeLabel a b)], [LNode (NodeLabel a b)], [LEdge Int])
createInnerGraph predicate as bs = ...
  where
    sz_a = length as
    sz_b = length bs
    aNodes = zip [1..sz_a] (LeftNode <$> as)
    bNodes = zip [(sz_a + 1)..(sz_a + sz_b)] (RightNode <$> bs)

Next we’ll also make tuples matching the index to the item itself without its node label wrapper. This will allow us to call the predicate on these items. We’ll then get all the edges out by using a list comprehension. We'll pull each pairing and determine whether the predicate holds. If it does, we’ll add the edge.

  where
    ...
    indexedAs = zip [1..sz_a] as
    indexedBs = zip [(sz_a + 1)..(sz_a + sz_b)] bs
    nodesAreConnected (_, aItem) (_, bItem) = predicate aItem bItem
    edges = [(fst aN, fst bN, 1) | aN <- indexedAs, bN <- indexedBs, nodesAreConnected aN bN]

Now we’ve got all our pieces, so we combine them to complete the definition:

createInnerGraph predicate as bs = (aNodes, bNodes, edges)

Now we’ll construct the “total graph”. This will include the source and sink nodes. It will include the indices of these nodes in the return value so that we can use them in our algorithm:

totalGraph :: (a -> b -> Bool) -> [a] -> [b]
  -> (Gr (NodeLabel a b) Int, Int, Int)

Now we’ll start our definition by getting all the pieces out of the inner graph as well as the size of each list. Then we’ll assign the index for the source and sink to be the numbers after these combined sizes. We’ll also make the nodes themselves and give them the proper labels.

totalGraph predicate as bs = ...
  where
    sz_a = length as
    sz_b = length bs
    (leftNodes, rightNodes, middleEdges) = createInnerGraph predicate as bs
    sourceIndex = sz_a + sz_b + 1
    sinkIndex = sz_a + sz_b + 2
    sourceNode = (sourceIndex, SourceNode)
    sinkNode = (sinkIndex, SinkNode)

Now to finish this definition, we’ll first create edges from the source out to the left nodes. Then we'll make edges from the right nodes to the sink. We’ll also use list comprehensions there. Then we’ll combine all our nodes and edges into two lists.

  where
    ...
    sourceEdges = [(sourceIndex, lIndex, 1) | lIndex <- fst <$> leftNodes]
    sinkEdges = [(rIndex, sinkIndex, 1) | rIndex <- fst <$> rightNodes]
    allNodes = sourceNode : sinkNode : (leftNodes ++ rightNodes)
    allEdges = sourceEdges ++ middleEdges ++ sinkEdges

Finally, we’ll complete the definition by making our graph. As we noted, we'll also return the source and sink indices:

totalGraph predicate as bs = (mkGraph allNodes allEdges, sourceIndex, sinkIndex)
  where
    ...

The Grand Finale

OK one last step! We can now fill in our findMaxMatching function. We’ll first get the necessary components from building the graph. Then we’ll call the maxFlow function. This works out of the box, just like sp and msTree from the last article!

import Data.Graph.Inductive.Graph (LNode, LEdge, mkGraph)
import Data.Graph.Inductive.PatriciaTree (Gr)
import Data.Graph.Inductive.Query.MaxFlow (maxFlow)

findMaxMatching :: (a -> b -> Bool) -> [a] -> [b] -> Int
findMaxMatching predicate as bs = maxFlow graph source sink
  where
    (graph, source, sink) = totalGraph predicate as bs

And we’re done! Since every edge has a capacity of 1, the max flow value equals the size of the largest matching, so this gives us the correct answer, and it runs very fast! Take a look at the code on Github if you want to experiment with it!
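To try the whole pipeline in one file, the fragments above can be assembled roughly as follows. This is a sketch, not the code from the repository: containsTool is reconstructed from the type that findMaxMatchingBest expects, and exampleTools and examplePeople are made-up sample data.

```haskell
import Data.Graph.Inductive.Graph (LEdge, LNode, mkGraph)
import Data.Graph.Inductive.PatriciaTree (Gr)
import Data.Graph.Inductive.Query.MaxFlow (maxFlow)

data NodeLabel a b = LeftNode a | RightNode b | SourceNode | SinkNode

-- Left nodes get indices 1..sz_a, right nodes get sz_a+1..sz_a+sz_b.
createInnerGraph
  :: (a -> b -> Bool) -> [a] -> [b]
  -> ([LNode (NodeLabel a b)], [LNode (NodeLabel a b)], [LEdge Int])
createInnerGraph predicate as bs = (aNodes, bNodes, edges)
  where
    sz_a = length as
    sz_b = length bs
    indexedAs = zip [1 .. sz_a] as
    indexedBs = zip [sz_a + 1 .. sz_a + sz_b] bs
    aNodes = [(i, LeftNode a) | (i, a) <- indexedAs]
    bNodes = [(j, RightNode b) | (j, b) <- indexedBs]
    -- One capacity-1 edge for every pair the predicate accepts.
    edges = [(i, j, 1) | (i, a) <- indexedAs, (j, b) <- indexedBs, predicate a b]

-- Adds the source (feeding every left node) and the sink (fed by every right node).
totalGraph :: (a -> b -> Bool) -> [a] -> [b] -> (Gr (NodeLabel a b) Int, Int, Int)
totalGraph predicate as bs = (mkGraph allNodes allEdges, sourceIndex, sinkIndex)
  where
    (leftNodes, rightNodes, middleEdges) = createInnerGraph predicate as bs
    sourceIndex = length as + length bs + 1
    sinkIndex = sourceIndex + 1
    sourceEdges = [(sourceIndex, l, 1) | (l, _) <- leftNodes]
    sinkEdges = [(r, sinkIndex, 1) | (r, _) <- rightNodes]
    allNodes = (sourceIndex, SourceNode) : (sinkIndex, SinkNode) : (leftNodes ++ rightNodes)
    allEdges = sourceEdges ++ middleEdges ++ sinkEdges

findMaxMatching :: (a -> b -> Bool) -> [a] -> [b] -> Int
findMaxMatching predicate as bs = maxFlow graph source sink
  where
    (graph, source, sink) = totalGraph predicate as bs

-- Assumed shape of the predicate: a person can use a tool if it is in their list.
containsTool :: String -> (String, [String]) -> Bool
containsTool tool (_, usableTools) = tool `elem` usableTools

-- Hypothetical sample data, not taken from the original problem.
exampleTools :: [String]
exampleTools = ["hammer", "saw", "drill"]

examplePeople :: [(String, [String])]
examplePeople = [("Ann", ["hammer", "saw"]), ("Bob", ["hammer"]), ("Cy", ["hammer"])]
```

With this data, Bob and Cy both need the hammer and nobody can use the drill, so findMaxMatching containsTool exampleTools examplePeople can pair at most two people with tools.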

Conclusion

Whew, algorithms are exhausting, aren’t they? That was a ton of code we just wrote. Let’s do a quick review. This time around we looked at an actual problem that was not an obvious graph problem. We even tried a couple different algorithmic approaches. They both had issues though. Ultimately, we found that a graph algorithm was the solution, and we were able to implement it with FGL.

If you want to use FGL (or most any awesome Haskell library), it would help a ton if you learned how to use Stack! This great tool wraps project organization and package management into one tool. Check out our FREE Stack mini-course and learn more!

If you’ve never programmed in Haskell at all, then what are you waiting for? It’s super fun! You should download our Getting Started Checklist for some tips and resources on starting out!

Stay tuned next week for more on the Monday Morning Haskell Blog!

Graphing it Out

In the last two articles we dealt with some algorithmic issues. We were only able to solve these by improving the data structures in our code. First we used Haskell's built-in array type for fast indexing. Then when we needed a segment tree, we decided to make it from scratch. But we can’t roll our own data structure for every problem we encounter. So it’s good to have some systems we can use for more of these advanced topics.

One of the most important categories of data structures we’ll need for algorithms is graphs. Graphs are quite powerful when it comes to representing complicated problems. They are very useful for expressing relationships between data points. In this article, we'll see two types of graph problems. We’ll learn all about a library called the Functional Graph Library (FGL) that is available on Hackage for us to use. We’ll then take a stab at constructing graphs using the library. Finally, we’ll see how simple it is to solve these problems using this library once we’ve made our graphs.

For a complete set of the code that we’ll use in this article, check out this Github repository. It’ll show you how you can use Stack to bring the Functional Graph Library into your code.

If you’ve never used Stack before, it’s an indispensable tool for creating programs in Haskell. You should try out our Stack mini-course and learn more about it.

Graphs 101

For those of you who aren’t familiar with graphs and graph algorithms, I’ll explain a few of the basics here. If you’re very familiar with these already, you can skip this section. A graph contains a series of objects and encodes various relationships between these objects. For each object in our set, we have a “node” in our graph. These are like data points. Then to represent every relationship, we create an “edge” between two different nodes. We often give some kind of value to this edge as a piece of information about the relationship. In this article, we’ll imagine our nodes as places on a map, with the edges representing legal routes between locations. The label of each edge is the distance.
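Before bringing in any library, the map picture can be sketched with plain tuples. The names Place, Route, routes and neighbours below are made up for illustration, and each route is treated as one-way.

```haskell
-- A place on the map, identified by a number (hypothetical naming).
type Place = Int

-- A route is (from, to, distance).
type Route = (Place, Place, Int)

-- Three sample routes between three places.
routes :: [Route]
routes = [(1, 2, 3), (1, 3, 4), (2, 3, 5)]

-- Every place reachable in one step from the given place, with its distance.
neighbours :: Place -> [Route] -> [(Place, Int)]
neighbours p rs = [(to, d) | (from, to, d) <- rs, from == p]
```

Here neighbours 1 routes lists places 2 and 3 with their distances, while place 3 has no outgoing routes at all.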

Edges can be either “directed” or “undirected”. Directed edges describe a 1-way relationship between the nodes. Undirected edges describe a 2-way relationship. Here's an example of a graph with directed edges:

Making Graphs with FGL

So whenever we’re making a graph with FGL, we’ll use a two-step process. First, we’ll create the “nodes” of our graph. Then we’ll encode the edges. Let’s suppose we’re reading an input stream. The stream will first give us the number of nodes and then edges in our graph. Then we’ll read a bunch of 3-tuples line-by-line. The first two numbers will refer to the "from" node and the "to" node. The third number will represent the distance. Here’s what this stream might look like for the graph pictured above:

6
9
1 2 3
1 3 4
2 3 5
2 4 2
2 5 6
3 5 5
4 6 9
5 4 1
5 6 10

We’ll read this input stream like so:

import Control.Monad (replicateM)
import System.IO (Handle, hGetLine)

data EdgeSpec = EdgeSpec
  { fromNode :: Int
  , toNode :: Int
  , distance :: Int
  }

readInputs :: Handle -> IO (Int, [EdgeSpec])
readInputs handle = do
  numNodes <- read <$> hGetLine handle
  numEdges <- read <$> hGetLine handle
  edges <- replicateM numEdges (readEdge handle)
  return (numNodes, edges)

readEdge :: Handle -> IO EdgeSpec
readEdge handle = do
  input <- hGetLine handle
  let [f_s, t_s, d_s] = words input
  return $ EdgeSpec (read f_s) (read t_s) (read d_s)

Our goal will be to encode this graph in the format of FGL. In this library, every node has an integer identifier. Nodes can either be “labeled” or “unlabeled”. This label, if it exists, is separate from the integer identifier. The function we’ll use requires our nodes to have labels, but we won’t need this extra information. So we’ll use a newtype to wrap the same integer identifier.
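To make the labeled and unlabeled distinction concrete, here is a small sketch using two constructors from Data.Graph.Inductive.Graph: mkGraph, which takes labeled nodes and edges, and mkUGraph, which takes bare identifiers and fills in () for every label. The graphs and the names labeled and unlabeled are invented for illustration.

```haskell
import Data.Graph.Inductive.Graph (labEdges, labNodes, mkGraph, mkUGraph)
import Data.Graph.Inductive.PatriciaTree (Gr)

-- Labeled: each node is (identifier, label), each edge is (from, to, label).
labeled :: Gr String Int
labeled = mkGraph [(1, "home"), (2, "office")] [(1, 2, 7)]

-- Unlabeled: only identifiers are given; every label becomes ().
unlabeled :: Gr () ()
unlabeled = mkUGraph [1, 2] [(1, 2)]
```

Calling labNodes or labEdges on either graph returns the tuples back, which is a handy way to check what was built.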

Once we know the number of nodes, it’s actually quite easy to create them all. We'll make labels from every number from 1 up through the length. Then we represent each node by the tuple of its index and label. Let’s start a function for creating our graph:

import Data.Graph.Inductive.Graph (mkGraph)
import Data.Graph.Inductive.PatriciaTree (Gr)

…

newtype NodeLabel = NodeLabel Int

type Distance = Int

genGraph :: (Int, [EdgeSpec]) -> Gr NodeLabel Distance
genGraph (numNodes, edgeSpecs) = mkGraph nodes edges
  where
    nodes = (\i -> (i, NodeLabel i))
      <$> [1..numNodes]
    edges = ...

The graph we're making uses a "Patricia Tree" encoding under the hood. We won't go into details about that. We'll just call a simple mkGraph function exposed by the library. We'll make our return value the graph type Gr parameterized by our node label type and our edge label type. As we can see, we’ll use a type synonym Distance for integers to label our edges.

For now let’s get to the business of creating our edges. The format we specified with EdgeSpec means we don’t have to do much work. Just as the labeled node type is a synonym for a tuple, the labeled edge is a 3-tuple. It contains the indices of the “from” node, the “to” node, and then the distance label. In this case we’ll use directed edges. We build this tuple for every edge spec, and then we’re done!

genGraph :: (Int, [EdgeSpec]) -> Gr NodeLabel Distance
genGraph (numNodes, edgeSpecs) = mkGraph nodes edges
  where
    nodes = (\i -> (i, NodeLabel i))
      <$> [1..numNodes]
    edges = (\es -> (fromNode es, toNode es, distance es))
      <$> edgeSpecs

Using Graph Algorithms

Now suppose we want to solve a particular graph problem. First we’ll tackle shortest path. If we remember from above, the shortest path from node 1 to node 6 on our graph actually just goes along the top, from 1 to 2 to 4 to 6.

How can we solve this from Haskell? First we’ll use our functions from above to read in the graph. Then we’ll imagine reading in two more numbers for the start and end nodes.

solveSP :: Handle -> IO ()
solveSP handle = do
  inputs <- readInputs handle
  start <- read <$> hGetLine handle
  end <- read <$> hGetLine handle
  let gr = genGraph inputs

Now with FGL we can simply make a couple library calls and we’ll get our results! We’ll use the Query.SP module, which exposes functions to find the shortest path and its length:

import Data.Graph.Inductive.Query.SP (sp, spLength)

solveSP :: Handle -> IO ()
solveSP handle = do
  inputs <- readInputs handle
  start <- read <$> hGetLine handle
  end <- read <$> hGetLine handle
  let gr = genGraph inputs
  print $ sp start end gr
  print $ spLength start end gr

We’ll get our output, which contains a representation of the path as well as the distance. Imagine “input.txt” contains our sample input above, except with two more lines for the start and end nodes “1” and “6”:

>> find-shortest-path < input.txt
[1,2,4,6]
14

We could change our file to instead go from 3 to 6, and then we’d get:

>> find-shortest-path < input2.txt
[3,5,4,6]
15

Cool!
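The same queries can be tried without an input file by building the sample graph in memory. This sketch assumes a recent fgl release, in which sp and spLength return Maybe values (Nothing when no path exists), so the printed form differs slightly from the bare output shown above. The names sampleGraph and shortest are made up for this example.

```haskell
import Data.Graph.Inductive.Graph (mkGraph)
import Data.Graph.Inductive.PatriciaTree (Gr)
import Data.Graph.Inductive.Query.SP (sp, spLength)

-- The nine directed edges from the sample input; node labels are not needed here.
sampleGraph :: Gr () Int
sampleGraph = mkGraph
  [(i, ()) | i <- [1 .. 6]]
  [ (1, 2, 3), (1, 3, 4), (2, 3, 5), (2, 4, 2), (2, 5, 6)
  , (3, 5, 5), (4, 6, 9), (5, 4, 1), (5, 6, 10) ]

-- Shortest path and its length between two nodes (assumes the Maybe-returning API).
shortest :: Int -> Int -> (Maybe [Int], Maybe Int)
shortest start end = (sp start end sampleGraph, spLength start end sampleGraph)
```

For nodes 1 and 6 this gives the path 1, 2, 4, 6 with length 14. From 3 to 6 the length is 15, but there are two routes of that length (3, 5, 4, 6 and 3, 5, 6), so which path comes back depends on the library's tie-breaking.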

Minimum Spanning Tree

Now let’s imagine a different problem. Suppose our nodes are internet hubs. We only want to make sure they’re all connected to each other somehow. We’re going to pick a subset of the edges that will create a “spanning tree”, connecting all our nodes. Of course, we want to do this in the cheapest way, by using the smallest total “distance” from the edges. This will be our “Minimum Spanning Tree”. First, let’s remove the directions on all the arrows. We can visualize this solution by looking at this picture, and we’ll see that we can connect our nodes at a total cost of 19.
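The total of 19 can be double-checked by hand without FGL. The sketch below is a deliberately naive version of Kruskal's algorithm, which is not the method FGL uses: sort the edges by distance and keep each edge that joins two previously separate groups of nodes. The names sampleEdges and kruskal are invented for this check.

```haskell
import Data.List (sortOn)

-- (node, node, distance); direction is ignored for the spanning tree.
type Edge = (Int, Int, Int)

sampleEdges :: [Edge]
sampleEdges =
  [ (1, 2, 3), (1, 3, 4), (2, 3, 5), (2, 4, 2), (2, 5, 6)
  , (3, 5, 5), (4, 6, 9), (5, 4, 1), (5, 6, 10) ]

-- Naive Kruskal over nodes 1..n: each node starts in its own component,
-- and an accepted edge merges the two components it touches.
kruskal :: Int -> [Edge] -> [Edge]
kruskal n es = go (zip [1 .. n] [1 .. n]) (sortOn weight es)
  where
    weight (_, _, w) = w
    go _ [] = []
    go comps (e@(u, v, _) : rest)
      | cu == cv = go comps rest                   -- would form a cycle, skip
      | otherwise = e : go (relabel <$> comps) rest
      where
        componentOf x = maybe x id (lookup x comps)
        cu = componentOf u
        cv = componentOf v
        relabel (node, c) = (node, if c == cv then cu else c)
```

On the sample edges this keeps the edges of distance 1, 2, 3, 4 and 9, which add up to 19.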

The great news is that it’s not much more work to code this! First, we’ll adjust our graph construction a bit. To have an “undirected” graph in this scenario, we can make our arrows bi-directional like so:

genUndirectedGraph :: (Int, [EdgeSpec]) -> Gr NodeLabel Distance

genUndirectedGraph (numNodes, edgeSpecs) = mkGraph nodes edges

where

nodes = (\i -> (i, NodeLabel i))