Quick and Simple Benchmarking

As a programmer, you have to constantly be asking, "How good is my code?" There are a lot of different ways code can be "good" or "bad", but one of the key indicators in many cases is the time performance of your code. How long does it take your code to run on particular inputs?

Haskell isn't generally regarded as one of the fastest languages out there; it's not designed specifically with performance in mind. Languages like C, C++, and, at a higher level, Rust, are specifically geared for writing code that runs faster. But this doesn't mean performance is irrelevant in Haskell. As long as you're writing a real world application that people are going to use, you need to be concerned with the user experience, and no user wants to be waiting around for several seconds on simple operations because you didn't bother to optimize your code.

So we want ways to measure the performance of our code, a process also known as benchmarking. The Criterion Library is one of the main tools Haskell offers to help you do this. I've written about this library before in my series on Testing in Haskell.

But the library is a bit difficult to jump into if you're just a beginner or if you're doing something lightweight. It can also take a lot longer to run than you were expecting, since it runs your code many times to get a statistically significant average. So in this article we're going to go over a more lightweight way to benchmark your code. It turns out though that this is a little trickier in Haskell than in other languages, so let's learn some of these nuances.

Timing in Python

Timing a function in Python is rather straightforward. Here's some code we can use to benchmark an operation op on a particular input_arg:

# time_py.py

import time

def time_op(op, input_arg):
    t1 = time.time()
    result = op(input_arg)
    t2 = time.time()
    return t2 - t1

We get the initial time t1, we perform the operation, and then we get the current time again, and we return the difference.

As a very basic example, we can compare running the sorted function on a group of lists of escalating lengths:

xs = [10, 100, 1000, 10000, 100000, 1000000, 10000000, 100000000]

for x in xs:
    # Build a list of length x (mirroring [1..x] in the Haskell version below)
    a = time_op(sorted, list(range(1, x + 1)))
    print(a)

And running this command, we see that the amount of time increases with the number of elements in the list:

>> python3 time_py.py
2.6226043701171875e-06
1.1920928955078125e-06
5.245208740234375e-06
5.0067901611328125e-05
0.0007715225219726562
0.008384466171264648
0.08414745330810547
0.8780522346496582

Small list sizes don't take very long, and increasing the size of the list 10x increases the time by 10-20x, consistent with the n log n nature of sorting.

Notably, these values don't feel like they reflect the total time in our program. This program takes 5-10 seconds to run on my machine, quite a bit more than the cumulative time shown here. But the values are at least directionally correct, indicating a longer time for larger operations.

First Haskell Attempt

Let's try repeating this code in Haskell. We'll need to work in the IO monad, since the equivalent to time.time() is a call to getCurrentTime. Here's what our code might look like:

import Control.Monad (forM_)
import qualified Data.List as L
import Data.Time.Clock

timeOp :: (a -> b) -> a -> IO NominalDiffTime
timeOp op input = do
  t1 <- getCurrentTime
  let result = op input
  t2 <- getCurrentTime
  return $ diffUTCTime t2 t1

main :: IO ()
main = forM_ sizes $ \x -> do
  let input = [1..x]
  diff <- timeOp L.sort input
  print diff

sizes :: [Int]
sizes =
  [ 10, 100, 1000, 10000
  , 100000, 1000000
  , 10000000, 100000000
  ]

When we run this program, we get some unhelpful results though…

0.000000576s
0.000000767s
0.000000684s
0.000000658s
0.000000751s
0.000000717s
0.00000075s
0.000000678s

All these times appear the same! What's happening?

Laziness at Work

What's happening, of course, is that Haskell's laziness is kicking in. Our program never demonstrates a need to use the values in our sorted lists. So it never actually evaluates these lists and performs the sorting!

So let's change things up a bit by returning our result along with the time, and printing the last value of the result list which will force the evaluation of the whole sorted list.

timeOp :: (a -> b) -> a -> IO (b, NominalDiffTime)
timeOp op input = do
  t1 <- getCurrentTime
  let result = op input
  t2 <- getCurrentTime
  return $ (result, diffUTCTime t2 t1)

main :: IO ()
main = forM_ sizes $ \x -> do
  let input = [1..x]
  (result, diff) <- timeOp L.sort input
  print (diff, last result)

Our result now looks like this:

(0.000000672s,10)
(0.00000061s,100)
(0.000000363s,1000)
(0.00000042s,10000)
(0.000000661s,100000)
(0.000001089s,1000000)
(0.000001185s,10000000)
(0.000001256s,100000000)

Unfortunately, this is still not what we're looking for! Though there's a bit of increase in time, it's nowhere near the 10-20x increase we would like to see.

Haskell's laziness is still biting us. Our program is evaluating the list, but it's not evaluating the list when we would like it to. We want the whole evaluation to occur between our timing calls, but this doesn't happen. The complete list is only evaluated at the end of our loop, when we're about to print it out.
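To see this deferral in isolation, here is a minimal sketch using trace from Debug.Trace (part of base). The name expensive and the message text are hypothetical, chosen only for illustration. The trace message is emitted at the moment the value is first forced, so it shows where the work really happens.

```haskell
import Debug.Trace (trace)

-- Hypothetical stand-in for an expensive pure computation.
-- 'trace' emits its message (on stderr) when the value is first forced.
expensive :: Int -> Int
expensive n = trace "-- evaluating now --" (sum [1 .. n])

main :: IO ()
main = do
  let result = expensive 1000000 -- only binds a thunk; nothing runs yet
  putStrLn "after the let binding"
  print result -- the sum is actually computed here
```

Run with runghc, the trace message should typically show up after the "after the let binding" line, which is the same reason the timing calls above finish before any sorting takes place.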

Printing Solutions

This can clue us in to some potential solutions though. Since printing is one of the sure-fire ways to ensure an expression is fully evaluated, we can add a Show constraint and print the value out during the evaluation function. This removes the need to return this result from our function:

timeOp :: (Show b) => (a -> b) -> a -> IO NominalDiffTime
timeOp op input = do
  t1 <- getCurrentTime
  let result = op input
  print result
  t2 <- getCurrentTime
  return $ diffUTCTime t2 t1

main :: IO ()
main = forM_ sizes $ \x -> do
  let input = [1..x]
  diff <- timeOp L.sort input
  print diff

While technically this works, if we have non-trivial result values to print (like a ten-million item list), then all the important data gets lost in this spam. Plus, our timing values now include the time it takes to print, which can end up being much larger than the operation itself!

We can get around this spam by instead printing the values to the /dev/null file, which simply discards all this information.

-- Needs these names from System.IO
import System.IO (IOMode (..), hPutStrLn, withFile)

timeOp :: (Show b) => (a -> b) -> a -> IO NominalDiffTime
timeOp op input = do
  t1 <- getCurrentTime
  let result = op input
  withFile "/dev/null" WriteMode (\h -> hPutStrLn h (show result))
  t2 <- getCurrentTime
  return $ diffUTCTime t2 t1

With a sufficiently large amount of print information though, it is still possible that you can crash your program by printing to /dev/null! I had to remove the one hundred million list size, but then I got the following output:

0.000048873s
0.000017368s
0.000058216s
0.00057228s
0.006825458s
0.09262504s
2.521403946s

Now we're getting values that seem accurate! But let's keep trying to improve how this function works!

Since printing large values can still crash our program, perhaps the more sensible choice is to provide a custom printing function instead of relying on the native Show definition. For our example, we can print the "last" value of the list, like we used before. This fully evaluates the result, without printing a large amount of information.

timeOp :: (a -> b) -> a -> (b -> String) -> IO NominalDiffTime
timeOp op input toStr = do
  t1 <- getCurrentTime
  let result = op input
  withFile "/dev/null" WriteMode (\h -> hPutStrLn h (toStr result))
  t2 <- getCurrentTime
  return $ diffUTCTime t2 t1

main :: IO ()
main = forM_ sizes $ \x -> do
  let input = [1..x]
  diff <- timeOp L.sort input (show . last)
  print diff

This works, even running the largest size!

0.000153407s
0.000015817s
0.000028232s
0.000279035s
0.002366471s
0.098812637s
1.468213335s
17.183272162s

Notice though that the 10-million size has changed from 2.52 seconds to 1.47 seconds. Percentage-wise, that's actually a large difference! There can be a fair amount of variation from run to run. This is why Criterion does many runs. However, our approach is still useful in letting us estimate orders of magnitude on our timing, which is often all you really need in assessing a solution.
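If the run-to-run variation matters, one lightweight option is to repeat the measurement a handful of times and look at the fastest run and the mean. The sketch below is only an illustration of that idea and is not how Criterion works. The names timeRepeated and sampleTiming are hypothetical, and it uses evaluate from Control.Exception (in base) to force the last element of the sorted list, the same trick as (show . last) above.

```haskell
import Control.Exception (evaluate)
import Control.Monad (replicateM)
import qualified Data.List as L
import Data.Time.Clock (NominalDiffTime, diffUTCTime, getCurrentTime)

-- Hypothetical helper: run a timing action n times (n >= 1) and
-- return the fastest run together with the mean of all runs.
timeRepeated :: Int -> IO NominalDiffTime -> IO (NominalDiffTime, NominalDiffTime)
timeRepeated n action = do
  samples <- replicateM n action
  let mean = sum samples / fromIntegral (length samples)
  return (minimum samples, mean)

-- Hypothetical timing action: sort a descending list and force the
-- result by evaluating its last element between the two clock reads.
sampleTiming :: Int -> IO NominalDiffTime
sampleTiming size = do
  t1 <- getCurrentTime
  _ <- evaluate (last (L.sort [size, size - 1 .. 1]))
  t2 <- getCurrentTime
  return (diffUTCTime t2 t1)

main :: IO ()
main = do
  (fastest, mean) <- timeRepeated 5 (sampleTiming 100000)
  putStrLn ("fastest: " ++ show fastest ++ ", mean: " ++ show mean)
```

Five repetitions is an arbitrary choice here. It will not give statistically rigorous numbers, but it makes a single outlier run easier to spot.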

Strict IO Library

Now, Haskell does have tools besides printing to evaluate expressions and otherwise introduce strictness into our code. One of these is the seq function. This takes two arguments and simply returns the second, but still evaluates the first (to weak head normal form, meaning up to its outermost constructor).

-- Returns the second argument, but strictly evaluates the first
seq :: a -> b -> b

This is actually used under the hood of the foldl' function. This does a strict left-fold, avoiding the memory leak issues in the regular version of foldl:

foldl' f z [] = z
foldl' f z (x:xs) =
  let z' = z `f` x
  -- Use 'seq' to evaluate z' early!
  in seq z' $ foldl' f z' xs

You can also make particular fields on a data type strict, so that they are evaluated (again to weak head normal form) as soon as an item of that type is constructed. You indicate this with a bang (!) in front of the type in the data definition.

-- The fields will be strictly evaluated
data MyStrictType = MyStrictType !Int !String

What I found most useful for our particular use case though is an older library called Strict IO. This provides a number of utilities for performing IO actions strictly instead of lazily. It introduces its own monad SIO to do this. We'll use 3 different functions from there:

-- Wrap an IO action taking no arguments and make it strict
wrap0 :: IO a -> SIO a

-- Wrap a pure value, evaluating it strictly
return' :: a -> SIO a

-- Run a strict IO action within the normal IO monad
run :: SIO a -> IO a

With these three pieces, our way forward is simple. We use wrap0 on our calls to getCurrentTime to ensure they happen immediately. Then we use return' to wrap the evaluation of our operation. And finally, we run our timeOp function from the IO monad.

import Control.DeepSeq (NFData)
import qualified System.IO.Strict as S
import qualified System.IO.Strict.Internals as S

timeOp :: (NFData b) => (a -> b) -> a -> S.SIO NominalDiffTime
timeOp op input = do
  t1 <- S.wrap0 getCurrentTime
  -- The result itself is discarded; we only need it to be evaluated here
  result <- S.return' (op input)
  t2 <- S.wrap0 getCurrentTime
  return $ diffUTCTime t2 t1

main :: IO ()
main = forM_ sizes $ \x -> do
  let input = [1..x]
  diff <- S.run (timeOp L.sort input)
  print diff

And this gives us the timing values we want, with no need for relying on print as an evaluation crutch.

0.00000917s
0.000011727s
0.000049986s
0.000489229s
0.005072207s
0.102675824s
1.722589101s
20.870199397s

This means we don't need any extra "show"-ing functions as arguments to our timing operation. All we need is an NFData constraint on our evaluated type. This exists for most basic types as long as we import Control.DeepSeq from the deepseq package.

As a weakness though, the strict-io package is not part of recent Stack resolver sets, so you'll need it as an extra-dep in stack.yaml. I have not found a more recent or "official" alternative though. Also, the wrap0 function comes from a module labeled as Internals. The library was mainly geared towards basic IO actions like file reading, and not getting the current time, so wrap0 wasn't intended to be part of the public API.
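One possibility that avoids the extra package, offered here as a sketch rather than something tested in this article: Control.Exception (in base) exports evaluate, and the deepseq package exports force. Combining them asks for the full result to be computed at a specific point in the IO sequence, between the two clock reads. The name timeOpForced is hypothetical.

```haskell
import Control.DeepSeq (NFData, force)
import Control.Exception (evaluate)
import Control.Monad (forM_)
import qualified Data.List as L
import Data.Time.Clock (NominalDiffTime, diffUTCTime, getCurrentTime)

-- Hypothetical variant of timeOp. 'force' requests full (normal form)
-- evaluation of the result, and 'evaluate' makes that evaluation happen
-- at this step of the IO sequence rather than later.
timeOpForced :: (NFData b) => (a -> b) -> a -> IO NominalDiffTime
timeOpForced op input = do
  t1 <- getCurrentTime
  _ <- evaluate (force (op input))
  t2 <- getCurrentTime
  return (diffUTCTime t2 t1)

main :: IO ()
main = forM_ [10, 1000, 100000 :: Int] $ \x -> do
  diff <- timeOpForced L.sort [1 .. x]
  print diff
```

As with the versions above, the input list [1 .. x] is itself lazy, so the cost of generating it is included in the measurement unless the input is forced before the first clock read.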

Conclusion

Now that we have a lighter-weight means of benchmarking our code (as compared to Criterion), we can explore ways that this helps us learn about problem solving! Come back next week and we'll see how!

And make sure to subscribe to our mailing list for more Haskell updates! If you're interested in problem solving content, there will be quite a lot of that coming up!

Big News Soon!

If you’ve ever been a follower of this blog, you may have noticed that I have not been posting much this year - basically nothing in the last 5 months or so. This year I experienced the birth of my first child, and subsequently moved 2500 miles with my family. So unfortunately I haven’t been able to prioritize my weekly blog content.

But I haven’t been completely staying away from Haskell! In fact, one of the other items I decided to prioritize is a new, upcoming course - the largest and most detailed I’ve done since Practical Haskell!

It’s not quite ready for publishing yet, but will be coming soon! So keep your eyes on this space!


This is How to Build Haskell with GNU Make (and why it's worth trying)

In a previous article I showed the GHC commands you need to compile a basic Haskell executable without explicitly using the source files from its dependencies. But when you're writing your own Haskell code, 99% of the time you want to be using a Haskell build system like Stack or Cabal for your compilation needs instead of writing your own GHC commands. (And you can learn how to use Stack in my new free course, Setup.hs).

But part of my motivation for solving that problem was that I wanted to try an interesting experiment:

How can I build my Haskell code using GNU Make?

GNU Make is a generic build system that allows you to specify components of your project, map out their dependencies, and dictate how your build artifacts are generated and run.
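At its core, Make decides whether to rebuild a file target by comparing modification times: a target is out of date if it is missing or older than any of its prerequisites. Since this article is about Haskell, here is a minimal sketch of that check written in Haskell with the directory package. The name needsRebuild and the example paths are hypothetical, and real Make does considerably more (for example, recursing through prerequisite rules).

```haskell
import System.Directory (doesFileExist, getModificationTime)

-- Hypothetical helper: True when the target is missing, or when any
-- prerequisite has a newer modification time than the target.
-- Note: getModificationTime throws if a prerequisite does not exist.
needsRebuild :: FilePath -> [FilePath] -> IO Bool
needsRebuild target prereqs = do
  exists <- doesFileExist target
  if not exists
    then return True
    else do
      targetTime <- getModificationTime target
      prereqTimes <- mapM getModificationTime prereqs
      return (any (> targetTime) prereqTimes)

main :: IO ()
main = do
  -- Example paths only; adjust to a real target and its sources.
  stale <- needsRebuild "bin/hello" ["app/Main.hs"]
  putStrLn (if stale then "rebuild needed" else "up to date")
```

This check only applies to targets that are actual files. A rule whose name is not a file that gets created never looks up to date, so its commands run on every invocation.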

I wanted to structure my source code the same way I would in a Cabal-style application, but rely on GNU Make to chain together the necessary GHC compilation commands. I did this to help gain a deeper understanding of how a Haskell build system could work under the hood.

In a Haskell project, we map out our project structure in the .cabal file. When we use GNU Make, our project is mapped out in the makefile. Here's the Makefile we'll ultimately be constructing:

GHC = ~/.ghcup/ghc/9.2.5/bin/ghc
BIN = ./bin
EXE = ${BIN}/hello

LIB_DIR = ${BIN}/lib
SRCS = $(wildcard src/*.hs)
LIB_OBJS = $(wildcard ${LIB_DIR}/*.o)

library: ${SRCS}
	@mkdir -p ${LIB_DIR}
	@${GHC} ${SRCS} -hidir ${LIB_DIR} -odir ${LIB_DIR}

generate_run: app/Main.hs library
	@mkdir -p ${BIN}
	@cp ${LIB_DIR}/*.hi ${BIN}
	@${GHC} -i${BIN} -c app/Main.hs -hidir ${BIN} -odir ${BIN}
	@${GHC} ${BIN}/Main.o ${LIB_OBJS} -o ${EXE}

run: generate_run
	@${EXE}

TEST_DIR = ${BIN}/test
TEST_EXE = ${TEST_DIR}/run_test

generate_test: test/Spec.hs library
	@mkdir -p ${TEST_DIR}
	@cp ${LIB_DIR}/*.hi ${TEST_DIR}
	@${GHC} -i${TEST_DIR} -c test/Spec.hs -hidir ${TEST_DIR} -odir ${TEST_DIR}
	@${GHC} ${TEST_DIR}/Main.o ${LIB_OBJS} -o ${TEST_EXE}

test: generate_test
	@${TEST_EXE}

clean:
	rm -rf ./bin

Over the course of this article, we'll build up this solution piece-by-piece. But first, let's understand exactly what Haskell code we're trying to build.

Our Source Code

We want to lay out our files like this, separating our source code (/src directory), from our executable code (/app) and our testing code (/test):

.
├── app
│   └── Main.hs
├── makefile
├── src
│   ├── MyFunction.hs
│   └── MyStrings.hs
└── test
    └── Spec.hs

Here's the source code for our three primary files:

-- src/MyStrings.hs
module MyStrings where

greeting :: String
greeting = "Hello"

-- src/MyFunction.hs
module MyFunction where

modifyString :: String -> String
modifyString x = base <> " " <> base
  where
    base = tail x <> [head x]

-- app/Main.hs
module Main where

import MyStrings (greeting)
import MyFunction (modifyString)

main :: IO ()
main = putStrLn (modifyString greeting)

And here's what our simple "Spec" test looks like. It doesn't use a testing library, it just prints different messages depending on whether or not we get the expected output from modifyString.

-- test/Spec.hs
module Main where

import MyFunction (modifyString)

main :: IO ()
main = do
  test "abcd" "bcda bcda"
  test "Hello" "elloH elloH"

test :: String -> String -> IO ()
test input expected = do
  let actual = modifyString input
  putStr $ "Testing case: " <> input <> ": "
  if expected /= actual
    then putStrLn $ "Incorrect result! Expected: " <> expected <> " Actual: " <> actual
    else putStrLn "Correct!"

The files are laid out the way we would expect for a basic Haskell application. We have our "library" code in the src directory. We have a single "executable" in the app directory. And we have a single "test suite" in the test directory. Instead of having a Project.cabal file at the root of our project, we'll have our makefile. (At the end, we'll actually compare our Makefile with an equivalent .cabal file).

But what does the Makefile look like? Well it would be overwhelming to construct it all at once. Let's begin slowly by treating our executable as a single file application.

Running a Single File Application

So for now, let's adjust Main.hs so it's an independent file without any dependencies on our library modules:

-- app/Main.hs
module Main where

main :: IO ()
main = putStrLn "Hello"

The simplest way to run this file is runghc. So let's create our first makefile rule that will do this. A rule has a name, a set of prerequisites, and then a set of commands to run. We'll call our rule run, and have it use runghc on app/Main.hs. We'll also include app/Main.hs as a prerequisite, since the rule's result depends on the contents of that file.

run: app/Main.hs
	runghc app/Main.hs

And now we can run this rule using make run, and it will work!

$ make run
runghc app/Main.hs
Hello

Notice that it prints the command we're running. We can change this by using the @ symbol in front of the command in our Makefile. We'll do this with almost all our commands:

run: app/Main.hs
	@runghc app/Main.hs

And it now runs our application without printing the command.

Using runghc is convenient, but if we want to use dependencies from different directories, we'll eventually need to use multiple stages of compilation. So we'll want to create two distinct rules. One that generates the executable using ghc, and another that actually runs the generated executable.

So let's create a generate_run rule that will produce the build artifacts, and then run will use them.

generate_run: app/Main.hs
	@ghc app/Main.hs

run: generate_run
	@./app/Main

Notice that run can depend on generate_run as a prerequisite, instead of the source file now. This also generates three build artifacts directly in our app directory: the interface file Main.hi, the object file Main.o, and the executable Main.

It's bad practice to mix build artifacts with source files, so let's use GHC's arguments (-hidir, -odir and -o) to store these artifacts in their own directory called bin.

generate_run: app/Main.hs
	@mkdir -p ./bin
	@ghc app/Main.hs -hidir ./bin -odir ./bin -o ./bin/hello

run: generate_run
	@./bin/hello

We can then add a third rule to "clean" our project. This would remove all binary files so that we can do a fresh recompilation if we want.

clean:
	rm -rf ./bin

For one final flourish in this section, we can use some variables. We can make one for the GHC compiler, referencing its absolute path instead of a symlink. This would make it easy to switch out the version if we wanted. We'll also add a variable for our bin directory and the hello executable, since these are used multiple times.

# Could easily switch versions if desired
# e.g. GHC = ~/.ghcup/ghc/9.4.4/bin/ghc
GHC = ~/.ghcup/ghc/9.2.5/bin/ghc
BIN = ./bin
EXE = ${BIN}/hello

generate_run: app/Main.hs
	@mkdir -p ${BIN}
	@${GHC} app/Main.hs -hidir ${BIN} -odir ${BIN} -o ${EXE}

run: generate_run
	@${EXE}

clean:
	rm -rf ./bin

And all this still works as expected!

$ make generate_run
[1 of 1] Compiling Main (app/Main.hs, bin/Main.o)
Linking ./bin/hello
$ make run
Hello
$ make clean
rm -rf ./bin

So we have some basic rules for our executable. But remember our goal is to depend on a library. So let's add a new rule to generate the library objects.

Generating a Library

For this step, we would like to compile src/MyStrings.hs and src/MyFunction.hs. Each of these will generate an interface file (.hi) and an object file (.o). We want to place these artifacts in a specific library directory within our bin folder.

We'll do this by means of a new rule, library, which will use our two source files as its prerequisites. It will start by creating the library artifacts directory:

LIB_DIR = ${BIN}/lib

library: src/MyStrings.hs src/MyFunction.hs
	@mkdir -p ${LIB_DIR}
	...

Now the only thing left to do is run GHC on both of our source files, using LIB_DIR as the destination for the artifacts.

LIB_DIR = ${BIN}/lib

library: src/MyStrings.hs src/MyFunction.hs
	@mkdir -p ${LIB_DIR}
	@ghc src/MyStrings.hs src/MyFunction.hs -hidir ${LIB_DIR} -odir ${LIB_DIR}

Now when we run the target, we'll see that it produces the desired files:

$ make library
$ ls ./bin/lib
MyFunction.hi MyFunction.o MyStrings.hi MyStrings.o

Right now though, if we added a new source file, we'd have to modify the rule in two places. We can fix this by adding a variable that uses wildcard to match all our source files in the directory (src/*.hs).

LIB_DIR = ${BIN}/lib
SRCS = $(wildcard src/*.hs)

library: ${SRCS}
	@mkdir -p ${LIB_DIR}
	@${GHC} ${SRCS} -hidir ${LIB_DIR} -odir ${LIB_DIR}

While we're learning about wildcard, let's make another variable to capture all the produced object files. We'll use this in the next section.

LIB_OBJS = $(wildcard ${LIB_DIR}/*.o)

So great! We're producing our library artifacts. How do we use them?

Linking the Library

In this section, we'll link our library code with our executable. We'll begin by assuming our Main file has gone back to its original form with imports, instead of the simplified form:

-- app/Main.hs
module Main where

import MyStrings (greeting)
import MyFunction (modifyString)

main :: IO ()
main = putStrLn (modifyString greeting)

When we try to generate_run, compilation fails because it cannot find the modules we're trying to import:

$ make generate_run
...
Could not find module 'MyStrings'
...
Could not find module 'MyFunction'

As we went over in the previous article, the general approach to compiling the Main module with its dependencies has two steps:

1. Compile with the -c option (to stop before the linking stage) using -i to point to a directory containing the interface files.

2. Compile the generated Main.o object file together with the library .o files to produce the executable.

So we'll be modifying our generate_run rule with some extra steps. First of course, it must now depend on the library rule. Then our first new command will be to copy the .hi files from the lib directory into the top-level bin directory.

generate_run: app/Main.hs library
	@mkdir -p ./bin
	@cp ${LIB_DIR}/*.hi ${BIN}
	...

We could have avoided this step by generating the library artifacts in bin directly. I wanted to have a separate location for all of them though. And while there may be some way to direct the next command to find the interface files in the lib directory, none of the obvious ways worked for me.

Regardless, our next step will be to modify the ghc call in this rule to use the -c and -i arguments. The rest stays the same:

generate_run: app/Main.hs library
	@mkdir -p ./bin
	@cp ${LIB_DIR}/*.hi ${BIN}
	@${GHC} -i${BIN} -c app/Main.hs -hidir ${BIN} -odir ${BIN}
	...

Finally, we invoke our final ghc call, linking the .o files together. At the command line, this would look like:

$ ghc ./bin/Main.o ./bin/lib/MyStrings.o ./bin/lib/MyFunction.o -o ./bin/hello

Recalling our LIB_OBJS variable from up above, we can fill in the rule in our Makefile like so:

LIB_OBJS = $(wildcard ${LIB_DIR}/*.o)

generate_run: app/Main.hs library
	@mkdir -p ./bin
	@cp ${LIB_DIR}/*.hi ${BIN}
	@${GHC} -i${BIN} -c app/Main.hs -hidir ${BIN} -odir ${BIN}
	@${GHC} ${BIN}/Main.o ${LIB_OBJS} -o ${EXE}

And now our program will work as expected! We can clean it and jump straight to the make run rule, since this will run its prerequisites make library and make generate_run automatically.

$ make clean
rm -rf ./bin
$ make run
[1 of 2] Compiling MyFunction (src/MyFunction.hs, bin/lib/MyFunction.o)
[2 of 2] Compiling MyStrings (src/MyStrings.hs, bin/lib/MyStrings.o)
elloH elloH

So we've covered the library and an executable, but most Haskell projects have at least one test suite. So how would we implement that?

Adding a Test Suite

Well, a test suite is basically just a special executable. So we'll make another pair of rules, generate_test and test, that will mimic generate_run and run. Very little changes, except that we'll make another special directory within bin for our test artifacts.

TEST_DIR = ${BIN}/test
TEST_EXE = ${TEST_DIR}/run_test

generate_test: test/Spec.hs library
	@mkdir -p ${TEST_DIR}
	@cp ${LIB_DIR}/*.hi ${TEST_DIR}
	@${GHC} -i${TEST_DIR} -c test/Spec.hs -hidir ${TEST_DIR} -odir ${TEST_DIR}
	@${GHC} ${TEST_DIR}/Main.o ${LIB_OBJS} -o ${TEST_EXE}

test: generate_test
	@${TEST_EXE}

Of note here is that at the final step, we're still using Main.o instead of Spec.o. Since it's an executable module, it also compiles as Main.

But we can then use this to run our tests!

$ make clean
$ make test
[1 of 2] Compiling MyFunction (src/MyFunction.hs, bin/lib/MyFunction.o)
[2 of 2] Compiling MyStrings (src/MyStrings.hs, bin/lib/MyStrings.o)
Testing case: abcd: Correct!
Testing case: Hello: Correct!

So now we have all the different components we'd expect in a normal Haskell project. So it's interesting to consider how our makefile definition would compare against an equivalent .cabal file for this project.
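One thing worth noting about the Spec file as written: it prints a message for a failing case but still exits successfully, so make test would not report a failure. Make treats a command that exits with a non-zero status as failed. Here is a sketch of a variant that exits non-zero when any case fails. The name check is hypothetical, and modifyString is copied inline only to keep the example self-contained (in the project it would still be imported from MyFunction).

```haskell
import Control.Monad (unless)
import System.Exit (exitFailure)

-- Inlined copy of modifyString so this sketch compiles on its own.
modifyString :: String -> String
modifyString x = base <> " " <> base
  where
    base = tail x <> [head x]

-- Hypothetical variant of 'test' that also reports whether the case passed.
check :: String -> String -> IO Bool
check input expected = do
  let actual = modifyString input
      ok = expected == actual
  putStrLn $ "Testing case: " <> input <> ": "
    <> (if ok
          then "Correct!"
          else "Incorrect result! Expected: " <> expected <> " Actual: " <> actual)
  return ok

main :: IO ()
main = do
  results <- sequence
    [ check "abcd" "bcda bcda"
    , check "Hello" "elloH elloH"
    ]
  -- A non-zero exit status makes the `make test` recipe fail.
  unless (and results) exitFailure
```

The same exit-code convention is what a Cabal test suite of type exitcode-stdio-1.0 relies on, so this version would behave sensibly under either build approach.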

Comparing to a Cabal File

Suppose we want to call our project HaskellMake and store its configuration in HaskellMake.cabal. We'd start our Cabal file with four metadata lines:

cabal-version: 1.12
name: HaskellMake
version: 0.1.0.0
build-type: Simple

Now our library would expose its two modules, using the src directory as its root. The only "dependency" is the Haskell base package. Finally, default-language is a required field.

library
  exposed-modules:
      MyStrings
    , MyFunction
  hs-source-dirs:
      src
  build-depends:
      base
  default-language: Haskell2010

The executable would similarly describe where the files are located and state a base dependency as well as a dependency on the library itself.

executable hello
  main-is: Main.hs
  hs-source-dirs:
      app
  build-depends:
      base
    , HaskellMake
  default-language: Haskell2010

Finally, our test suite would look very similar to the executable, just with a different directory and filename.

test-suite make-test
  type: exitcode-stdio-1.0
  main-is: Spec.hs
  hs-source-dirs:
      test
  build-depends:
      base
    , HaskellMake
  default-language: Haskell2010

And, if we add a bit more boilerplate, we could actually then compile our code with Stack! First we need a stack.yaml specifying the resolver and the package location:

# stack.yaml
resolver: lts-20.12
packages:
  - .

Then we need Setup.hs:

-- Setup.hs
import Distribution.Simple
main = defaultMain

And now we could actually run our code!

$ stack build
$ stack exec hello
elloH elloH
$ stack test
Testing case: abcd: Correct!
Testing case: Hello: Correct!

Now observant viewers will note that we don't use any Hackage dependencies in our code - only base, which GHC always knows how to find. It would require a lot of work for us to replicate dependency management. We could download a .zip file with curl easily enough, but tracking the whole dependency tree would be extremely difficult.

And indeed, many engineers have spent a lot of time getting this process to work well with Stack and Cabal! So while it would be a useful exercise to try to do this manually with a simple dependency, I'll leave that for a future article.

When comparing the two file definitions, the .cabal definition is undoubtedly more concise and human readable, but it hides a lot of implementation details. Most of the time, this is a good thing! This is exactly what we expect from tools in general; they should allow us to work more quickly without having to worry about details.

But there are times where we might, on our own time, want to try out a more adventurous path like we've done in this article, one that avoids relying too much on modern tooling. So why was this article a "useful exercise"™?

What's the Point?

So obviously, there's no chance this Makefile approach is suddenly going to supplant Cabal and Stack for building Haskell projects. Stack and Cabal are "better" for Haskell precisely because they account for the intricacies of Haskell development. In fact, by their design, GHC and Cabal both already incorporate some key ideas and features from GNU Make, especially with avoiding re-work through dependency calculation.

But there's a lot you can learn by trying this kind of exercise.

First of all, we learned about GNU Make. This tool can be very useful if you're constructing a program that combines components from different languages and systems. You could even build your Haskell code with Stack, but combine it with something else in a makefile.

A case in point for this is my recent work with Haskell and AWS. The commands for creating a Docker image, authenticating to AWS and deploying it are lengthy and difficult to remember. A makefile can, at the very least, serve as a rudimentary aliasing tool. You could run make deploy and have it automatically rebuild your changes into a Docker image and deploy that to your server.

But beyond this, it's important to take time to deliberately understand how our tools work. Stack and Cabal are great tools. But if they seem like black magic to you, then it can be a good idea to spend some time understanding what is happening at an internal level - like how GHC is being used under the hood to create our build artifacts.

Most of the fun in programming comes in effectively applying tools to create useful programs quickly. But if you ever want to make good tools in the future, you have to understand what's happening at a deeper level! At least a couple times a year, you should strive to go one level deeper in your understanding of your programming stack.

For me this time, it was understanding just a little more about GHC. Next time I might dive into dependency management, or a different topic like the internal workings of Haskell data structures. These kinds of topics might not seem immediately applicable in your day job, but you'll be surprised at the times when deeper knowledge will pay dividends for you.

Getting Better at Haskell

But enough philosophizing. If you're completely new to Haskell, going "one level deeper" might simply mean the practical ability to use these tools at a basic level. If your knowledge is more intermediate, you might want to explore ways to improve your development process. These thoughts can lead to questions like:

1. What's the best way to set up my Haskell toolchain in 2023?

2. How do I get more efficient and effective as a Haskell programmer?

You can answer these questions by signing up for my new free course Setup.hs! This will teach how to install your Haskell toolchain with GHCup and get you started running and testing your code.

Best of all, it will teach you how to use the Haskell Language Server to get code hints in your editor, which can massively increase your rate of progress. You can read more about the course in this blog post.

If you subscribe to our monthly newsletter, you'll also get an extra bonus - a 20% discount on any of our paid courses. This offer is good for two more weeks (until May 1) so don't miss out!

How to Make ChatGPT Go Around in Circles (with GHC and Haskell)

As part of my research for the recently released (and free!) Setup.hs course, I wanted to explore the different kinds of compilation commands you can run with GHC outside the context of a build system.

I wanted to know…

Can I use GHC to compile a Haskell module without its dependent source files?

The answer, obviously, should be yes. When you use Stack or Cabal to get dependencies from Hackage, you aren't downloading and recompiling all the source files for those libraries.

And I eventually managed to do it. It doesn't seem hard once you know the commands already:

$ mkdir bin

$ ghc src/MyStrings.hs src/MyFunction.hs -hidir ./bin -odir ./bin

$ ghc -c app/Main.hs -i./bin -hidir ./bin -odir ./bin

$ ghc bin/Main.o ./bin/MyStrings.o ./bin/MyFunction.o -o ./bin/hello

$ ./bin/hello

...But, being unfamiliar with the inner workings of GHC, I struggled for a while to find this exact combination of commands, especially with their arguments.

So, like I did last week, I turned to the latest tool in the developer's toolkit: ChatGPT. But once again, everyone's new favorite pair programmer had some struggles of its own on the topic! So let's start by defining exactly the problem we're trying to solve.

The Setup

Let's start with a quick look at our initial file tree.

├── app

│   └── Main.hs

└── src

    ├── MyFunction.hs

    └── MyStrings.hs

This is meant to look the way I would organize my code in a Stack project. We have two "library" modules in the src directory, and one executable module in the app directory that will depend on the library modules. These files are all very simple:

-- src/MyStrings.hs

module MyStrings where

greeting :: String

greeting = "Hello"

-- src/MyFunction.hs

module MyFunction where

modifyString :: String -> String

modifyString x = base <> " " <> base

  where

    base = tail x <> [head x]

-- app/Main.hs

module Main where

import MyStrings (greeting)

import MyFunction (modifyString)

main :: IO ()

main = putStrLn (modifyString greeting)

Our goal is to compile and run the executable with two constraints:

1. Use only GHC (no Stack or Cabal involved)

2. Compile the library separately, so that the Main module could be compiled using only the library's build artifacts, and not the source files.

Trying to Compile

Now, there are two easy ways to compile this code if we're willing to violate our constraints (particularly the second one). If all three files are in the same directory, GHC can immediately find the modules we're importing, so we can just call ghc Main.hs.

└── src

    ├── Main.hs

    ├── MyFunction.hs

    └── MyStrings.hs

...

$ cd src

$ ghc Main.hs

$ ./Main

elloH elloH

If we keep the original file structure, it's still pretty easy if we're willing to violate constraint 2 by using the library source files in the ghc command:

$ ghc app/Main.hs src/MyStrings.hs src/MyFunction.hs

$ ./Main

elloH elloH

However, with my extra constraint of not using the library source files directly, things got a little more difficult. I originally thought "Hey, let's just include the build artifacts in the GHC call." Each library module generates an interface file (.hi) and an object file (.o). So let's try including those in the GHC call:

$ ghc src/MyStrings.hs src/MyFunction.hs

$ ls src/

MyFunction.hi MyFunction.hs MyFunction.o MyStrings.hi MyStrings.hs MyStrings.o

$ ghc app/Main.hs src/MyFunction.hi src/MyFunction.o src/MyStrings.hi src/MyStrings.o

...

Could not find module 'MyStrings'

...

Could not find module 'MyFunction'

As we can see, it doesn't work! Even though we're including the build artifacts as direct arguments of ghc, this doesn't help our main module to find them.

So, feeling a little too lazy to dig deep through GHC documentation, and feeling like this problem might have too many arbitrary constraints to phrase in a Google query, I decided to try our aforementioned new favorite tool.

Enter: ChatGPT

The First Session

(Conversation has been abbreviated for relevance)

Me

How do I link existing .o files with a new source module using the 'ghc' command?

Chat GPT

To link existing object (.o) files with a new source module using the ghc command, you need to include the object files in the list of input files passed to the ghc command.

Assuming you have an existing .o file called example.o and a new source module called MyModule.hs, you can link them together into an executable program using the following command:

$ ghc -o MyProgram MyModule.hs example.o

This command tells ghc to compile and link the MyModule.hs source file with the example.o object file and create an executable program called MyProgram. The -o flag specifies the output file name.

Me

Is it possible to use a ghc command to link a Haskell object file (.o) with a Haskell source file that imports the other module?

Chat GPT

Yes, it is possible to use the ghc command to link a Haskell object file (.o) with a Haskell source file that imports the other module. Here's an example:

Suppose you have two files, MyModule.hs and OtherModule.o, and MyModule.hs imports a module that is defined in OtherModule.o. To link the two files into an executable program, you can use the following command:

$ ghc -o MyProgram MyModule.hs OtherModule.o

This command tells ghc to compile and link the MyModule.hs source file with the OtherModule.o object file, and create an executable program called MyProgram.

Me

Hmmm I don't think this works. I created the following file OtherModule.hs:

module OtherModule where

greeting :: String

greeting = "Hello"

And I'm trying to include it in another file:

module Main where

import OtherModule (greeting)

main :: IO ()

main = putStrLn greeting

I started by compiling OtherModule:

$ ghc src/OtherModule.hs

Then I moved OtherModule.o to the app directory (with MyModule.hs), and tried running this command:

$ cd app

$ ghc -o MyProgram MyModule.hs OtherModule.o

I get the error:

"Could not find module 'OtherModule'"

Chat GPT

I apologize for the confusion in my previous answer. You are correct that simply linking the object file with the executable does not work in this case.

When GHC compiles a module, it generates an interface file (.hi) that contains information about the module's name, type signatures, and other details. The compiled object file (.o) contains the compiled machine code for the functions defined in the module.

When you try to import a module in a source file, GHC looks for the interface file (OtherModule.hi) to find information about the module's name and type signatures. If the interface file is not found, GHC will raise an error.

In your case, when you compile OtherModule.hs, GHC generates an interface file called OtherModule.hi. To compile MyModule.hs, GHC needs to be able to find OtherModule.hi in order to resolve the import of OtherModule. Simply linking the OtherModule.o object file is not enough.

To make this work, you need to compile OtherModule.hs and MyModule.hs together, like this:

ghc -o MyProgram MyModule.hs src/OtherModule.hs

This command tells GHC to compile MyModule.hs and OtherModule.hs together, and create an executable program called MyProgram.

I hope this clears up the confusion. Again, I apologize for the confusion in my previous answer.

Me

Ah, so I need to include both source files, and it can't be done by linking an object file with another source file?

Chat GPT

Yes, that's correct. When you import a module in a source file, GHC needs to be able to find the interface file (.hi) for that module in order to resolve the import. If the interface file is not found, GHC will raise an error.

When you compile a source file with GHC, it generates both an object file (.o) and an interface file (.hi). The object file contains the compiled machine code for the functions defined in the module, while the interface file contains information about the module's name, type signatures, and other details.

When you compile a source file that imports another module, GHC needs to be able to find the interface file for that module in order to compile the importing module. If the interface file is not found, GHC will raise an error.

So in your case, you need to compile both MyModule.hs and OtherModule.hs together so that GHC can generate the necessary interface files and link the resulting object files together to produce the executable program.

It is not possible to directly link an object file with a source file that imports it, as GHC needs the interface file to be present in order to compile the importing module.

Analysis

Suspiciously, ChatGPT suggests a less detailed command than the previous one that failed for me!

$ ghc -o MyProgram MyModule.hs OtherModule.o

It includes only the object file, instead of both the object and interface files. And of course this fails, as I showed above.

Now there's an interesting statement that will come into play later, though I didn't realize it!

Yes, that's correct. When you import a module in a source file, GHC needs to be able to find the interface file (.hi) for that module in order to resolve the import. If the interface file is not found, GHC will raise an error.

I assumed that to "find" the interface file meant providing it on the command line (as in my original command). So with that idea having failed, I fixated on one part of the final statement:

It is not possible to directly link an object file with a source file that imports it...

And concluded that getting the right information from ChatGPT might be a lost cause. It seemed like it was sure this was possible, and then just as sure that it was impossible. But details matter, as we'll see!

Working Around It

So feeling slightly defeated, I decided to poke around the areas of the code where Cabal actually saves packages to see if there was a pattern I could follow. And I managed to cobble together a convoluted workaround.

What I observed was that GHC could use any module from a library in the Cabal package database. On my system's setup, this could be found in the .ghcup cache:

$ cd ~/.ghcup/ghc/9.2.5/lib/ghc-9.2.5/

$ ls

array-0.5.4.0/

base-4.16.4.0/

...

package.conf.d/

$ ls package.conf.d

array-0.5.4.0.conf

base-4.16.4.0.conf

...

And indeed, the package directories did not have source files, only various forms of compiled object and interface files. And each package had a corresponding .conf file in the package.conf.d directory.

So I could...

- Make my own directory in the package database (MyPackage-0.1.0.0)

- Place the .hi and .o files in there

- Create a phony MyPackage-0.1.0.0.conf and put it in package.conf.d. (Base it on an existing .conf file but make some substitutions)

And this...technically...works. But it makes me feel a little bit like:

But manually manipulating a package database wasn't the solution I had in mind. Surely there's something easier, right?

The Second Session

After a week of sitting on this, I decided to come back to ChatGPT and see if it had any other ideas. I decided to frame my question in a different way, stating a lot more details up front. Lo and behold, it had a different answer this time!

Me

Suppose I have a Haskell module called MyStrings:

module MyStrings where

greeting :: String

greeting = "Hello"

I can compile this module:

$ ghc MyStrings.hs

This generates an interface file, MyStrings.hi, and an object file, MyStrings.o.

Now suppose I have an executable file, Main.hs, that imports MyStrings:

module Main where

import MyStrings (greeting)

main :: IO ()

main = putStrLn greeting

Can I use ghc to compile this Main file by using the .hi and .o files, without including the imported source file, MyStrings.hs in the command?

Chat GPT

Yes, you can use the .hi and .o files generated by compiling MyStrings.hs to compile Main.hs without including MyStrings.hs in the command. To do so, you can use the -c option to tell GHC to compile Main.hs to an object file, and then use the -o option to link the object files together into an executable:

$ ghc -c Main.hs

$ ghc Main.o MyStrings.o -o myprogram

The first command compiles Main.hs to an object file Main.o, without attempting to link it. The second command links Main.o with MyStrings.o and creates an executable called myprogram. The .hi files are not needed for linking, so you don't need to include them in the command.

Note that in order for this to work, the interface file MyStrings.hi must be present in the same directory as Main.hs when you compile it. If you move MyStrings.hi to a different directory, you'll need to use the -i option to tell GHC where to find it:

$ ghc -i/path/to/MyStrings -c Main.hs

$ ghc Main.o /path/to/MyStrings/MyStrings.o -o myprogram

Here, -i/path/to/MyStrings tells GHC to look for MyStrings.hi in the directory /path/to/MyStrings.

Analysis

So there are two key ingredients that weren't mentioned at all in our first pass: the -c option and the -i option.

Using -c performs compilation without linking. With this option, GHC is able to produce an object file for our Main module without needing its dependent objects. So .o files are unnecessary in this stage.

We still need the .hi files though. But instead of providing them on the command line, we use the -i argument to tell GHC which directory to search for them. It's an odd argument, because we put the path right after the i, without any spacing.

After we're done with the first phase, then we can link all our object files together.
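To make the two phases concrete, here is a minimal sketch of a Haskell script that plans the same GHC invocations as plain argument lists and then runs them. The names planBuild and runBuild are hypothetical helpers made up for this illustration, not part of GHC or any library. The only library calls are callProcess from the process package and the path helpers from filepath, both of which ship with GHC. It assumes ghc is on your PATH and a POSIX-style path separator.

```haskell
import System.FilePath (replaceExtension, (<.>), (</>))
import System.Process (callProcess)

-- | The arguments for a single call to ghc.
type GhcArgs = [String]

-- | Plan the three ghc calls (hypothetical helper, for illustration only):
--   1. compile the library modules, producing .hi and .o files next to them
--   2. compile Main with -c (no linking), locating the .hi files via -i
--   3. link every object file into the final executable
planBuild :: FilePath -> [String] -> FilePath -> FilePath -> [GhcArgs]
planBuild libDir libModules mainSrc exeName =
  [ [libDir </> m <.> "hs" | m <- libModules]
  , ["-c", mainSrc, "-i" ++ libDir]
  , replaceExtension mainSrc "o"
      : [libDir </> m <.> "o" | m <- libModules]
      ++ ["-o", exeName]
  ]

-- | Run each planned call in order. callProcess throws an exception
-- if ghc exits with a failure code, so later steps are skipped.
runBuild :: [GhcArgs] -> IO ()
runBuild = mapM_ (callProcess "ghc")
```

Loading this in GHCi and evaluating runBuild (planBuild "src" ["MyStrings", "MyFunction"] "app/Main.hs" "hello") should issue the library compile, then the -c compile of Main with -isrc, then the link step. Keeping the plan as pure data means you can inspect the argument lists before anything is executed.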

Solving It

And sure enough, this approach works!

$ ghc src/MyStrings.hs src/MyFunction.hs

$ ghc -c app/Main.hs -i./src

$ ghc app/Main.o ./src/MyStrings.o ./src/MyFunction.o -o hello

$ ./hello

elloH elloH

And if we want to be a little cleaner about putting our artifacts in a single location, we can use the -hidir and -odir arguments for storing everything in a bin directory.

$ mkdir bin

$ ghc src/MyStrings.hs src/MyFunction.hs -hidir ./bin -odir ./bin

$ ghc -c app/Main.hs -i./bin -hidir ./bin -odir ./bin

$ ghc bin/Main.o ./bin/MyStrings.o ./bin/MyFunction.o -o ./bin/hello

$ ./bin/hello

elloH elloH

And we're done! Our program is compiling as we wanted it to, without our "Main" compilation command directly using the library source files.

Conclusion

So with that fun little adventure concluded, what can we learn from this? Well first of all, prompts matter a great deal when you're using a Chatbot. The more detailed your prompt, and the more you spell out your assumptions, the more likely you'll get the answer you're looking for. My second prompt was waaay more detailed than my first prompt, and the solution was much better as a result.

But a more pertinent lesson for Haskellers might be that using GHC by itself can be a big pain. So if you're a beginner, you might be asking:

What's the normal way to build Haskell Code?

You can learn all about building and running your Haskell code in our new free course, Setup.hs. This course will teach you the easy steps to set up your Haskell toolchain, and show you how to build and run your code using Stack, Haskell's most popular build system. You'll even learn how to get Haskell integrations in several popular code editors so you can learn from your mistakes much more quickly. Learn more about it on the course page.

And if you subscribe to our monthly newsletter, you'll get a code for 20% off any of our paid courses until May 1st! So don't miss out on that offer!

How to Stump ChatGPT in 1 Easy Step (Hint: Ask a Windows Question)

While doing some research for Setup.hs, my new free course, I confronted a fact of Haskell development with Stack.

The commands for running individual test suites are cumbersome.

For example, if you have a parse-tests suite in your Project.cabal file, you can run it (without running other suites) like so:

$ stack build Project:test:parse-tests

The structure isn't hard once you're used to it, but remembering the exact names of your test suites isn't always easy on larger projects. This leads to the question:

How can I create aliases for 'stack build' commands?

On Unix-based systems, it's quite easy. Just slap something like this in your .bashrc (or equivalent) and you're good to go:

alias ppt="stack build Project:test:parse-tests"

...

$ source ~/.bashrc

$ ppt

(Tests run)

Windows though is, as always, a special beast. And much as I generally prefer to use Windows Subsystem for Linux, I wanted to help any users who might be working with a pure Windows setup. This article will focus on:

How do I enable 'stack' aliases in Windows PowerShell?

Now, when I lay out the code I put in my PowerShell profile, it looks a little dense but fairly innocuous:

Function ProjectParseTest { Invoke-Expression "stack build Project:test:parse-tests" }

New-Alias -Name ppt -Value ProjectParseTest

But discovering the exact formula for getting all this to work was quite difficult. And as we'll see, even ChatGPT, the new kid on the block, struggles with this question. After a brief while struggling through Windows documentation, I turned to the chat bot. Let's review the first question:

ChatGPT Question 1

Me

How can I make command aliases in Windows 11 Powershell?

Chat GPT

You can create command aliases in Windows 11 PowerShell by using the New-Alias cmdlet. Here's an example that creates an alias ls for the Get-ChildItem cmdlet:

New-Alias -Name ls -Value Get-ChildItem

This will create an alias named ls that can be used just like the Get-ChildItem cmdlet. For example:

ls .

This will list the contents of the current directory, just as if you had typed 'Get-ChildItem .'.

To persist your alias across sessions, you need to add it to your PowerShell profile. To do that, first determine the path to your profile by running the following command:

$ $profile

If the profile does not exist yet, you can create it by running the following command:

New-Item -Type File -Path $profile -Force

Then, open the profile in your favorite text editor and add the New-Alias command. Save the file and restart your PowerShell session. Your alias will be available every time you start PowerShell.

Analysis

Now, everything Chat has told me is technically true. The command it gives successfully creates an alias. Notably though, if you want to try this yourself, you should use a different alias, as PowerShell already includes the alias ls in the most recent Windows versions (previously you would need to use the alias dir to get that effect).

Additionally, I found that the PROFILE variable was indeed set, but the file did not exist. In fact, the directory it was supposed to be in (Documents/WindowsPowerShell) did not exist either! So you'll have to make that before creating the file. But placing an alias command in there will persist the alias across sessions.

However, notice that this doesn't quite answer our question, because the subject of the alias was an established Windows expression (Get-ChildItem, also known as a cmdlet), rather than a terminal command we would write.

Let's suppose we just want to alias sb=stack build. It does not work to simply use a string for the command:

There are some clues to our eventual solution in the error message though. It tells us we need a cmdlet, a function, a script file, or an operable program.

Now stack by itself is an operable program. So we could actually do the following:

PS > New-Alias -Name st -Value "stack"

PS > st

(stack usage output)

But we want to provide arguments as part of the alias. So let's see if ChatGPT knows how. We'll see that its answers are not quite right.

ChatGPT Question 2

Me

How do I make the alias run a command, like "stack build"

Chat GPT

You can create an alias that runs a command by using the Invoke-Expression cmdlet to evaluate a string as a PowerShell expression. Here's an example that creates an alias sb for the stack build command:

New-Alias -Name sb -Value { Invoke-Expression "stack build" }

This will create an alias named sb that can be used to run the stack build command. For example:

sb

This will run the stack build command, just as if you had typed it into the PowerShell prompt.

As with the previous example, you can add this alias to your PowerShell profile to persist it across sessions.

Me

I get an error "Cannot evaluate parameter 'Value' because its argument is specified as a script block and there is no input"

How do I specify input in this alias?

Chat GPT

To specify input in a script block alias, you can use the Param keyword to declare input parameters and then pass the input to the underlying command. Here's an example that creates an alias sb for the stack build command, allowing you to specify the target to build as input:

New-Alias -Name sb -Value {

    Param ($target)

    Invoke-Expression "stack build $target"

}

This will create an alias named sb that can be used to run the stack build command with a specified target. For example:

sb my-target

This will run the stack build my-target command, just as if you had typed it into the PowerShell prompt.

As with the previous examples, you can add this alias to your PowerShell profile to persist it across sessions.

Analysis

Both of the proposed approaches fail! I commented on the issue explicitly after the first response, and then got the same error running the second approach ("Cannot evaluate parameter 'Value' because its argument is specified as a script block..."). So I gave up on my interrogation and started searching for documentation again.

Solving the Problem

I don't remember exactly where I found the solution. I think it involved cobbling together points from multiple Stack Overflow posts. Windows documentation was not very helpful. Let's recall the error message from earlier:

The term 'stack build' is not recognized as the name of a cmdlet, function, script file, or operable program.

We can't make an official Windows PS cmdlet out of our program, nor can we make an operable program with the arguments we want. We could make a script file, but running scripts from PowerShell is surprisingly difficult (there are some extra steps with permissions). The answer is that we can make a function that our alias will refer to.

This function will, incidentally, use the Invoke-Expression idea ChatGPT recommended as well, just not directly as the alias value!

The following code will go in our $PROFILE. First, we make a function that invokes our expression. We can give this function an arbitrary name, but I used a capitalized name (ProjectParseTest) to distinguish from any potential aliases.

Function ProjectParseTest { Invoke-Expression "stack build Project:test:parse-tests" }

Now we can use this function as the object of a New-Alias call! So we use the command ChatGPT suggested, just substituting our function for the -Value instead of providing a raw Invoke-Expression command.

Function ProjectParseTest { Invoke-Expression "stack build Project:test:parse-tests" }

New-Alias -Name ppt -Value ProjectParseTest

This alias succeeds now, and by putting this in my PowerShell profile, I can persist the alias across sessions!

$ ppt

(Test runs)

Haskell on Windows

Now aliases are just one small piece of the Haskell puzzle. So if you're trying to get started with Haskell, but don't have a Mac, and aren't familiar with Linux, you might want to know:

How do I set up my Haskell toolchain on Windows?

My new free course Setup.hs goes over all the basics of setting up your Haskell toolchain, including how to get started with code hints in three of the most popular editors out there. Plus, every lecture includes a walkthrough video for Windows* so you can learn what kinds of odd quirks you might come across! You can read more about the course in this article.

Plus, if you subscribe to our monthly newsletter, you'll also get a 20% discount code for all our paid courses that is good until May 1! So don't miss out on your chance to learn about Haskell!

*Separate walkthrough videos for MacOS and Linux are also included.

New Free Course: Setup.hs!

You can read all the Haskell articles you want, but unless you write the code for yourself, you'll never get anywhere! But there are so many different tools and ideas floating around out there, so how are you supposed to know what to do? How do you even get started writing a Haskell project? And how can you make your development process as efficient as possible?

The first course I ever made, Your First Haskell Project, was designed to help beginners answer these questions. But over the years, it's become a bit dated, and I thought it would be good to sunset that course and replace it with a new alternative, Setup.hs. Like its predecessor, Setup.hs is totally free!

Setup.hs is a short course designed for many levels of Haskellers! Newcomers will learn all the basics of building and running your code. More experienced Haskellers will get some new tools for managing all your Haskell-related programs, as well as some tips for integrating Haskell features into your code editor!

Here's what you'll learn in the course:

- How to install and manage all of the core Haskell tools (GHC, Cabal, Stack)

- What components you need in your Haskell project and how you can build and run them all

- How to get your editor to use advanced features, like flagging compilation errors and providing autocomplete suggestions.

We'll do all of this in a hands-on way with detailed, step-by-step exercises!

Improvements

Setup.hs makes a few notable updates and improvements compared to Your First Haskell Project.

First, it uses GHCup to install all the necessary tools instead of the now-deprecated Haskell Platform. GHCup allows for seamless switching between the different versions of all our tools, which can be very useful when you have many projects on your system!

Second, it goes into more detail about pretty much every topic, whether that's project organization, Stack snapshots, or extra dependencies.

Third and probably most importantly, Setup.hs will teach you how to get Haskell code hints in three of the most common code editors (VS Code, Vim & Emacs) using Haskell Language Server. Even if these lectures don't cover the particular editor you use, they'll give you a great idea of what you need to search for to learn how. I can't overstate how useful these kinds of integrations are. They'll massively speed up your development and, if you're a beginner, they'll rapidly accelerate your learning.

If all this sounds super interesting to you, head over to the course page and sign up!

Series Spotlight: Monads and Functional Structures!

Every so often I like to spotlight some of the permanent series you can find on the skills page, which contains over a dozen tutorial series for you to follow! This week I’m highlighting my series on Monads and Functional Structures!

Monads are widely understood to be one of the trickier concepts for newcomers to Haskell, since they are very important, very abstract and conceptual, and do not really appear in most mainstream languages. There are a lot of monad tutorials out there on the internet, most of which are either too shallow, or too deep.

This series will help you understand the concept from the ground up, starting with simpler abstract structures like functors and applicative functors.

Here’s an outline of the series:

For a more in depth look at monads and the effects they help us implement in our code, you can check out our two courses, Making Sense of Monads and Effectful Haskell!

GHC 9.6.1 Includes Javascript Backend

Some exciting news this week, as the release of GHC 9.6.1 includes the merger of a couple of different web-based backends - one for Web Assembly and one for Javascript. These features move Haskell in the direction of being a first-class web development language!

From the release notes:

The WebAssembly backend has been merged. This allows GHC to be built as a cross-compiler that targets wasm32-wasi and compiles Haskell code to self-contained WebAssembly modules that can be executed on a variety of different runtimes.

The JavaScript backend has been merged. GHC is now able to be built as a cross-compiler targeting the JavaScript platform.

This is a particularly exciting direction for me, since I’ve been exploring ways to use Haskell in web development for many years, but found a lot of the current approaches require a lot of onboarding work to really get going in a meaningful way. In my Practical Haskell course, I show how to do a basic integration of a Haskell Web Server and an Elm frontend. But I look forward to the day when I can re-do that section of the course entirely with Haskell!

Of course, merging the backends is just a first step - there’s a long way to go. A few caveats mentioned in the release notes as well:

There are a few caveats to be aware of [with the WebAssembly backend]:

To use the WebAssembly backend, one would need to follow the instructions on ghc-wasm-meta. The WebAssembly backend is not included in the GHC release bindists for the time being, nor is it supported by ghcup or stack yet.

The WebAssembly backend is still under active development. It’s presented in this GHC version as a technology preview, bugs and missing features are expected.

The [Javascript] backend should be considered a technology preview. As such it is not ready for use in production, is not distributed in the GHC release bindists and requires the user to manually build GHC as a cross-compiler. See the JavaScript backend wiki page on the GHC wiki for the current status, project roadmap, build instructions and demos.

Both of these backends are technology previews, meaning they’re only ready for the most adventurous Haskellers to start experimenting - a lot of setup work is still required. But it’s certainly an important step in the right direction! Since these are included with GHC 9.6.1, improvements are possible in further minor releases to GHC 9.6, rather than needing to wait for the next major release of GHC 9.8.

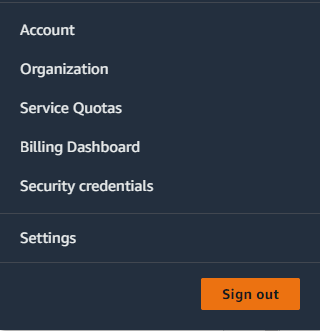

Adding a Database to our AWS Server

In the last few articles on the blog, we've been exploring how to launch a Haskell web server using AWS. Here are the steps we've done so far:

- Create a local Docker Image

- Upload the Docker Image to ECR

- Deploy your Server using Elastic Beanstalk

In this final part of the series, we're going to learn to attach a database to our application.

There are a few gotchas to this. Setting up the database for first time use is a bit tricky, because we have to do some initial migrations. Then we need to use environment variables to ensure it works both locally and on the remote server. Let's get started.

A Basic Schema

Let's first assume we have a super basic schema using the Persistent library. (If you want some details on how this works, see our Real World Haskell series). We'll just have one type in our database, and users will use server endpoints to create or fetch these "text entries".

import Database.Persist.Sql

import qualified Database.Persist.TH as PTH

import Data.Text (Text)

PTH.share [PTH.mkPersist PTH.sqlSettings, PTH.mkMigrate "migrateAll"] [PTH.persistLowerCase|

  TextEntry sql=text_entries

    text Text

|]

An important product of this Template Haskell sequence is the migrateAll function, which will run the proper commands to migrate a Postgres database to fit our schema by creating tables.

Whenever we first create a database, we have to make sure it's migrated. But before we even do that we have to make sure we've created a database for Postgres to use! Let's see the commands we need for this, and how to run them in Haskell.

Running Setup Commands

When you install Postgres on your machine, you'll have separate "databases" on your system to help you keep your data separate. Separating data allows each database to have its own "users" table without having any name conflicts, for one example. By default, Postgresql comes installed with a database called postgres.

But we don't want to use this to store our data. We want to create a separate database. We want to create this database if it's the first time we're running the server with a database. But otherwise, we just want to make sure its migrations are up to date.

Now, the command we would run to create this database is simple:

CREATE DATABASE quiz;

But we can first run this command to see if this database already exists:

SELECT datname FROM pg_database WHERE datname = 'quiz';

Both these commands assume we are connected to the postgres database.

Since these first two instructions are raw commands, we can run them using the Postgresql Simple library. Here's some code to do this.

{-# LANGUAGE OverloadedStrings, ScopedTypeVariables #-}

import Control.Monad (void, when)
import Data.ByteString.Char8 (pack)
import Database.PostgreSQL.Simple (Only, connectPostgreSQL, execute_, query_)

createDBIfMissing :: String -> IO ()
createDBIfMissing connString = do
  connection <- connectPostgreSQL (pack connString)
  putStrLn "Checking/Creating 'quiz' Database"
  let checkQuery = "SELECT datname FROM pg_database WHERE datname = 'quiz';"
  -- An empty result list means the database does not exist yet.
  (checkResult :: [Only String]) <- query_ connection checkQuery
  when (null checkResult) $ do
    putStrLn "Not found! Creating 'quiz' database!"
    let createQuery = "CREATE DATABASE quiz;"
    void $ execute_ connection createQuery

When we run checkQuery, it checks whether the quiz database exists. If the result is empty, we run the additional command to create our database.
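If you ever want the database name to be something other than a hard-coded quiz, the two statements above can be built from a name. Here's a minimal sketch of that idea using only base. The helpers quoteLiteral, quoteIdent, checkDbQuery and createDbQuery are hypothetical names for illustration, not part of any library, and the escaping assumes Postgres's default standard-conforming string settings.

```haskell
-- Hypothetical helpers (not from postgresql-simple): build the two setup
-- statements for an arbitrary database name. Nothing here talks to Postgres.

-- | Wrap a string as a single-quoted SQL literal, doubling embedded quotes.
quoteLiteral :: String -> String
quoteLiteral s = "'" ++ concatMap esc s ++ "'"
  where
    esc '\'' = "''"
    esc c    = [c]

-- | Wrap a name as a double-quoted SQL identifier, doubling embedded quotes.
quoteIdent :: String -> String
quoteIdent s = "\"" ++ concatMap esc s ++ "\""
  where
    esc '"' = "\"\""
    esc c   = [c]

-- | The existence check, with the name used as a string literal.
checkDbQuery :: String -> String
checkDbQuery name =
  "SELECT datname FROM pg_database WHERE datname = " ++ quoteLiteral name ++ ";"

-- | The creation statement, with the name used as an identifier.
createDbQuery :: String -> String
createDbQuery name = "CREATE DATABASE " ++ quoteIdent name ++ ";"
```

The name shows up as a string literal in the check but as an identifier in the CREATE statement, which is why the sketch has two different quoting helpers. For the check alone, you could instead use the query function from postgresql-simple with a ? placeholder and let the library do the substitution.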

Once we have this function, we can write a wrapper that will create the database and then migrate it for our schema. Here's what this wrapper looks like:

import Control.Monad.Logger (LoggingT, runStdoutLoggingT)
import Control.Monad.Reader (runReaderT)
import Database.Persist.Postgresql (withPostgresqlConn)

migrateDb :: String -> String -> IO ()
migrateDb baseConnString quizConnString = do
  createDBIfMissing baseConnString
  putStrLn "Migrating Database"
  runPG quizConnString (runMigration migrateAll)

runPG :: String -> SqlPersistT (LoggingT IO) a -> IO a
runPG connString action = runStdoutLoggingT $
  withPostgresqlConn (pack connString) $ \backend ->
    runReaderT action backend

Notice migrateDb takes two different connection strings. One is for the base (postgres) database. The other is for our new quiz database. The creation queries run on the first; the migration runs on the second.

But how do we use these functions within our server?

Loading the URI

When we kick off our server, we have to load the database URI for our Postgres database. We'll use the format of {host}:{port}. If you're running it locally, this would just be localhost:5432. But when we deploy the server, we'll use a different URI. So let's write a function to load the host and port (separated by a colon) from an environment variable named DATABASE_URI.

import System.Environment (lookupEnv)

loadDatabaseEnv :: IO (String, String)
loadDatabaseEnv = do
  dbEnv <- lookupEnv "DATABASE_URI"
  return $ case span (/= ':') <$> dbEnv of
    -- Drop the ':' separator so the port is just the digits.
    Just (host, _ : port) -> (host, port)
    -- Variable unset, or no colon present: fall back to the local default.
    _ -> ("localhost", "5432")

Now we need to construct the full Postgres connection string. This has the following general format:

host={host} port={port} dbname={dbname} user={user} password={password}

As a default value, you can often just have the username and password both be postgres (though of course this isn't recommended for a serious database). But let's make a function to substitute in the other values:

mkPostgresUri :: String -> String -> String -> String
mkPostgresUri host port dbname =
  "host='" <> host <> "' port=" <> port <> " dbname='" <> dbname
    <> "' user='postgres' password='postgres'"

Finally, we'll pull our different pieces together, get both URIs, and launch our server. In my example, I'm using a Servant server (more details on that in this article), and this will often require passing the database string as an argument.

server :: String -> Server QuizAPI
server connString = ...

runServer :: IO ()
runServer = do
  (host, port) <- loadDatabaseEnv
  let baseConnString = mkPostgresUri host port "postgres"
  let quizConnString = mkPostgresUri host port "quiz"
  migrateDb baseConnString quizConnString
  putStrLn "Running Server!"
  run 8080 (serve api (server quizConnString))

Having made all these modifications to our server, we of course have to rebuild and redeploy our Docker image! We can create the new local image with:

docker build -t quiz-server .

Then for more detailed instructions on deploying it, refer to part 2 and part 3 of this series!

When you deploy the server, you'll find it's crashing of course, because we haven't configured the database! So let's get to the real meat of this article…setting up the database on AWS!

Create a Database with RDS

This process is not actually too challenging. The first thing we're going to do is use RDS (Relational Database Service) to set up our database. This is easily done from the AWS console.

- Select the RDS service

- Hit the orange "Create Database" button

- Go through the creation wizard, making sure to select "Postgres" and the "Free Tier" option (assuming you're just making a test app).

Most of the default options are fine, but as I mentioned above I specified postgres for the username and password of the database. I also unchecked the box for "Performance Insights" since this could lead to additional billing charges if you forget to turn it off.

Once you've created your database, you can then click the "databases" link on the sidebar, and select your new database. On that screen, you'll be able to see the "endpoint" and "port" of your database. These are the values you'll need for your environment!

Add Environment Variable

To connect your environment to the database, now you just have to add an environment variable! To do this, you have to access the configuration from the web portal:

- Go to the Elastic Beanstalk service

- Select "Environments" from the sidebar and then click the environment you have running your server.

- Click on the "Configuration" link on the side, and then select the "Edit" button in the "Software" section.

- At the very bottom, you'll find the "Environment Properties" section. Fill in DATABASE_URI as the key, and the {host}:{port} combination you got from your database in RDS as the value.

- Click "Apply" to make the change!

By adding an environment variable, you are changing the configuration of your server, so it will reboot. Once it relaunches, you should find that it works, and you can persist information from your database!
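If the server still can't reach the database after the relaunch, one thing worth ruling out is a malformed DATABASE_URI value. Here's a small sketch of a stricter parser for the {host}:{port} format that reports what is wrong instead of silently falling back to a default. The name parseDatabaseUri is hypothetical, and the checks (non-empty host, numeric port) are assumptions about what counts as valid here.

```haskell
import Data.Char (isDigit)

-- | Hypothetical stricter parser for a "{host}:{port}" value.
-- Returns the host and the port (without the ':' separator),
-- or a message describing the first problem found.
parseDatabaseUri :: String -> Either String (String, String)
parseDatabaseUri raw =
  case break (== ':') raw of
    (_, "") -> Left "expected {host}:{port}, but found no ':'"
    ("", _) -> Left "host is empty"
    (host, _ : port)
      | null port              -> Left "port is empty"
      | not (all isDigit port) -> Left ("port is not numeric: " ++ port)
      | otherwise              -> Right (host, port)
```

You could call this on the result of lookupEnv at startup and print the Left message before exiting, so a typo in the environment property shows up clearly in the Elastic Beanstalk logs.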

Conclusion

Hopefully this series has helped you learn how to deploy your Haskell code to AWS! If you'd like to see this whole article in video form, you can check out our YouTube video covering these steps!

For more tips on creating a "Real World" application, you can read our series on web skills! You can also download our Haskell Production checklist for some ideas of other libraries and tools you can use to improve your Haskell!

Deploying a Haskell Server to AWS

In the last few articles, we've been talking about how to deploy a Haskell application using AWS. This is part 3 of the series. So if you haven't done parts 1 & 2, you should start there so you can follow along!

In Part 1, we wrote a Dockerfile and created a local Docker image containing a simple program for a Haskell web server.

In Part 2, we pushed our container image to the AWS container registry (ECR). Notably, this involved creating an AWS account, downloading AWS command line tools and authenticating on the command line. We'll run a couple more of these commands today, so hopefully you're still authenticated!

Now that our container is uploaded, deploying it is fairly straightforward. It does require a couple of new concepts, though, as we'll see.

Adding ECR Permission

Before we get started, there's one step we have to take on the web portal. You need to give Elastic Beanstalk permission to download your ECR containers. You can do this using the IAM service from the AWS portal. Then follow these steps:

- Select "roles" on the left hand menu.

- Select "aws-elasticbeanstalk-ec2-role" in the list in the middle of the screen.

- Click "Add Permissions"

- Search for and select "AmazonEC2ContainerRegistryReadOnly"

Now let's get into the steps on our local machine.

Configuration File

There are multiple approaches to deploying a Docker container, but the one that worked most easily for me was to create a file called Dockerrun.aws.json. (For other methods, refer to the documentation). This approach involves a counter-intuitive idea. We're going to create a separate directory outside of our main project directory. We'll call it remote.

~/Quiz $ cd ..
~/ $ mkdir remote && cd remote

In this directory, we'll make a single file, called Dockerrun.aws.json. This will, of course, be a JSON file. It will be a very simple configuration file telling our application to use the Docker image we pushed last time to ECR. We have to start it by specifying the version of the program (which is 1 because we're only using a single container).

{
  "AWSEBDockerrunVersion": "1",
  ...
}

Now we'll tell it to use the Docker image we pushed last time by giving the URI under the Image object:

{
  "AWSEBDockerrunVersion": "1",
  "Image": {
    "Name": "165102442442.dkr.ecr.us-west-2.amazonaws.com/quiz-server"
  },
  ...
}

Finally, we'll specify the port, similar to a Dockerfile. We'll use 8080 both for the "Container" port and the "Host" port.

{
  "AWSEBDockerrunVersion": "1",
  "Image": {
    "Name": "165102442442.dkr.ecr.us-west-2.amazonaws.com/quiz-server"
  },
  "Ports": [{
    "ContainerPort": 8080,
    "HostPort": 8080
  }]
}

This is the only file we need in this directory! So now let's see what commands we need to run.
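If you end up juggling more than one image or port, it can help to see how the pieces of this file fit together. Here's a minimal sketch, in Haskell with only base, that assembles the ECR image URI from its account ID, region and repository name, and then renders the same single-container file. Both ecrImageUri and dockerrunJson are hypothetical helper names, not part of any AWS tooling, and the rendering assumes the image name contains no characters that would need JSON escaping.

```haskell
-- | Hypothetical helper: assemble an ECR image URI from its parts.
ecrImageUri :: String -> String -> String -> String
ecrImageUri accountId region repo =
  accountId ++ ".dkr.ecr." ++ region ++ ".amazonaws.com/" ++ repo

-- | Hypothetical helper: render a single-container Dockerrun.aws.json,
-- using the same port for the container and the host.
-- Assumes the image name is plain ASCII with nothing to escape.
dockerrunJson :: String -> Int -> String
dockerrunJson image port = unlines
  [ "{"
  , "  \"AWSEBDockerrunVersion\": \"1\","
  , "  \"Image\": {"
  , "    \"Name\": " ++ show image
  , "  },"
  , "  \"Ports\": [{"
  , "    \"ContainerPort\": " ++ show port ++ ","
  , "    \"HostPort\": " ++ show port
  , "  }]"
  , "}"
  ]
```

For example, writeFile "Dockerrun.aws.json" (dockerrunJson (ecrImageUri "165102442442" "us-west-2" "quiz-server") 8080) would produce the file shown above.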

Creating the Application

Now we have two more steps that can largely be accomplished on the command line. First, we have to create an application. Then we have to create an environment to use for that application.

Before we can create an application though, we have to create a Git repository, just to store our single file! That's how AWS figures out what to push for configuration.

~/remote $ git init
~/remote $ git add .
~/remote $ git commit -m "First Commit"

Now we can create the application using the eb init command. We'll give our application the name quiz-server.

~/remote $ eb init -p docker quiz-server

You can then see your application on the web portal by accessing the "Elastic Beanstalk" service and clicking the "Applications" tab on the left menu.

Creating the Environment

Now we have to create an environment for our application. When first creating this environment, we use the eb create command. We'll give this environment the name quiz-server-env.

~/remote $ eb create quiz-server-env

This will take a while to deploy. But once it's done, you should be able to see it by clicking the "Environments" tab from the previous screen in the web portal. This will also show you the URL you can use to access your server. It's now successfully deployed!

Debugging

Sometimes, your deployment might fail. For example, you might misspell the name of your container. If you click on your environment (from the "Environments" tab), then you'll be able to access the "Logs" on the left hand menu. This can help you debug.

If you need to change your configuration file, you'll need to commit it, though you don't need to push it to any remote repository. You instead use eb deploy to push your changes.

~/remote $ git add Dockerrun.aws.json
~/remote $ git commit -m "New Commit"
~/remote $ eb deploy

Now the deployment process should start again!

Video

You can also watch our YouTube video to see all these steps in action!

Conclusion

You now have enough information to deploy a Haskell web application to AWS! We'll have one more installment in this series around adding a database to our application, so stay tuned for that! In the meantime, subscribe to our monthly newsletter so you can stay up to date with all the latest news!