Fighting Back!

In last week's article, we made our enemies a lot smarter. We gave them a breadth-first search algorithm so they could find the shortest path to us. This made them much harder to avoid. This week, we fight back! We'll develop a mechanism so that our player can stun nearby enemies and slip past them.

None of the elements we're going to implement are particularly challenging in isolation. The focus this week is on maintaining a methodical development process. To that end, it'll help a lot to look at the GitHub repository for this project while reading this article. The code for this part is on the part-7 branch.

We won't go over every detail in this article. Instead, each section will describe one discrete stage in developing these features. We'll examine the important parts, and give some high level guidelines for the rest. Then there will be a single commit, in case you want to examine anything else that changed.

Haskell is a great language for following a methodical process. This is especially true if you use the idea of compile-driven development (CDD). If you've never written any Haskell before, you should try it out! Download our Beginners Checklist and get started! You can also read about CDD and other topics in our Haskell Brain series!

Feature Overview

To start, let's formalize the definition of our new feature.

- The player can stun all enemies within a 5x5 square of tiles centered on them (a radius of 2 tiles, ignoring walls).

- This will stun enemies for a set duration of time. However, the stun duration will go down each time an enemy gets stunned.

- The player can only use the stun functionality once every few seconds. This delay should increase each time they use the stun.

- Enemies will move faster each time they recover from getting stunned.

- Stunned enemies appear as a different color.

- Affected tiles briefly appear as a different color.

- When the player's stun is ready, their avatar should have an indicator.

It seems like there are a lot of different criteria here. But no need to worry! We'll follow our development process and it'll be fine! We'll need more state in our game for a lot of these changes. So, as we have in the past, let's start by modifying our World and related types.

World State Modifications

The first big change is that we're going to add a Player type to carry more information about our character. This will replace the playerLocation field in our World. It will hold the player's current location, as well as two timer values related to our stun weapon. The first value will be the time remaining until we can use it again. The second value will be the next delay after we use it. This second value is the one that will increase each time we use the stun. We'll use Word (unsigned int) values for all our timers.

data Player = Player

{ playerLocation :: Location

, playerCurrentStunDelay :: Word

, playerNextStunDelay :: Word

}

data World = World

{ worldPlayer :: Player

...

We'll add some similar new fields to the enemy. The first of these is a lag time: the number of ticks an enemy waits between moves. The more times we stun them, the lower this will go, and the faster they'll get. Then, just as we keep track of a stun delay for the player, each enemy will have a stun remaining time. (If the enemy is active, this will be 0.) We'll also store the "next stun duration", like we did with the Player. For the enemy, this duration will decrease each time the enemy gets stunned, so the game gets harder.

data Enemy = Enemy

{ enemyLocation :: Location

, enemyLagTime :: Word

, enemyNextStunDuration :: Word

, enemyCurrentStunTimer :: Word

}

Finally, we'll add a couple of fields to our world. First, a list of locations affected by the stun. These will be highlighted briefly when we use the stun and then go away. Second, we need a worldTime. This will help us keep track of when enemies should move.

data World = World

{ worldPlayer :: Player

, startLocation :: Location

, endLocation :: Location

, worldBoundaries :: Maze

, worldResult :: GameResult

, worldRandomGenerator :: StdGen

, worldEnemies :: [Enemy]

, stunCells :: [Location]

, worldTime :: Word

}

At this point, we should stop thinking about our new features for a second and get the rest of our code to compile. Here are the broad steps we need to take.

- Every instance of playerLocation w should change to access playerLocation (worldPlayer w).

- We should make a newPlayer expression and use it whenever we re-initialize the world.

- We should make a similar function mkNewEnemy. This should take a location and initialize an Enemy.

- Any instances of Enemy constructors in pattern matches need the new arguments. Use wildcards for now.

- Other places where we initialize the World should add extra arguments as well. Use the empty list for the stunCells and 0 for the world timer.

Take a look at this commit for details!
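To make the steps above concrete, here is a minimal sketch of the new types along with the newPlayer and mkNewEnemy helpers. It assumes Location is (Int, Int), and it borrows default timer values that this article introduces later: a 200-tick player delay, a 60-tick enemy stun, and a lag time of 20. The player's starting location of (0, 0) and the initial stun delay of 0 are assumptions.

```haskell
type Location = (Int, Int)

-- The player carries its location plus the two stun timers.
data Player = Player
  { playerLocation         :: Location
  , playerCurrentStunDelay :: Word  -- ticks until the stun can be used again
  , playerNextStunDelay    :: Word  -- cooldown applied after the next stun
  } deriving (Show, Eq)

-- Enemies track how often they move and how long they stay stunned.
data Enemy = Enemy
  { enemyLocation         :: Location
  , enemyLagTime          :: Word   -- ticks between moves
  , enemyNextStunDuration :: Word   -- stun length applied on the next hit
  , enemyCurrentStunTimer :: Word   -- 0 means the enemy is active
  } deriving (Show, Eq)

-- Assumed defaults: start at (0, 0) with the stun ready, and a
-- 200-tick (10-second) delay after the first use.
newPlayer :: Player
newPlayer = Player (0, 0) 0 200

-- Assumed defaults: lag time of 20 ticks, first stun lasts 60 ticks,
-- and the enemy starts out active.
mkNewEnemy :: Location -> Enemy
mkNewEnemy loc = Enemy loc 20 60 0
```

With helpers like these, re-initializing the world only needs to mention newPlayer and map mkNewEnemy over a list of locations, so later tweaks to the default timers happen in one place.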

A Matter of Time

For the next step, we want to make sure all our timers update on each tick. Our game entities now have several fields that should change every tick: our world timer should go up, and our stun delay timers should go down. Let's start with a simple function that will increment the world timer:

incrementWorldTime :: World -> World

incrementWorldTime w = w { worldTime = worldTime w + 1 }

In the normal case of our update function, we want to apply this increment:

updateFunc :: Float -> World -> World

updateFunc _ w

...

| otherwise = incrementWorldTime (w

{ worldRandomGenerator = newGen

, worldEnemies = newEnemies

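})

Now there are some timers we'll want to decrement. Since Word is unsigned, subtracting 1 from 0 would wrap around to the largest Word value, so let's make a quick helper function that stops at 0: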

decrementIfPositive :: Word -> Word

decrementIfPositive 0 = 0

decrementIfPositive x = x - 1

We can use this to create a function to update our player each tick. All we need to do is reduce the stun delay. We'll apply this function within our update function for the world.

updatePlayerOnTick :: Player -> Player

updatePlayerOnTick p = p

{ playerCurrentStunDelay =

decrementIfPositive (playerCurrentStunDelay p)

}

updateFunc :: Float -> World -> World

updateFunc _ w

...

| otherwise = incrementWorldTime (w

{ worldPlayer = newPlayer

, ...

})

where

player = worldPlayer w

newPlayer = updatePlayerOnTick player

...

Now we need to change how we update enemies:

- The function needs the world time. Enemies should only move when the world time is a multiple of their lag time.

- Enemies should also only move if they aren't stunned.

- Reduce the stun timer if it's positive.

updateEnemy

:: Word

-> Maze

-> Location

-> Enemy

-> State StdGen Enemy

updateEnemy time maze playerLocation

e@(Enemy location lagTime nextStun currentStun) =

if not shouldUpdate

then return (e { enemyCurrentStunTimer = decrementIfPositive currentStun }) -- keep counting down while stunned

else do

… -- Make the new move!

return (Enemy newLocation lagTime nextStun

(decrementIfPositive currentStun))

where

isUpdateTick = time `mod` lagTime == 0

shouldUpdate = isUpdateTick &&

currentStun == 0 &&

not (null potentialLocs)

potentialLocs = …

...

There are also a couple of minor modifications elsewhere.

- The time step argument for the play function should now be 20 steps per second, not 1.

- Enemies should start with 20 for their lag time.

We haven't affected the game yet, since we can't use the stun! This is the next step. But this is important groundwork for making everything work. Take a look at this commit for how this part went down.
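The timing rules above can be checked in isolation. This sketch pulls them into pure functions. The names shouldEnemyMove and movingTicks are hypothetical, and in this model the stun timer counts down on every tick, including ticks where the enemy doesn't move.

```haskell
-- Counts down to zero. Word is unsigned, so a plain (x - 1) at 0 would
-- wrap around to the largest Word instead of staying at 0.
decrementIfPositive :: Word -> Word
decrementIfPositive 0 = 0
decrementIfPositive x = x - 1

-- Hypothetical helper: an enemy may move on ticks that are a multiple of
-- its lag time, and only while its stun timer is 0. The lagTime > 0 check
-- guards against `mod` by zero, which would throw an exception.
shouldEnemyMove :: Word -> Word -> Word -> Bool
shouldEnemyMove time lagTime currentStun =
  lagTime > 0 && time `mod` lagTime == 0 && currentStun == 0

-- Hypothetical simulation: starting with the given stun timer, list the
-- ticks (from 0 up to, but not including, totalTicks) on which the enemy
-- would move. The stun timer counts down on every tick.
movingTicks :: Word -> Word -> Word -> [Word]
movingTicks lagTime stun totalTicks = go 0 stun
  where
    go t s
      | t >= totalTicks = []
      | shouldEnemyMove t lagTime s = t : go (t + 1) (decrementIfPositive s)
      | otherwise = go (t + 1) (decrementIfPositive s)
```

For example, movingTicks 20 60 100 gives [60, 80]: at 20 ticks per second, an enemy with a lag time of 20 moves once per second, and a 60-tick stun delays its first move until tick 60.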

Activating the Stun

Let's make that stun work! We'll trigger it with the space bar. Most of this logic will go into the event handler. Let's set up the point where we enter this command:

inputHandler :: Event -> World -> World

inputHandler event w

...

| otherwise = case event of

… -- (movement keys)

(EventKey (SpecialKey KeySpace) Down _ _) -> ...

What are all the different things that need to happen?

- Enemies within range should get stunned. This means their current stun timer gets set to their "next stun duration" value.

- Their "next stun timers" should decrease (let's say by 5 to a minimum of 20).

- Our player stun delay timer should get the "next" value as well. Then we'll increase the "next" value by 10.

- Our "stun cells" list should include all cells within range.

None of these things are challenging on their own. But combining them all is a bit tricky. Let's start with some mutation functions:

activatePlayerStun :: Player -> Player

activatePlayerStun (Player loc _ nextStunTimer) =

Player loc nextStunTimer (nextStunTimer + 10)

stunEnemy :: Enemy -> Enemy

stunEnemy (Enemy loc lag nextStun _) =

Enemy loc newLag newNextStun nextStun

where

newNextStun = max 20 (nextStun - 5)

newLag = max 10 (lag - 1)

Now we want to apply these mutators within our input handler. To start, remember that we should only be able to trigger any of this logic if the player's stun delay is already 0!

inputHandler :: Event -> World -> World

inputHandler event w

...

| otherwise = case event of

… -- (movement keys)

(EventKey (SpecialKey KeySpace) Down _ _) ->

if playerCurrentStunDelay currentPlayer /= 0 then w

else ...

Now let's add a helper that will give us all the locations affected by the stun. We want everything in a 5x5 grid around our player, but we also want bounds checking. Luckily, we can do all this with a neat list comprehension!

where

...

stunAffectedCells :: [Location]

stunAffectedCells =

let (cx, cy) = playerLocation currentPlayer

in [(x,y) | x <- [(cx-2)..(cx+2)], y <- [(cy-2)..(cy+2)],

x >= 0 && x <= 24, y >= 0 && y <= 24]

Now we'll make a wrapper around our enemy mutation to determine which enemies get stunned:

where

...

stunEnemyIfClose :: Enemy -> Enemy

stunEnemyIfClose e = if enemyLocation e `elem` stunAffectedCells

then stunEnemy e

else e

Now we can incorporate all our functions into a final update!

inputHandler :: Event -> World -> World

inputHandler event w

...

| otherwise = case event of

… -- (movement keys)

(EventKey (SpecialKey KeySpace) Down _ _) ->

if playerCurrentStunDelay currentPlayer /= 0

then w

else w

{ worldPlayer = activatePlayerStun currentPlayer

, worldEnemies = stunEnemyIfClose <$> worldEnemies w

, stunCells = stunAffectedCells

}

Other small updates:

- When initializing game objects, they should get default values for their "next" timers. For the player, we give 200 (10 seconds). For the enemies, we stun them for 60 ticks (3 seconds) initially.

- When updating the world, clear out the "stun cells". Use another mutator function to achieve this:

clearStunCells :: World -> World

clearStunCells w = w { stunCells = [] }

Take a look at this commit for a review on this part!
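Here is a self-contained sketch of the whole space-bar action as a pure function, which makes the cooldown and radius rules easy to test. The mutators follow the article. useStun is a hypothetical name, and returning Nothing during the cooldown stands in for the handler returning w unchanged. The 0 to 24 bounds assume the 25x25 maze used in this series.

```haskell
type Location = (Int, Int)

data Player = Player
  { playerLocation         :: Location
  , playerCurrentStunDelay :: Word
  , playerNextStunDelay    :: Word
  } deriving (Show, Eq)

data Enemy = Enemy
  { enemyLocation         :: Location
  , enemyLagTime          :: Word
  , enemyNextStunDuration :: Word
  , enemyCurrentStunTimer :: Word
  } deriving (Show, Eq)

-- Cells in the 5x5 square centered on a location, clipped to a 25x25 maze.
stunAffectedCells :: Location -> [Location]
stunAffectedCells (cx, cy) =
  [ (x, y) | x <- [cx - 2 .. cx + 2], y <- [cy - 2 .. cy + 2]
           , x >= 0, x <= 24, y >= 0, y <= 24 ]

-- The cooldown becomes the "next" delay, which then grows by 10.
activatePlayerStun :: Player -> Player
activatePlayerStun (Player loc _ next) = Player loc next (next + 10)

-- Word subtraction wraps below zero; these are safe only because the lag
-- never drops below 10 and the next stun never drops below 20.
stunEnemy :: Enemy -> Enemy
stunEnemy (Enemy loc lag nextStun _) =
  Enemy loc (max 10 (lag - 1)) (max 20 (nextStun - 5)) nextStun

-- Hypothetical pure core of the handler: Nothing while cooling down,
-- otherwise the updated player, enemies, and highlighted cells.
useStun :: Player -> [Enemy] -> Maybe (Player, [Enemy], [Location])
useStun p enemies
  | playerCurrentStunDelay p /= 0 = Nothing
  | otherwise = Just (activatePlayerStun p, map stunIfClose enemies, cells)
  where
    cells = stunAffectedCells (playerLocation p)
    stunIfClose e
      | enemyLocation e `elem` cells = stunEnemy e
      | otherwise = e
```

Near a corner, the square is clipped, so stunAffectedCells (0, 0) returns only 9 cells instead of 25.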

Drawing the Changes

Our game works as expected now! But as our last update, let's make sure we represent these changes on the screen. This will make the game a much better experience. Here are some changes:

- Enemies will turn yellow when stunned

- Affected squares will flash teal

- Our player will have a red inner circle when the stun is ready

Each of these is pretty simple! For our enemies, we'll add a little extra logic around what color to use, depending on the stun timer:

enemyPic :: Enemy -> Picture

enemyPic (Enemy loc _ _ currentStun) =

let enemyColor = if currentStun == 0 then orange else yellow

...

in Color enemyColor (Polygon [tl, tr, br, bl])

For the player, we'll add some similar logic. The indicator will be a smaller red circle inside the normal black circle:

stunReadyCircle = if playerCurrentStunDelay (worldPlayer world) == 0

then Color red (Circle 5)

else Blank

playerMarker = translate px py (Pictures [stunReadyCircle, Circle 10])

Finally, for the walls, we need to check if a location is among the stunCells. If so, we'll add a teal (cyan) background.

makeWallPictures :: (Location, CellBoundaries) -> [Picture]

makeWallPictures ((x,y), CellBoundaries up right down left) =

let coords = conversion (x,y)

tl = cellTopLeft coords

tr = cellTopRight coords

bl = cellBottomLeft coords

br = cellBottomRight coords

stunBackground = if (x, y) `elem` stunCells world

then Color cyan (Polygon [tl, tr, br, bl])

else Blank

in [ stunBackground

… (wall edges)

]

And that's all! We can now tell what is happening in our game, so we're done with these features! You can take a look at this commit for all the changes we made to the drawing!

Conclusion

Now our game is a lot more interesting. There's a lot of tuning we can do with various parameters to make our levels more and more challenging. For instance, how many enemies are appropriate per level? What's a good stun delay timer? If we're going to experiment with all these, we'll want to be able to load full game states from the file system. We've got a good start with serializing mazes. But now we want to include information about the player, enemies, and timers.

So next week, we'll go further and serialize our complete game state. We'll also look at how we parameterize the application and fix all the "magic numbers". This will add new options for customization and flexibility. It will also enable us to build a full game that gets harder as it goes on, and allow saving and loading of your progress.

Throughout this article (and series), we've tried to use a clean, precise development process. Read our Haskell Brain series to learn more about this! You can also download our Beginners Checklist if you are less familiar with the language!

Smarter Enemies with BFS!

Last week we added enemies to our maze. These little squares will rove around the maze, and if they touch our character, we have to restart the maze. We made it so that these enemies moved around at random. Thus they're not particularly efficient at getting to us.

This week, we're going to make them much more dangerous! They'll use the breadth-first search algorithm to find the shortest path towards our player. We'll use three kinds of data structures from the containers package. So if you want to get a little more familiar with that package, this article is a great start! Take a look at our GitHub repository to see the full code! Look at the part-6 branch for this article!

We'll also make use of the state monad throughout. If you're still a little uncomfortable with monads, make sure to read our series on them! It'll help you with the basics. By the end you'll know about the state monad and how to use it in conjunction with other monads! If you're new to Haskell, you should also take a look at our Beginners Checklist!

BFS Overview

The goal of our breadth-first search will be to return the shortest path from one location to another. We'll be writing this function:

getShortestPath :: Maze -> Location -> Location -> [Location]

It will return all the locations on the path from the initial location to the target location (not including the initial location itself). If there's no possible path, we'll return the empty list. In practice, we'll usually only want to take the first element of this list. But there are use cases for having the whole path that we'll explore later. Here's a basic outline of our algorithm:

1. Keep a queue of locations that we'll visit in the future. At the start, this should contain our starting location.

2. Dequeue the first location (if the queue is empty, return the empty list). Mark this location as visited. If it is our target location, skip to step 5.

3. Find all adjacent locations that we haven't visited/enqueued yet. Put them into the search queue. Mark the dequeued location as the "parent" location for each of these new locations.

4. Continue dequeuing elements and inserting their unvisited neighbors. Stop when we dequeue the target location.

5. Once we have the target location, use the "parents" map to create the full path from start to finish.
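The outline above can be sketched as a small pure function before we bring in the State monad. This is an illustration rather than the implementation built below: it works over any neighbor function (the name shortestPath is hypothetical), and it marks locations as seen when they are enqueued rather than when they are dequeued, so no location enters the queue twice.

```haskell
import qualified Data.Map as Map
import qualified Data.Sequence as Seq
import qualified Data.Set as Set
import Data.Sequence (ViewL (..), (|>))

-- Sketch of the five-step outline over any graph described by a
-- neighbor function. Returns the path without the start location,
-- or [] if the target is unreachable (or equal to the start).
shortestPath :: Ord a => (a -> [a]) -> a -> a -> [a]
shortestPath neighbors start target =
  -- Step 1: the queue starts with just the start location.
  go (Seq.singleton start) (Set.singleton start) Map.empty
  where
    go queue seen parents = case Seq.viewl queue of
      EmptyL -> []                                    -- Step 2: no path
      current :< rest
        | current == target -> unwind parents [target] -- jump to step 5
        | otherwise ->
            -- Step 3: enqueue unseen neighbors and record their parent.
            -- Marking them as seen now keeps duplicates out of the queue.
            let new = filter (`Set.notMember` seen) (neighbors current)
                queue' = foldl (|>) rest new
                seen' = foldr Set.insert seen new
                parents' = foldr (\n -> Map.insert n current) parents new
            in go queue' seen' parents'               -- Step 4: keep going
    -- Step 5: walk back through the parents map. The start location is
    -- the only one without a parent, and it is dropped from the result.
    unwind parents path@(p : rest) = case Map.lookup p parents of
      Nothing -> rest
      Just parent -> unwind parents (parent : path)
    unwind _ [] = []
```

On an open 3x3 grid, shortestPath returns a 4-step path from (0, 0) to (2, 2), ending at the target.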

Data Structures Galore

Now let's start getting into the details. As we'll see, there are several different data structures we'll need for this! We'll do some of the same things we did for depth-first search (the first time around). We'll make a type to represent our current algorithm state. Then we'll make a recursive, stateful function over that type. In this case, we'll want three items in our search state.

- A set of "visited" cells

- A queue for cells we are waiting to visit

- A mapping of cells to their "parent"

And for all three of these, we'll want different structures. Data.Set will suffice for our visited cells. Then we'll want Data.Map for the parent map. For the search queue though, we'll use something that we haven't used on this blog before: Data.Sequence. This structure allows us to add to the back and remove from the front quickly. Here's our search state type:

data BFSState = BFSState

{ bfsSearchQueue :: Seq.Seq Location

, bfsVisitedLocations :: Set.Set Location

, bfsParents :: Map.Map Location Location

}

Before we get carried away with our search function, let's fill in our wrapper function. This will initialize the state with the starting location. Then it will call evalState to get the result:

getShortestPath :: Maze -> Location -> Location -> [Location]

getShortestPath maze initialLocation targetLocation = evalState

(bfs maze initialLocation targetLocation)

(BFSState

(Seq.singleton initialLocation)

(Set.singleton initialLocation)

Map.empty)

bfs :: Maze -> Location -> Location -> State BFSState [Location]

bfs = ...

Making Our Search

As with depth-first search, we'll start by retrieving the current state. Then we'll ask if the search queue is empty. If it is, this means we've exhausted all possibilities, and should return the empty list. This indicates no path is possible:

bfs :: Maze -> Location -> Location -> State BFSState [Location]

bfs maze initialLocation targetLocation = do

BFSState searchQueue visitedSet parentsMap <- get

if Seq.null searchQueue

then return []

else do

...

Now let's consider the first element in our queue. If it's our target location, we're done. We'll write the exact helper for this part later. But first, let's get into the meat of the algorithm:

bfs maze initialLocation targetLocation = do

BFSState searchQueue visitedSet parentsMap <- get

if Seq.null searchQueue

then return []

else do

let nextLoc = Seq.index searchQueue 0

if nextLoc == targetLocation

then … -- Get results

else do

...

Now our code will actually look imperative, to match the algorithm description above:

- Get adjacent cells and filter based on those we haven't visited

- Insert the current cell into the visited set

- Insert the new cells at the end of the search queue, but drop the current (first) element from the queue as well.

- Mark the current cell as the "parent" for each of these new cells. The new cell should be the "key", the current should be the value.

There are a couple of tricky folds involved here, but nothing too bad. Here's what it looks like:

bfs :: Maze -> Location -> Location -> State BFSState [Location]

bfs maze initialLocation targetLocation = do

BFSState searchQueue visitedSet parentsMap <- get

...

if nextLoc == targetLocation

then ...

else do

-- Step 1 (Find next locations)

let adjacentCells = getAdjacentLocations maze nextLoc

unvisitedNextCells = filter

(\loc -> not (Set.member loc visitedSet))

adjacentCells

-- Step 2 (Mark as visited)

newVisitedSet = Set.insert nextLoc visitedSet

-- Step 3 (Enqueue new elements)

newSearchQueue = foldr

(flip (Seq.|>))

-- (Notice we remove the first element!)

(Seq.drop 1 searchQueue)

unvisitedNextCells

-- Step 4

newParentsMap = foldr

(\loc -> Map.insert loc nextLoc)

parentsMap

unvisitedNextCells

Once we're done, we'll insert these new elements into our search state. Then we'll make a recursive call to bfs to continue the process!

bfs :: Maze -> Location -> Location -> State BFSState [Location]

bfs maze initialLocation targetLocation = do

BFSState searchQueue visitedSet parentsMap <- get

...

if nextLoc == targetLocation

then ...

else do

-- Step 1

let adjacentCells = getAdjacentLocations maze nextLoc

unvisitedNextCells = filter

(\loc -> not (Set.member loc visitedSet))

adjacentCells

-- Step 2

newVisitedSet = Set.insert nextLoc visitedSet

-- Step 3

newSearchQueue = foldr

(flip (Seq.|>))

-- (Notice we remove the first element!)

(Seq.drop 1 searchQueue)

unvisitedNextCells

-- Step 4

newParentsMap = foldr

(\loc -> Map.insert loc nextLoc)

parentsMap

unvisitedNextCells

-- Replace the state and make recursive call!

put (BFSState newSearchQueue newVisitedSet newParentsMap)

bfs maze initialLocation targetLocation

For the last part of this, we need to consider what happens when we hit our target. In this case, we'll "unwind" the path using the parents map. We'll start with the target location in our path list. Then we'll look up its parent and add it to the front of the list. Then we'll look up the parent's parent, and so on. We do this with recursion, of course.

bfs :: Maze -> Location -> Location -> State BFSState [Location]

bfs maze initialLocation targetLocation = do

BFSState searchQueue visitedSet parentsMap <- get

if Seq.null searchQueue

then return []

else do

let nextLoc = Seq.index searchQueue 0

if nextLoc == targetLocation

then return (unwindPath parentsMap [targetLocation])

...

where

unwindPath parentsMap currentPath =

case Map.lookup (head currentPath) parentsMap of

Nothing -> tail currentPath

Just parent -> unwindPath parentsMap (parent : currentPath)

The only cell we should find without a parent is the initial cell. It starts out in the visited set, so it never gets enqueued as anyone's neighbor. So when we hit this case, we return the tail of the current path (removing the initial cell from it). And that's all!
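To see the unwinding step in isolation, here is a version of unwindPath applied to a hand-written parents map. It is a sketch that replaces head and tail with a pattern match, so an empty list is handled instead of crashing. The map contents are made up for illustration.

```haskell
import qualified Data.Map as Map

type Location = (Int, Int)

-- Start from [target] and keep prepending parents until we reach a cell
-- with no parent (the initial cell), which is then dropped.
unwindPath :: Map.Map Location Location -> [Location] -> [Location]
unwindPath _ [] = []
unwindPath parentsMap path@(current : rest) =
  case Map.lookup current parentsMap of
    Nothing -> rest
    Just parent -> unwindPath parentsMap (parent : path)

-- Hypothetical search result for the route (0,0) -> (1,0) -> (1,1) -> (2,1).
exampleParents :: Map.Map Location Location
exampleParents = Map.fromList
  [ ((1, 0), (0, 0))
  , ((1, 1), (1, 0))
  , ((2, 1), (1, 1))
  , ((0, 1), (0, 0)) -- a visited dead end that is not on the route
  ]
```

Here unwindPath exampleParents [(2, 1)] returns [(1, 0), (1, 1), (2, 1)], and its first element, (1, 0), is the enemy's next step. The dead-end entry never appears because the walk only follows parents backwards from the target.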

Modifying the Game

All we have to do to wrap things up is call this function instead of our random function for the enemy movements. We'll keep things a little fresh by having them make a random move about 20% of the time. (We'll make this a tunable parameter in the future). Here's the bit where we keep some randomness, like what we have now:

updateEnemy :: Maze -> Location -> Enemy -> State StdGen Enemy

updateEnemy maze playerLocation e@(Enemy location) =

if (null potentialLocs)

then return e

else do

gen <- get

let (randomMoveRoll, gen') = randomR (1 :: Int, 5) gen

let (newLocation, newGen) = if randomMoveRoll == 1

then

let (randomIndex, newGen) =

randomR (0, (length potentialLocs) - 1) gen'

in (potentialLocs !! randomIndex, newGen)

...

where

potentialLocs = getAdjacentLocations maze location

And in the rest of the cases, we'll call our getShortestPath function!

updateEnemy :: Maze -> Location -> Enemy -> State StdGen Enemy

updateEnemy maze playerLocation e@(Enemy location) =

if (null potentialLocs)

then return e

else do

gen <- get

let (randomMoveRoll, gen') = randomR (1 :: Int, 5) gen

let (newLocation, newGen) = if randomMoveRoll == 1

then

let (randomIndex, newGen) =

randomR (0, (length potentialLocs) - 1) gen'

in (potentialLocs !! randomIndex, newGen)

else

let shortestPath =

getShortestPath maze location playerLocation

in (if null shortestPath then location

else head shortestPath, gen')

put newGen

return (Enemy newLocation)

where

potentialLocs = getAdjacentLocations maze location

And now the enemies will chase us around! They're hard to avoid!
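One way to make this movement rule testable is to keep the random rolls outside the decision itself. In this sketch, chooseEnemyMove is a hypothetical helper. The caller supplies a roll from 1 to 5 and an index for the random move (both drawn from StdGen, as in updateEnemy above), along with the adjacent cells and the result of getShortestPath.

```haskell
type Location = (Int, Int)

-- Hypothetical pure core of updateEnemy: a roll of 1 (about 20% of rolls
-- from 1 to 5) picks a random adjacent cell; otherwise follow the path.
chooseEnemyMove :: Int -> Int -> [Location] -> [Location] -> Location -> Location
chooseEnemyMove roll randomIndex potentialLocs shortestPath current
  | null potentialLocs = current          -- boxed in: stay put
  | roll == 1 = potentialLocs !! (randomIndex `mod` length potentialLocs)
  | otherwise = case shortestPath of
      [] -> current                       -- no path to the player: stay put
      (next : _) -> next
```

Because the function is pure, each branch can be exercised directly with fixed rolls instead of searching for a seed that produces them.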

Conclusion

With our enemies now being more intelligent, we'll want to allow our player to fight back against them! Next week, we'll create a mechanism to stun the ghosts to give ourselves a better chance! After that, we'll look at some other ways to power up our player!

If you've never programmed in Haskell, hopefully this series is giving you some good ideas of the possibilities! We have a lot of resources for beginners! Check out our Beginners Checklist as well as our Liftoff Series!

Running From Enemies!

We've spent a few weeks now refactoring a few things in our game. We made it more performant and examined some related concepts. This week, we're going to get back to adding new features to the game! We'll add some enemies, represented by little squares, to rove around our maze! If they touch our player, we'll have to re-start the level!

In the next couple weeks, we'll make these enemies smarter by giving them a better search strategy. Then later, we'll give ourselves the ability to fight back against the enemies. So there will be interesting trade-offs in features.

Remember we have a GitHub repository for this project! You can find all the code for this part in the part-5 branch! For some other interesting Haskell project ideas, download our Production Checklist!

Organizing

Let's remind ourselves of our process for adding new features. Remember that at the code level, our game has a few main elements:

- The World state type

- The update function

- The drawing function

- The event handler

So to change our game, we should update each of these in turn. Let's start with the changes to our world type. First, it's now possible for us to "lose" the game. So we'll need to expand our GameResult type:

data GameResult = GameInProgress | GameWon | GameLost

Now we need to store the enemies. We'll add more data about our enemies as the game develops. So let's make a formal data type and store a list of them in our World. But for right now, all we need to know about them is their current location:

data Enemy = Enemy

{ enemyLocation :: Location

}

data World = World

{ …

, worldEnemies :: [Enemy]

}

Updating The Game

Now that our game contains information about the enemies, let's determine what they do! Enemies won't respond to any input events from the player. Instead, they'll update at a regular interval via our updateFunc. Our first concern will be the game end condition. If the player's current location matches one of the enemies' locations, we've "lost".

updateFunc :: Float -> World -> World

updateFunc _ w

-- Game Win Condition

| playerLocation w == endLocation w = w { worldResult = GameWon }

-- Game Loss Condition

| playerLocation w `elem` (enemyLocation <$> worldEnemies w) =

w { worldResult = GameLost }

| otherwise = ...

Now we'll need a function that updates the location for an individual enemy. We'll have the enemies move at random. This means we'll need to manipulate the random generator in our world. Let's make this function stateful over the random generator.

updateEnemy :: Maze -> Enemy -> State StdGen Enemy

...

We'll want to examine the enemy's location and find all the possible locations it can move to. Then we'll select from them at random. This will look a lot like the logic we used when generating our random mazes. It would also be a great spot to use prisms if we were generating them for our types! We might explore this possibility later on in this series.

updateEnemy :: Maze -> Enemy -> State StdGen Enemy

updateEnemy maze e@(Enemy location) = if (null potentialLocs)

then return e

else do

gen <- get

let (randomIndex, newGen) = randomR

(0, (length potentialLocs) - 1)

gen

newLocation = potentialLocs !! randomIndex

put newGen

return (Enemy newLocation)

where

bounds = maze Array.! location

maybeUpLoc = case upBoundary bounds of

(AdjacentCell loc) -> Just loc

_ -> Nothing

maybeRightLoc = case rightBoundary bounds of

(AdjacentCell loc) -> Just loc

_ -> Nothing

maybeDownLoc = case downBoundary bounds of

(AdjacentCell loc) -> Just loc

_ -> Nothing

maybeLeftLoc = case leftBoundary bounds of

(AdjacentCell loc) -> Just loc

_ -> Nothing

potentialLocs = catMaybes

[maybeUpLoc, maybeRightLoc, maybeDownLoc, maybeLeftLoc]

Now that we have this function, we can incorporate it into our main update function. It's a little tricky though. We have to use the sequence function to combine all these stateful actions together. This will also give us our final list of enemies. Then we can insert the new generator and the new enemies into our state!

updateFunc _ w

...

| otherwise =

w { worldRandomGenerator = newGen, worldEnemies = newEnemies}

where

(newEnemies, newGen) = runState

(sequence (updateEnemy (worldBoundaries w) <$> worldEnemies w))

(worldRandomGenerator w)

Drawing our Enemies

Now we need to draw our enemies on the board. Most of the information is already there. We have a conversion function to get the drawing coordinates. Then we'll derive the corner points of the square within that cell, and draw an orange square.

drawingFunc (xOffset, yOffset) cellSize world

…

| otherwise = Pictures

[..., Pictures (enemyPic <$> worldEnemies world)]

where

...

enemyPic :: Enemy -> Picture

enemyPic (Enemy loc) =

let (centerX, centerY) = cellCenter $ conversion loc

tl = (centerX - 5, centerY + 5)

tr = (centerX + 5, centerY + 5)

br = (centerX + 5, centerY - 5)

bl = (centerX - 5, centerY - 5)

in Color orange (Polygon [tl, tr, br, bl])

One extra part of updating the drawing function is that we'll have to draw a "losing" message. This will be a lot like the winning message.

drawingFunc :: (Float, Float) -> Float -> World -> Picture

drawingFunc (xOffset, yOffset) cellSize world

...

| worldResult world == GameLost =

Translate (-275) 0 $ Scale 0.12 0.25

(Text "Oh no! You've lost! Press enter to restart this maze!")

...

Odds and Ends

Two little things remain. First, we want a function to randomize the locations of the enemies. We'll use this to decide their positions at the beginning and when we restart. In the future we may add a power-up that allows the player to run this randomizer. As with other random functions, we'll make this function stateful over the StdGen element.

generateRandomLocation :: (Int, Int) -> State StdGen Location

generateRandomLocation (numCols, numRows) = do

gen <- get

let (randomCol, gen') = randomR (0, numCols - 1) gen

(randomRow, gen'') = randomR (0, numRows - 1) gen'

put gen''

return (randomCol, randomRow)

As before, we can sequence these stateful actions together. In the case of initializing the board, we'll use replicateM with the number of enemies. Then we can use the locations to make our enemies, and then place the final generator back into our world.

main = do

gen <- getStdGen

let (maze, gen') = generateRandomMaze gen (25, 25)

numEnemies = 4

(randomLocations, gen'') = runState

(replicateM numEnemies (generateRandomLocation (25,25)))

gen'

enemies = Enemy <$> randomLocations

initialWorld = World (0, 0) (0,0) (24,24)

maze GameInProgress gen'' enemies

play ...

The second thing we'll want to do is update the event handler so that it restarts the game when we lose. We'll have similar code to when we win. However, we'll stick with the original maze rather than re-randomizing.

inputHandler :: Event -> World -> World

inputHandler event w

...

| worldResult w == GameLost = case event of

(EventKey (SpecialKey KeyEnter) Down _ _) ->

let (newLocations, gen') = runState

(replicateM (length (worldEnemies w))

(generateRandomLocation (25, 25)))

(worldRandomGenerator w)

in World (0,0) (0,0) (24, 24)

(worldBoundaries w) GameInProgress gen'

(Enemy <$> newLocations)

_ -> w

...

(Note we also have to update the game-winning code!) And now we have enemies roving around our maze. Awesome!
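The replicateM pattern is easy to try out in isolation. Because StdGen comes from the random package, which doesn't ship with GHC, this sketch swaps in a hypothetical toy generator, ToyGen (a linear congruential generator chosen only for illustration, assuming a 64-bit Int). Otherwise it mirrors generateRandomLocation: each call reads the generator, draws a column and a row, and stores the advanced generator, so replicateM threads a single generator through every draw.

```haskell
import Control.Monad (replicateM)
import Control.Monad.State (State, get, put, runState)

type Location = (Int, Int)

-- Hypothetical stand-in for StdGen. Not suitable for real randomness.
newtype ToyGen = ToyGen Int deriving (Show, Eq)

-- Returns a value in [lo, hi] and the advanced generator.
-- Assumes a 64-bit Int so the multiplication doesn't overflow.
toyRandomR :: (Int, Int) -> ToyGen -> (Int, ToyGen)
toyRandomR (lo, hi) (ToyGen s) =
  let s' = (s * 1103515245 + 12345) `mod` 2147483648
  in (lo + s' `mod` (hi - lo + 1), ToyGen s')

-- Same shape as generateRandomLocation above, but over ToyGen.
toyRandomLocation :: (Int, Int) -> State ToyGen Location
toyRandomLocation (numCols, numRows) = do
  gen <- get
  let (col, gen') = toyRandomR (0, numCols - 1) gen
      (row, gen'') = toyRandomR (0, numRows - 1) gen'
  put gen''
  return (col, row)
```

For instance, runState (replicateM 4 (toyRandomLocation (25, 25))) (ToyGen 42) produces four in-bounds locations plus the final generator, which is exactly the pair we place back into the World.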

Conclusion

Next week we'll step up the difficulty of our game! We'll make the enemies much smarter so that they'll move towards us! This will give us an opportunity to learn about the breadth first search algorithm. There are a few nuances to writing this in Haskell. So don't miss it! The week after, we'll develop a way to stun the enemies. Remember you can follow this project on our Github! The code for this article is on the part-5 branch.

We've used monads, particularly the State monad, quite a bit in this series. Hopefully you can see now how important they are! But they don't have to be difficult to learn! Check out our series on Functional Structures to learn more! It starts with simpler structures like functors. But it will ultimately teach you all the common monads!

Quicksort with Haskell!

Last week we referenced the ST monad and went into a little bit of depth with how it enables mutable arrays. It provides an alternative to the IO monad that gives us mutable data without side effects. This week, we're going to take a little bit of a break from adding features to our Maze game. We'll look at a specific example where mutable data can allow different algorithms.

Let's consider the quicksort algorithm. We can do this "in place", mutating an input array. But immutable data in Haskell makes it difficult to implement this approach. We'll examine one approach using normal, immutable lists. Then we'll see how we can use a more common quicksort algorithm using ST. At the end of the day, there are still difficulties with making this work the way we'd like. But it's a useful experiment to try nonetheless.

Still new to monads in Haskell? You should read our series on Monads and Functional Structures! It'll help you learn monads from the ground up, starting with simpler concepts like functors!

The ST Monad

Before we dive back into using arrays, let's take a quick second to grasp the purpose of the ST monad. My first attempt at using mutable arrays in the Maze game involved using an IOArray. This worked, but it caused generateRandomMaze to use the IO monad. You should be very wary of any action that changes your code from pure to using IO. The old version of the function couldn't have weird side effects like file system access! The new version could have any number of weird bugs present! Among other things, it makes it much harder to use and test this code.

In my specific case, there was a more pressing issue. It became impossible to run random generation from within the eventHandler. This meant I couldn't restart the game how I wanted. The handler is a pure function and can't use IO.

The ST monad provides precisely what we need. It allows us to run code that mutates values in place, without permitting the arbitrary side effects that IO allows. We can use the generic runST function to convert a computation in the ST monad to its pure result. This is similar to how we can use runState to run a State computation from a pure one.

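runST :: (forall s. ST s a) -> a

The s parameter is a little bit magic. We generally don't have to specify what it is. Because runST only accepts a computation that works for every possible s, no mutable reference created inside the computation can escape it or be touched from outside. So the mutation stays hidden, and the result is genuinely pure. Don't worry about it too much.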
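To make this concrete, here's a small sketch of runST at work. It isn't from the game code, and the function name sumST is our own invention for illustration. It keeps a running total in a mutable STRef, yet the function that wraps it is completely pure:

```haskell
import Control.Monad.ST (runST)
import Data.STRef (modifySTRef', newSTRef, readSTRef)

-- Hypothetical example: sum a list using a mutable accumulator.
-- The STRef is created and consumed inside runST, so it never escapes.
sumST :: [Int] -> Int
sumST xs = runST $ do
  total <- newSTRef 0
  mapM_ (\x -> modifySTRef' total (+ x)) xs
  readSTRef total
```

Calling sumST [1..10] gives 55, and from the outside it behaves like any other pure function. If we tried to return the STRef itself from runST, the s parameter would make the program fail to type-check.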

There's another function runSTArray. This does the same thing, except it works with mutable arrays:

runSTArray :: (forall s. ST s (STArray s i e)) -> Array i e

This allows us to use STArray instead of IOArray as our mutable data type. Later in this article, we'll use this type to make our "in-place" quicksort algorithm. But first, let's look at a simpler version of this algorithm.
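Here's a similarly small sketch of runSTArray, again with a made-up function name (squares). We allocate a mutable array, fill it in place, and hand it back. runSTArray then gives us the finished array as an ordinary immutable Array:

```haskell
import Control.Monad (forM_)
import Data.Array (Array, elems)
import Data.Array.ST (newArray, runSTArray, writeArray)

-- Hypothetical example: build an array of squares by writing each slot in place.
squares :: Int -> Array Int Int
squares n = runSTArray $ do
  arr <- newArray (0, n - 1) 0          -- mutable STArray, all zeros
  forM_ [0 .. n - 1] $ \i -> writeArray arr i (i * i)
  return arr                            -- returned as an immutable Array
```

For instance, elems (squares 5) is [0,1,4,9,16].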

Slow Quicksort

Learn You a Haskell For Great Good presents a short take on the quicksort algorithm. It demonstrates the elegance with which we can express recursive solutions.

quicksort1 :: (Ord a) => [a] -> [a]
quicksort1 [] = []
quicksort1 (x:xs) =
  let smallerSorted = quicksort1 [a | a <- xs, a <= x]
      biggerSorted = quicksort1 [a | a <- xs, a > x]
  in  smallerSorted ++ [x] ++ biggerSorted

This looks very nice! It captures the general idea of quicksort. We take the first element as our pivot. We divide the remaining list into the elements less than or equal to the pivot and the elements greater than it. Then we recursively sort each of these sub-lists, and combine them with the pivot in the middle.

However, each new list we make takes extra memory. So we are copying part of the list at each recursive step. This means we will definitely use at least O(n) memory for this algorithm.

We can also note the way this algorithm chooses its pivot. It always selects the first element. This is quite inefficient on certain inputs. On sorted or reverse-sorted lists, every split leaves one side empty, so the recursion goes n levels deep and the algorithm takes O(n^2) time. To get good expected performance, we'd want to choose the pivot index at random. But then we would need an extra argument of type StdGen, so we'll ignore it for this article.
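We can see the problem with a quick experiment. The helper below is hypothetical and not part of the game. It splits the list exactly the way quicksort1 does, but instead of sorting, it measures how deep the recursion goes:

```haskell
-- Hypothetical helper: how deep does quicksort1's recursion go on this input?
-- It partitions exactly like quicksort1 but returns the depth instead.
pivotDepth :: Ord a => [a] -> Int
pivotDepth [] = 0
pivotDepth (x:xs) =
  1 + max (pivotDepth [a | a <- xs, a <= x])
          (pivotDepth [a | a <- xs, a > x])
```

On the sorted list [1..100], pivotDepth returns 100: each level peels off a single element. On a well-mixed list like [4,2,6,1,3,5,7], it returns just 3.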

It's possible, of course, to do quicksort "in place", without copying any part of the array! But this requires mutable memory. To get an idea of what this algorithm looks like, we'll implement it in Java first. Mutable data is more natural in Java, so this code will be easier to follow.

In-Place Quicksort (Java)

The quicksort algorithm is recursive, but we're going to handle the recursion in a helper. The helper will take two extra arguments: the int values for the "start" and "end" of this quicksort section. The goal of quicksortHelper is to sort just this section. As a stylistic matter, I use "end" to mean one index past the point we're sorting to. So our main quicksort function will call the helper with 0 and arr.length.

public static void quicksort(int[] arr) {
    quicksortHelper(arr, 0, arr.length);
}

public static void quicksortHelper(int[] arr, int start, int end) {
    ...
}

Before we dive into the rest of that function though, let's design two smaller helpers. The first is very simple. It will swap two elements within the array:

public static void swap(int[] arr, int i, int j) {
    int temp = arr[i];
    arr[i] = arr[j];
    arr[j] = temp;
}

The next helper will contain the core of the algorithm. This will be our partition function. It's responsible for choosing a pivot (again, we'll use the first element for simplicity). Then it rearranges the section so that every element less than or equal to the pivot comes first, followed by the pivot itself, followed by the larger elements. It returns the pivot's final index:

public static int partition(int[] arr, int start, int end) {
    int pivotElement = arr[start];
    // pivotIndex is the next slot for an element <= pivotElement.
    int pivotIndex = start + 1;
    for (int i = start + 1; i < end; ++i) {
        if (arr[i] <= pivotElement) {
            swap(arr, i, pivotIndex);
            ++pivotIndex;
        }
    }
    // Move the pivot to sit just after the last small element.
    swap(arr, start, pivotIndex - 1);
    return pivotIndex - 1;
}

Now our quicksort helper is easy! It will partition the array, and then make recursive calls on the sub-parts! Notice as well the base case:

public static void quicksortHelper(int[] arr, int start, int end) {
    // Sections with zero or one element are already sorted.
    if (start + 1 >= end) {
        return;
    }
    int pivotIndex = partition(arr, start, end);
    quicksortHelper(arr, start, pivotIndex);
    quicksortHelper(arr, pivotIndex + 1, end);
}

Since we did everything in place, we didn't allocate any new arrays! Each call only adds O(1) extra memory for its temporary values. Since the stack depth is O(log n) on average, that is the expected asymptotic memory usage for this algorithm. (On already-sorted input, the depth, and therefore the memory, degrades to O(n).)

In-Place Quicksort (Haskell)

Now that we're familiar with the in-place algorithm, let's see what it looks like in Haskell. We want to do this with STArray. But we'll still write a function with pure input and output. Unfortunately, this means we'll end up using O(n) memory anyway. The thaw function must copy the array to make a mutable version of it. However, the rest of our operations will work in-place on the mutable array. We'll follow the same patterns as our Java code! Let's start simple and write our swap function!

swap :: STArray s Int a -> Int -> Int -> ST s ()
swap arr i j = do
  elem1 <- readArray arr i
  elem2 <- readArray arr j
  writeArray arr i elem2
  writeArray arr j elem1

Now let's write out our partition function. We're going to make it look as much like our Java version as possible. But it's a little tricky because we don't have for-loops! Let's deal with this problem head on by first designing a function to handle the loop.

The loop produces our value for the final pivot index, but we have to keep track of its current value as we go. This sounds like a job for the State monad! Since the loop also reads and writes the array, we'll use StateT Int on top of the ST monad. Our loop function will take the array and the pivotElement as parameters. Then it will take a final parameter for the i value we have in our partition loop in the Java version.

partitionLoop :: (Ord a)
  => STArray s Int a
  -> a
  -> Int
  -> StateT Int (ST s) ()
partitionLoop arr pivotElement i = do
  ...

We fill this with code comparable to the Java version. We read the current pivot index from the state and the element at index i. Then, if that element is no larger than the pivot element, we swap it into the pivot index slot and increment the pivot index:

partitionLoop :: (Ord a)
  => STArray s Int a
  -> a
  -> Int
  -> StateT Int (ST s) ()
partitionLoop arr pivotElement i = do
  pivotIndex <- get
  thisElement <- lift $ readArray arr i
  when (thisElement <= pivotElement) $ do
    lift $ swap arr i pivotIndex
    put (pivotIndex + 1)

Now we incorporate this loop into our primary partition function after getting the pivot element. We'll use mapM to sequence the state actions together and pass that to execStateT. Then we'll return the final pivot (subtracting 1). Don't forget to swap the pivot into the middle of the array though!

partition :: (Ord a)
  => STArray s Int a
  -> Int
  -> Int
  -> ST s Int
partition arr start end = do
  pivotElement <- readArray arr start
  let pivotIndex_0 = start + 1
  finalPivotIndex <- execStateT
    (mapM (partitionLoop arr pivotElement) [(start+1)..(end-1)])
    pivotIndex_0
  swap arr start (finalPivotIndex - 1)
  return $ finalPivotIndex - 1

Now it's super easy to incorporate these into our final function!

quicksort2 :: (Ord a) => Array Int a -> Array Int a
quicksort2 inputArr = runSTArray $ do
  stArr <- thaw inputArr
  let (minIndex, maxIndex) = bounds inputArr
  quicksort2Helper minIndex (maxIndex + 1) stArr
  return stArr

quicksort2Helper :: (Ord a)
  => Int
  -> Int
  -> STArray s Int a
  -> ST s ()
quicksort2Helper start end stArr = when (start + 1 < end) $ do
  pivotIndex <- partition stArr start end
  quicksort2Helper start pivotIndex stArr
  quicksort2Helper (pivotIndex + 1) end stArr

This completes our algorithm! Notice again, though, that we use thaw, which copies the input array, and runSTArray, which hands the finished STArray back as an immutable Array. This means our main quicksort2 function can have pure inputs and outputs. But the copy made by thaw comes at the price of extra memory. It's still cool, though, that we can use mutable data from inside a pure function!
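For reference, here's how the pieces above might fit together into one compilable module. The function bodies follow the article. The import list is our own assumption: we take StateT, get, put and lift from the transformers package (mtl's Control.Monad.State provides the same names). We also use mapM_ instead of mapM, since we discard the loop's results anyway:

```haskell
import Control.Monad (when)
import Control.Monad.ST (ST)
import Control.Monad.Trans.Class (lift)
import Control.Monad.Trans.State.Strict (StateT, execStateT, get, put)
import Data.Array (Array, bounds, elems, listArray)
import Data.Array.ST (STArray, readArray, runSTArray, thaw, writeArray)

-- Exchange the elements at indices i and j in place.
swap :: STArray s Int a -> Int -> Int -> ST s ()
swap arr i j = do
  elem1 <- readArray arr i
  elem2 <- readArray arr j
  writeArray arr i elem2
  writeArray arr j elem1

-- One iteration of the Java for-loop. The state is the next slot
-- for an element that is <= the pivot.
partitionLoop :: Ord a => STArray s Int a -> a -> Int -> StateT Int (ST s) ()
partitionLoop arr pivotElement i = do
  pivotIndex <- get
  thisElement <- lift $ readArray arr i
  when (thisElement <= pivotElement) $ do
    lift $ swap arr i pivotIndex
    put (pivotIndex + 1)

-- Partition the section [start, end) around arr[start] and
-- return the pivot's final index.
partition :: Ord a => STArray s Int a -> Int -> Int -> ST s Int
partition arr start end = do
  pivotElement <- readArray arr start
  finalPivotIndex <- execStateT
    (mapM_ (partitionLoop arr pivotElement) [start + 1 .. end - 1])
    (start + 1)
  swap arr start (finalPivotIndex - 1)
  return (finalPivotIndex - 1)

-- Sort the section [start, end) in place.
quicksort2Helper :: Ord a => Int -> Int -> STArray s Int a -> ST s ()
quicksort2Helper start end stArr = when (start + 1 < end) $ do
  pivotIndex <- partition stArr start end
  quicksort2Helper start pivotIndex stArr
  quicksort2Helper (pivotIndex + 1) end stArr

-- Pure interface: thaw copies the input (O(n) memory), then all
-- sorting happens in place on the mutable copy.
quicksort2 :: Ord a => Array Int a -> Array Int a
quicksort2 inputArr = runSTArray $ do
  stArr <- thaw inputArr
  let (minIndex, maxIndex) = bounds inputArr
  quicksort2Helper minIndex (maxIndex + 1) stArr
  return stArr
```

To try it out, elems (quicksort2 (listArray (0, 6) [3,1,4,1,5,9,2])) should come out as [1,1,2,3,4,5,9].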

Conclusion

Since we have to copy the list, this particular example doesn't result in much improvement. In fact, when we benchmark these functions, we see that the first one actually performs quite a bit faster! But it's still a useful trick to understand how we can manipulate data "in-place" in Haskell. The ST monad allows us to do this in a "pure" way. If we're willing to accept impure code, the IO monad is also possible.

Next week we'll get back to game development! We'll add enemies to our game that will go around and try to destroy our player! As we add more and more features, we'll continue to see cool ways to learn about algorithms in Haskell. We'll also see new ways to architect the game code.

There are many other advanced Haskell programs you can write! Check out our Production Checklist for ideas!

Making Arrays Mutable!

Last week we walked through the process of refactoring our code to use Data.Array instead of Data.Map. But in the process, we introduced a big inefficiency! When we use the Array.// function to "update" our array, it has to create a completely new copy of the array! Map doesn't have to do this. It is a balanced tree, so an insert only rebuilds the O(log n) nodes on the path to the key and shares everything else with the old map.

So how can we fix this problem? The answer is to use the MArray interface, for mutable arrays. With mutable arrays, we can modify them in-place, without a copy. This results in code that is much more efficient. This week, we'll explore the modifications we can make to our code to allow this. You can see a quick summary of all the changes in this Git Commit.

Refactoring code can seem like a hard process, but it's actually quite easy with Haskell! In this article, we'll use the idea of "Compile Driven Development". With this process, we update our types and then let compiler errors show us all the changes we need. To learn more about this, and other Haskell paradigms, read our Haskell Brain series!

Mutable Arrays

To start with, let's address the seeming contradiction of having mutable data in an immutable language. We'll be working with the IOArray type in this article. An item of type IOArray acts like a pointer, similar to an IORef. And this pointer is, in fact, immutable! We can't make it point to a different spot in memory. But we can change the underlying data at this memory. But to do so, we'll need a monad that allows such side effects.
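A tiny sketch can make the pointer analogy concrete. The name aliasDemo is ours, not part of the maze code. Binding the same IOArray to a second name doesn't copy anything, so a write through one name is visible through the other:

```haskell
import Data.Array.IO (IOArray, newArray, readArray, writeArray)

-- Hypothetical example: two names for one IOArray share the same memory.
aliasDemo :: IO Int
aliasDemo = do
  arr <- newArray (0, 2) 0 :: IO (IOArray Int Int)
  let alias = arr          -- copies the reference, not the elements
  writeArray alias 1 42    -- mutate through the second name...
  readArray arr 1          -- ...and observe the change through the first
```

Running aliasDemo returns 42. The reference never changes, only the memory it points to.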

In our case, with IOArray, we'll use the IO monad. This is also possible with the ST monad. The specific interface functions we'll use work with either option, and they live in the MArray library (the Data.Array.MArray module). There are four in particular we're concerned with:

freeze :: (Ix i, MArray a e m, IArray b e) => a i e -> m (b i e)
thaw :: (Ix i, IArray a e, MArray b e m) => a i e -> m (b i e)
readArray :: (MArray a e m, Ix i) => a i e -> i -> m e
writeArray :: (MArray a e m, Ix i) => a i e -> i -> e -> m ()

The first two are conversion functions between normal, immutable arrays and mutable arrays. Freezing turns the array immutable, thawing makes it mutable. The second two are our replacements for Array.! and Array.// when reading and updating the array. There are a lot of typeclass constraints in these. So let's simplify them by substituting in the types we'll use:

freeze
  :: IOArray Location CellBoundaries
  -> IO (Array Location CellBoundaries)

thaw
  :: Array Location CellBoundaries
  -> IO (IOArray Location CellBoundaries)

readArray
  :: IOArray Location CellBoundaries
  -> Location
  -> IO CellBoundaries

writeArray
  :: IOArray Location CellBoundaries
  -> Location
  -> CellBoundaries
  -> IO ()

Obviously, we'll need to add the IO monad into our code at some point. Let's see how this works.
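Here's a minimal sketch that exercises all four functions on a simple Int array instead of our maze types (doubleAll is a hypothetical name). It thaws an immutable array, updates every cell in place with readArray and writeArray, and freezes the result:

```haskell
import Control.Monad (forM_)
import Data.Array (Array, bounds, elems, listArray)
import Data.Array.IO (IOArray, freeze, readArray, thaw, writeArray)

-- Hypothetical example: double every element of an immutable array
-- by working on a mutable copy.
doubleAll :: Array Int Int -> IO (Array Int Int)
doubleAll input = do
  -- thaw copies the immutable array into a fresh mutable IOArray.
  marr <- thaw input :: IO (IOArray Int Int)
  let (lo, hi) = bounds input
  forM_ [lo .. hi] $ \i -> do
    x <- readArray marr i       -- plays the role of Array.!
    writeArray marr i (2 * x)   -- plays the role of Array.//, but in place
  -- freeze copies the mutable contents back into an immutable Array.
  freeze marr
```

For example, doubleAll (listArray (0, 2) [1, 2, 3]) produces an array whose elems are [2,4,6], and the input array is unchanged. Note that both thaw and freeze copy the data. The in-place work happens in between, which is why we want to thaw once at the start of maze generation and freeze once at the end.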

Basic Changes

We won't need to change how the main World type uses the array. We'll only be changing how the SearchState stores it. So let's go ahead and change that type:

type MMaze = IA.IOArray Location CellBoundaries

data SearchState = SearchState
  { randomGen :: StdGen
  , locationStack :: [Location]
  , currentBoundaries :: MMaze
  , visitedCells :: Set.Set Location
  }

The first issue is that we should now pass a mutable array to our initial search state. We'll use the same initialBounds item, except we'll thaw it first to get a mutable version. Then we'll construct the state and pass it along to our search function. At the end, we'll freeze the resulting state. All this involves making our generation function live in the IO monad:

-- This did not have IO before!
generateRandomMaze :: StdGen -> (Int, Int) -> IO Maze
generateRandomMaze gen (numRows, numColumns) = do
  initialMutableBounds <- IA.thaw initialBounds
  let initialState = SearchState
        g2
        [(startX, startY)]
        initialMutableBounds
        Set.empty
  let finalBounds = currentBoundaries
        (execState dfsSearch initialState)
  IA.freeze finalBounds
  where
    (startX, g1) = …
    (startY, g2) = …

    initialBounds :: Maze
    initialBounds = …

This seems to "solve" our issues in this function and push all our errors into dfsSearch. But it should be obvious that we need a fundamental change there. We'll need the IO monad to make array updates. So the type signatures of all our search functions need to change. In particular, we'll combine the two monads as StateT SearchState IO. Any helpers that used to be "pure" will use IO instead.

dfsSearch :: StateT SearchState IO ()

findCandidates :: Location -> MMaze -> Set.Set Location
  -> IO [(Location, CellBoundaries, Location, CellBoundaries)]

chooseCandidate
  :: [(Location, CellBoundaries, Location, CellBoundaries)]
  -> StateT SearchState IO ()

Since dfsSearch now runs in StateT over IO, our generation function has to run it with execStateT instead of execState:

generateRandomMaze :: StdGen -> (Int, Int) -> IO Maze
generateRandomMaze gen (numRows, numColumns) = do
  initialMutableBounds <- IA.thaw initialBounds
  let initialState = SearchState
        g2
        [(startX, startY)]
        initialMutableBounds
        Set.empty
  finalBounds <- currentBoundaries <$>
    (execStateT dfsSearch initialState)
  IA.freeze finalBounds
  where
    …

The original dfsSearch definition is almost fine. But findCandidates is now a monadic function. So we'll have to extract its result instead of using let:

-- Previously
let candidateLocs = findCandidates currentLoc bounds visited

-- Now
candidateLocs <- lift $ findCandidates currentLoc bounds visited

The findCandidates function, though, will need a bit more re-tooling. The main thing is that we need readArray instead of Array.!. The first swap is easy:

findCandidates currentLocation@(x, y) bounds visited = do
  currentLocBounds <- IA.readArray bounds currentLocation
  ...

It's tempting to go ahead and read all the other values for upLoc, rightLoc, etc. right now:

findCandidates currentLocation@(x, y) bounds visited = do
  currentLocBounds <- IA.readArray bounds currentLocation
  let upLoc = (x, y + 1)
  upBounds <- IA.readArray bounds upLoc
  ...

We can't do that, though. Each IO action in a do block runs in order, so this readArray executes right away. For a cell in the top row, upLoc lies outside the array, and readArray, which checks its bounds, would throw an error. We don't want to access upLoc until we know the location is valid. So we need to do this within the case statement:

findCandidates currentLocation@(x, y) bounds visited = do
  currentLocBounds <- IA.readArray bounds currentLocation
  let upLoc = (x, y + 1)
  maybeUpCell <- case (upBoundary currentLocBounds,
                       Set.member upLoc visited) of
    (Wall, False) -> do
      upBounds <- IA.readArray bounds upLoc
      return $ Just
        ( upLoc
        , upBounds {downBoundary = AdjacentCell currentLocation}
        , currentLocation
        , currentLocBounds {upBoundary = AdjacentCell upLoc}
        )
    _ -> return Nothing

And then we'll do the same for the other directions and that's all for this function!
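Our maze code avoids out-of-range reads by checking the boundary type before reading. As a more general illustration, here's a hypothetical helper (readIfInBounds is not part of the maze code) that asks the array for its bounds with getBounds before reading:

```haskell
import Data.Array.IO (IOArray, getBounds, newListArray, readArray)
import Data.Ix (Ix, inRange)

-- Hypothetical helper: read an element only if the index is within the
-- array's bounds; otherwise return Nothing instead of throwing.
readIfInBounds :: Ix i => IOArray i e -> i -> IO (Maybe e)
readIfInBounds arr i = do
  bnds <- getBounds arr
  if inRange bnds i
    then Just <$> readArray arr i
    else return Nothing
```

With an array built by newListArray (0, 2) "abc", reading index 1 gives Just 'b', while index 3 gives Nothing. Calling readArray directly on index 3 would throw an exception instead.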

Choosing Candidates

We don't have to change too much about our chooseCandidate function! The primary change is to eliminate the line where we use Array.// to update the array. We'll replace this with two monadic lines using writeArray instead. Here's all that happens!

chooseCandidate candidates = do
  (SearchState gen currentLocs boundsMap visited) <- get
  ...
  lift $ IA.writeArray boundsMap chosenLocation newChosenBounds
  lift $ IA.writeArray boundsMap prevLocation newPrevBounds
  put (SearchState newGen (chosenLocation : currentLocs) boundsMap newVisited)

Aside from that, there's one small change in our runner to use the IO monad for generateRandomMaze. But after that, we're done!
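If the combination of StateT and lift is new to you, here's a stripped-down sketch with the same shape as chooseCandidate. The Walk type, markNext and runWalk are made-up names. The state carries a counter plus a handle to a mutable IOArray. Each step writes through the handle with a lifted IO action and then puts the updated state:

```haskell
import Control.Monad (replicateM_)
import Control.Monad.Trans.Class (lift)
import Control.Monad.Trans.State.Strict (StateT, execStateT, get, put)
import Data.Array.IO (IOArray, getElems, newArray, writeArray)

-- Hypothetical state: a step counter plus a handle to a mutable array.
data Walk = Walk Int (IOArray Int Int)

-- Same shape as chooseCandidate: get the state, run IO via lift, put the new state.
markNext :: StateT Walk IO ()
markNext = do
  Walk n arr <- get
  lift $ writeArray arr n (n * 10)
  put (Walk (n + 1) arr)     -- same handle; only the counter changes

-- Run three steps on a four-element array and read back its contents.
runWalk :: IO [Int]
runWalk = do
  arr <- newArray (0, 3) 0
  Walk _ finalArr <- execStateT (replicateM_ 3 markNext) (Walk 0 arr)
  getElems finalArr
```

Running runWalk produces [0,10,20,0]. Just as in chooseCandidate, we put the same array handle back into the state, and only the memory it points to changes.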

Conclusion

As mentioned above, you can see all these changes in this commit on our GitHub repository. The last two articles have illustrated that refactoring our Haskell code usually isn't hard. As long as we are methodical, we can pick the one thing that needs to change. Then we let the compiler errors direct us to everything we need to update as a result. I find refactoring in other languages (particularly Python and JavaScript) to be much more stressful. I'm often left wondering...have I actually covered everything? But in Haskell, there's a much better chance of getting everything right the first time!

To learn more about Compile Driven Development, read our Haskell Brain Series. If you're new to Haskell you can also read our Liftoff Series and download our Beginners Checklist!

Compile Driven Development In Action: Refactoring to Arrays!

In the last couple of weeks, we've been slowly building up our maze game. For instance, last week, we added the ability to serialize our mazes. But software development is never a perfect process! So it's not uncommon to revisit some past decisions and come up with better approaches. This week we're going to address a particular code wart in the random maze generation code.

Right now, we store our Maze as a mapping from Locations to CellBoundaries items. We do this using Data.Map. The Map.lookup function returns a Maybe result, since the key might not exist. But most of the time we accessed a location, we had good reason to believe that it would exist in the map. This led to several instances of the following idiom:

fromJust $ Map.lookup location boundsMap

Using a function like fromJust is a code smell, a sign that we could be doing something better. This week, we're going to change this structure so that it uses the Array type from Data.Array instead. An array's bounds describe exactly which locations exist, so indexing it directly expresses our assumption that every in-bounds location is present. We'll use "Compile Driven Development" to make this change. We won't need to hunt around our code to figure out what's wrong. We'll just make type changes and follow the compiler errors!
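Before we start, here's a small standalone sketch of the two array functions we'll lean on most. The names gridFromPairs and sampleGrid are hypothetical, and Char stands in for CellBoundaries. Array.array builds an array from association pairs plus inclusive (min, max) bounds, and Array.! then gives us a value directly, with no Maybe to unwrap:

```haskell
import qualified Data.Array as Array

type Location = (Int, Int)

-- Hypothetical sketch: build a grid from (Location, value) pairs.
-- Locations are (x, y), and bounds are inclusive, so the largest
-- Location is (numColumns - 1, numRows - 1).
gridFromPairs :: (Int, Int) -> [(Location, Char)] -> Array.Array Location Char
gridFromPairs (numRows, numColumns) =
  Array.array ((0, 0), (numColumns - 1, numRows - 1))

-- A sample grid that is 3 columns wide and 2 rows tall.
sampleGrid :: Array.Array Location Char
sampleGrid =
  gridFromPairs (2, 3) (zip [(x, y) | x <- [0 .. 2], y <- [0 .. 1]] "abcdef")
```

Here, sampleGrid Array.! (2, 1) is 'f', with no fromJust in sight. Keep in mind that Array.array doesn't insist on a value for every Location inside the bounds. If one is missing, the program only fails when we try to read that cell.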

To learn more about compile driven development and the mental part of Haskell, read our Haskell Brain series. It will help you think about the language in a different way. So it's a great tool for beginners!

Another good resource for this article is the GitHub repository for this project. The complete code for this part is on the part-3 branch. You can consult this commit to see all the changes we make in migrating to arrays.

Initial Changes

To start with, we should make sure our code uses the following type synonym for our maze type:

type Maze = Map.Map Location CellBoundaries

Now we can observe the power of type synonyms! We'll make a change in this one type, and that'll update all the instances in our code!

import qualified Data.Array as Array

type Maze = Array.Array Location CellBoundaries

Of course, this will cause a host of compiler errors! But most of these will be pretty simple to fix. We should be methodical and start at the top. The errors begin in our parsing code. In our mazeParser, we use Map.fromList to construct the final map. This requires the pairs of Location and CellBoundaries.

mazeParser :: (Int, Int) -> Parsec Void Text Maze
mazeParser (numRows, numColumns) = do
  …
  return $ Map.fromList (cellSpecToBounds <$> (concat rows))

The Array library has a similar function, Array.array. However, it also requires us to provide the bounds for the Array. That is, we need the "min" and "max" locations in a tuple. But these are easy, since we have the dimensions as an input!

mazeParser :: (Int, Int) -> Parsec Void Text Maze
mazeParser (numRows, numColumns) = do
  …
  return $ Array.array
    ((0, 0), (numColumns - 1, numRows - 1))
    (cellSpecToBounds <$> (concat rows))

Our next issue comes up in the dumpMaze function. We use Map.mapKeys to transpose the keys of our map. Then we use Map.toList to get the association list back out. Again, all we need to do is find the comparable functions for arrays to update these.

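To change the keys, we want the ixmap function. It plays the role of mapKeys, with one twist: its function maps each index of the new array back to the index in the old array that it should read from. Since swapping coordinates is its own inverse, the same lambda works here. As with Array.array, we need to provide an extra argument with the min and max bounds. These are the bounds of our original maze with the coordinates of each corner swapped. (Reusing Array.bounds maze directly only works for square mazes.)

transposedMap = Array.ixmap transposedBounds (\(x, y) -> (y, x)) maze
  where
    ((minX, minY), (maxX, maxY)) = Array.bounds maze
    transposedBounds = ((minY, minX), (maxY, maxX))

A few lines below, we can see the usage of Map.toList when grouping our pairs. All we need instead is Array.assocs: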

cellsByRow :: [[(Location, CellBoundaries)]]
cellsByRow = groupBy
  (\((r1, _), _) ((r2, _), _) -> r1 == r2)
  (Array.assocs transposedMap)

Updating Map Generation

That's all the changes for the basic parsing code. Now let's move on to the random generation code. This is where we have a lot of those yucky fromJust $ Map.lookup calls. We can now instead use the "bang" operator, Array.! to access those elements!

findCandidates currentLocation@(x, y) bounds visited =
  let currentLocBounds = bounds Array.! currentLocation
  ...

Of course, it's possible for an "index out of bounds" error to occur if we aren't careful! But our code should reflect the fact that we expect all these calls to work. After fixing the initial call, we need to change each directional component. Here's what the first update looks like:

findCandidates currentLocation@(x, y) bounds visited =
  let currentLocBounds = bounds Array.! currentLocation
      upLoc = (x, y + 1)
      maybeUpCell = case (upBoundary currentLocBounds,
                          Set.member upLoc visited) of
        (Wall, False) -> Just
          ( upLoc
          , (bounds Array.! upLoc) {downBoundary =
              AdjacentCell currentLocation}
          , currentLocation
          , currentLocBounds {upBoundary =
              AdjacentCell upLoc}
          )
        _ -> Nothing

We've replaced Map.lookup with Array.! in the second part of the resulting tuple. The other three directions need the same fix.

Then there's one last change in the random generation section! When we choose a new candidate, we currently need two calls to Map.insert. But arrays let us do this with one function call. The function is Array.//, and it takes a list of association updates. Here's what it looks like:

chooseCandidate candidates = do
  (SearchState gen currentLocs boundsMap visited) <- get
  ...
  -- Previously used Map.insert twice!!!
  let newBounds = boundsMap Array.//
        [(chosenLocation, newChosenBounds),
         (prevLocation, newPrevBounds)]
  let newVisited = Set.insert chosenLocation visited
  put (SearchState
    newGen
    (chosenLocation : currentLocs)
    newBounds
    newVisited)

Final Touch Ups

Now our final remaining issues are within the Runner code. But they're all similar fixes to what we saw in the parsing code.

In our sample boundariesMap, we once again replace Map.fromList with Array.array. Again, we add a parameter with the bounds of the array. Then, when drawing the pictures for our cells, we need to use Array.assocs instead of Map.toList.

For the final change, we need to update our input handler so that it accesses the array properly. This is our final instance of fromJust $ Map.lookup! We can replace it like so:

inputHandler :: Event -> World -> World
inputHandler event w = case event of
  ...
  where
    cellBounds = (worldBoundaries w) Array.! (playerLocation w)

And that's it! Now our code will compile and work as it did before!
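Before wrapping up, it's worth double-checking the dumpMaze transposition on a maze that isn't square. Here's a self-contained sketch with hypothetical names (transposeGrid, rowsOf) and Char cells standing in for CellBoundaries. It swaps the corners of the bounds, transposes with Array.ixmap, and groups Array.assocs by row, the same way cellsByRow does:

```haskell
import qualified Data.Array as Array
import Data.List (groupBy)

type Location = (Int, Int)

-- Hypothetical sketch: turn (x, y) keys into (y, x) keys.
-- The new bounds swap each corner's coordinates, so non-square grids work.
-- ixmap's function maps each NEW index back to the OLD index it reads.
transposeGrid :: Array.Array Location a -> Array.Array Location a
transposeGrid grid =
  let ((minX, minY), (maxX, maxY)) = Array.bounds grid
  in  Array.ixmap ((minY, minX), (maxY, maxX)) (\(y, x) -> (x, y)) grid

-- Group the transposed cells by their first coordinate (the row), y = 0 first.
rowsOf :: Array.Array Location a -> [[a]]
rowsOf grid =
  map (map snd)
      (groupBy (\((r1, _), _) ((r2, _), _) -> r1 == r2)
               (Array.assocs (transposeGrid grid)))
```

For a grid three columns wide and two rows tall, built with Array.listArray ((0, 0), (2, 1)) "abcdef", rowsOf returns ["ace", "bdf"]. The first list holds the y = 0 cells.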

Conclusion

There's a pretty big inefficiency with our new approach. Whereas Map.insert can give us an updated map in O(log n) time, the Array.// function isn't so nice. It has to create a complete copy of the array, and we run that function many times! How can we fix this? Next week, we'll find out! We'll use the Mutable Array interface to make it so that we can update our array in-place! This is super efficient, but it requires our code to be more monadic!
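To see the copying concretely, here's a tiny sketch with a plain Char array (original and updated are hypothetical names). Array.// applies a whole list of updates in one call, but it returns a brand new array and leaves the original untouched:

```haskell
import qualified Data.Array as Array

-- Hypothetical example arrays.
original :: Array.Array Int Char
original = Array.listArray (0, 4) "abcde"

-- (//) applies every update in the list and builds a fresh array;
-- 'original' itself is never modified.
updated :: Array.Array Int Char
updated = original Array.// [(1, 'X'), (3, 'Y')]
```

Array.elems updated is "aXcYe", while Array.elems original is still "abcde". Building updated means allocating a whole new array, which is exactly the cost we want to avoid.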

For some more ideas of cool projects you can do in Haskell, download our Production Checklist! It goes through a whole bunch of libraries on topics from database management to web servers!

Serializing Mazes!

Last week we improved our game so that we could solve additional random mazes after the first. This week, we'll step away from the randomness and look at how we can serialize our mazes. This will allow us to have a consistent and repeatable game. It will also enable us to save the game state later.

We'll be using the Megaparsec library as part of this article. If you aren't familiar with that (or parsing in Haskell more generally), check out our Parsing Series!

A Serialized Representation

The serialized representation of our maze doesn't need to be human readable. We aren't trying to create an ASCII art style representation. That said, it would be nice if it bore some semblance to the actual layout. There are a couple properties we'll aim for.

First, it would be good to have one character represent one cell in our maze. This dramatically simplifies any logic we'll use for serializing back and forth. Second, we should lay out the cell characters in a way that matches the maze's appearance. So for instance, the top left cell should be the first character in the first row of our string. Then, each row should appear on a separate line. This will make it easier to avoid silly errors when coming up with test cases.

So how can we serialize a single cell? We could observe that for each cell, we have sixteen possible states. There are 4 sides, and each side is either a wall or it is open. This suggests a hexadecimal representation.

Let's think of the four directions as being 4 bits, where if there is a wall, the bit is set to 1, and if it is open, the bit is set to 0. We'll order the bits as up-right-down-left, as we have in a couple other areas of our code. So we have the following example configurations:

- An open cell with no walls around it is 0000 = 0.

- A totally surrounded cell is 1111 = F.

- A cell with walls on its top and bottom would be 1010 = A.

- A cell with walls on its left and right would be 0101 = 5.

With that in mind, we can create a small 5x5 test maze with the following representation:

98CDF
1041C
34775
90AA4
32EB6

And this ought to look like so:

[Figure: the 5x5 test maze]

This serialization pattern lends itself to a couple helper functions we'll use later. The first, charToBoundsSet, will take a character and give us four booleans. These represent the presence of a wall in each direction. First, we convert the character to the hex integer. Then we use patterns about hex numbers and where the bits lie. For instance, the first bit is only set if the number is at least 8. The last bit is only set for odd numbers. This gives us the following:

import Data.Char (digitToInt)

charToBoundsSet :: Char -> (Bool, Bool, Bool, Bool)
charToBoundsSet c =
  ( num > 7
  , num `mod` 8 > 3
  , num `mod` 4 > 1
  , num `mod` 2 > 0
  )
  where
    -- Interpret the hex digit (0-9, a-f, A-F) as an Int from 0 to 15.
    num = digitToInt c

Then, we also want to go backwards. We want to take a CellBoundaries item and convert it to the proper character. We'll look at each direction. If it's an AdjacentCell, it contributes nothing to the final Int value. But otherwise, it contributes the hex digit value for its place. We add these up and convert to a char with intToDigit:

import Data.Char (intToDigit, toUpper)

cellToChar :: CellBoundaries -> Char
cellToChar bounds =
  let top = case upBoundary bounds of
        (AdjacentCell _) -> 0
        _ -> 8
      right = case rightBoundary bounds of
        (AdjacentCell _) -> 0
        _ -> 4
      down = case downBoundary bounds of
        (AdjacentCell _) -> 0
        _ -> 2
      left = case leftBoundary bounds of
        (AdjacentCell _) -> 0
        _ -> 1
  in  toUpper $ intToDigit (top + right + down + left)

We'll use both of these functions in the next couple parts.
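Since these two functions should be inverses, a quick round-trip check is handy. The sketch below includes a corrected copy of charToBoundsSet. The function boundsSetToChar is hypothetical: it works on the four booleans directly rather than on CellBoundaries, so the check runs without the game's types. It re-encodes the output of charToBoundsSet and confirms that all sixteen hex digits come back unchanged:

```haskell
import Data.Char (digitToInt, intToDigit, toUpper)

-- Wall flags in (top, right, bottom, left) order, decoded from one hex digit.
charToBoundsSet :: Char -> (Bool, Bool, Bool, Bool)
charToBoundsSet c =
  (num > 7, num `mod` 8 > 3, num `mod` 4 > 1, num `mod` 2 > 0)
  where
    num = digitToInt c

-- Hypothetical inverse on the flags themselves (cellToChar works on CellBoundaries).
boundsSetToChar :: (Bool, Bool, Bool, Bool) -> Char
boundsSetToChar (top, right, down, left) =
  toUpper (intToDigit (value 8 top + value 4 right + value 2 down + value 1 left))
  where
    value v isWall = if isWall then v else 0

-- Every hex digit should survive a decode/encode round trip.
allRoundTrip :: Bool
allRoundTrip =
  all (\c -> boundsSetToChar (charToBoundsSet c) == c) "0123456789ABCDEF"
```

allRoundTrip evaluates to True. For example, charToBoundsSet 'A' gives (True, False, True, False), a cell with walls on its top and bottom, just like our examples above.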

Serializing a Maze

Let's move on now to determining how we can take a maze and represent it as Text. For this part, let's first define a type synonym for our maze type:

type Maze = Map.Map Location CellBoundaries

dumpMaze :: Maze -> Text
dumpMaze = ...

First, let's imagine we have a single row worth of locations. We can convert that row to a string easily using our helper function from above:

dumpMaze = …
  where
    rowToString :: [(Location, CellBoundaries)] -> String
    rowToString = map (cellToChar . snd)

Now we'd like to take our maze map and group it into the different rows. The groupBy function seems appropriate. It groups elements of a list based on some predicate. We'll use a predicate that checks if the rows of two elements match. Then we'll apply that against the toList representation of our map:

rowsMatch :: (Location, CellBoundaries) -> (Location, CellBoundaries) -> Bool
rowsMatch ((_, y1), _) ((_, y2), _) = y1 == y2

We have a problem, though, because groupBy only groups elements that are next to each other in the list. The Map.toList function returns pairs in ascending key order, which for our (x, y) keys means one column at a time. We can fix this by first creating a transposed version of our map:

dumpMaze maze = …
  where
    transposedMap :: Maze
    transposedMap = Map.mapKeys (\(x, y) -> (y, x)) maze

Now we can go ahead and group our cells by row:

dumpMaze maze = …
  where
    transposedMap = …

    cellsByRow :: [[(Location, CellBoundaries)]]
    cellsByRow = groupBy (\((r1, _), _) ((r2, _), _) -> r1 == r2)
      (Map.toList transposedMap)

And now we can complete our serialization function! We get the string for each row, combine them with unlines, and then pack the result into a Text.

dumpMaze maze = pack $ (unlines . reverse) (rowToString <$> cellsByRow)
  where
    transposedMap = …
    cellsByRow = …
    rowToString = ...

As a last trick, note that we reverse the order of the rows. This way, the top row appears first, rather than the row corresponding to y = 0.
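Here's the same transpose, group, and reverse pipeline as a self-contained sketch. The name renderGrid is ours, and it uses Char cells in place of CellBoundaries so it runs without the game's types:

```haskell
import Data.List (groupBy)
import qualified Data.Map as Map

type Location = (Int, Int)

-- Hypothetical sketch of dumpMaze's pipeline. Keys are (x, y), and
-- y = 0 is the bottom row; the output lists the top row first.
renderGrid :: Map.Map Location Char -> String
renderGrid grid = unlines (reverse (map (map snd) cellsByRow))
  where
    -- Swap to (y, x) so Map.toList walks the grid one row at a time.
    transposed = Map.mapKeys (\(x, y) -> (y, x)) grid
    cellsByRow = groupBy (\((r1, _), _) ((r2, _), _) -> r1 == r2)
                         (Map.toList transposed)
```

For a 2x2 grid with 'a' and 'b' in the bottom row and 'c' and 'd' in the top row, renderGrid returns "cd\nab\n", so the top row comes first.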

Parsing a Maze

Now that we can dump our maze into a string, we also want to be able to go backwards. We should be able to take a properly formatted string and turn it into our Maze type. We'll do this using the Megaparsec library, as we discussed in part 4 of our series on parsing in Haskell. So we'll create a function in the Parsec monad that will take the dimensions of the maze as an input:

import Data.Text (Text)
import Data.Void (Void)
import qualified Text.Megaparsec as M
-- hexDigitChar and newline live in Text.Megaparsec.Char.
import qualified Text.Megaparsec.Char as M

mazeParser :: (Int, Int) -> M.Parsec Void Text Maze
mazeParser (numRows, numColumns) = ...

We want to parse the input into a format that will match each character up with its location in the (x, y) coordinate space of the grid. This means parsing one row at a time, and passing in a counter argument. Since the top row comes first in our serialized text, the counter should count down from numRows - 1. So we'll use a descending range like so:

mazeParser (numRows, numColumns) = do
  rows <- forM [(numRows - 1), (numRows - 2)..0] $ \i -> do
    ...

For each row, we'll parse the individual characters using M.hexDigitChar and match them up with a column index:

mazeParser (numRows, numColumns) = do
  rows <- forM [(numRows - 1), (numRows - 2)..0] $ \i -> do
    (columns :: [(Int, Char)]) <-
      forM [0..(numColumns - 1)] $ \j -> do
        c <- M.hexDigitChar
        return (j, c)
    ...

We conclude the parsing of a row by reading the newline character. Then we make the indices match the coordinates in discrete (x, y) space. Remember, the "column" should be the first item in our location.

mazeParser (numRows, numColumns) = do
  (rows :: [[(Location, Char)]]) <-
    forM [(numRows - 1), (numRows - 2)..0] $ \i -> do
      columns <- forM [0..(numColumns - 1)] $ \j -> do
        c <- M.hexDigitChar
        return (j, c)
      M.newline
      -- Column index first: a Location is (x, y)
      return $ map (\(col, char) -> ((col, i), char)) columns
  ...

Now we'll need a function to convert one of these (Location, Char) pairs into a (Location, CellBoundaries) pair. For the most part, we just want to apply our charToBoundsSet function to get the boolean values. Remember, these tell us whether walls are present:

mazeParser (numRows, numColumns) = do
  rows <- …
  where
    cellSpecToBounds :: (Location, Char) -> (Location, CellBoundaries)
    cellSpecToBounds (loc@(x, y), c) =
      let (topIsWall, rightIsWall, bottomIsWall, leftIsWall) =
            charToBoundsSet c
      ...

Now it's a matter of handling each direction case by case. In the True case, we just need a little logic to decide whether the boundary is a Wall or a WorldBoundary: it's a WorldBoundary exactly when the cell sits on the matching edge of the grid. Here's the implementation:

cellSpecToBounds :: (Location, Char) -> (Location, CellBoundaries)
cellSpecToBounds (loc@(x, y), c) =
  let (topIsWall, rightIsWall, bottomIsWall, leftIsWall) =
        charToBoundsSet c
      -- A wall on the grid's edge is a WorldBoundary; otherwise a plain Wall
      topCell = if topIsWall
        then if y + 1 == numRows
          then WorldBoundary
          else Wall
        else AdjacentCell (x, y + 1)
      rightCell = if rightIsWall
        then if x + 1 == numColumns
          then WorldBoundary
          else Wall
        else AdjacentCell (x + 1, y)
      bottomCell = if bottomIsWall
        then if y == 0
          then WorldBoundary
          else Wall
        else AdjacentCell (x, y - 1)
      leftCell = if leftIsWall
        then if x == 0
          then WorldBoundary
          else Wall
        else AdjacentCell (x - 1, y)
  in (loc, CellBoundaries topCell rightCell bottomCell leftCell)

And now we can complete our parsing function by applying this helper over all our rows!

mazeParser (numRows, numColumns) = do
  (rows :: [[(Location, Char)]]) <-
    forM [(numRows - 1), (numRows - 2)..0] $ \i -> do
      columns <- forM [0..(numColumns - 1)] $ \j -> do
        c <- M.hexDigitChar
        return (j, c)
      M.newline
      return $ map (\(col, char) -> ((col, i), char)) columns
  return $ Map.fromList (cellSpecToBounds <$> concat rows)
  where
    cellSpecToBounds = ...

Conclusion

This wraps up our latest part on serializing maze definitions. The next couple of parts will also be code-focused. We'll look at ways to improve our data structures and an alternate way of generating random mazes. But after those, we'll get back to adding some new game features, such as wandering enemies and combat!

To learn more about serialization, you should read our series on parsing. You can also download our Production Checklist for more ideas!

Declaring Victory! (And Starting Again!)

In last week's article, we used a neat little algorithm to generate random mazes for our game. This was cool, but nothing happens yet when we "finish" the maze! We'll change that this week: once we finish a maze, the game will let us start over with a freshly generated one! You can find all the code for this part on the part-2 branch on the Github repository for this project!

If you're a beginner to Haskell, hopefully this series is helping you learn simple ways to do cool things! If you're a little overwhelmed, try reading our Liftoff Series first!

Goals

Our objectives for this part are pretty simple. We want to make it so that when we reach the "end" location, we get a "victory" message and can restart the game by pressing a key. We'll get a new maze when we do this. There are a few components to this:

- Reaching the end should change a component of our World.

- When that component changes, we should display a message instead of the maze.

- Pressing "Enter" with the game in this state should start the game again with a new maze.

Sounds pretty simple! Let's get going!

Game Result

We'll start by adding a new type to represent the current "result" of our game. We'll add this piece of state to our World. As an extra little piece, we'll add a random generator to our state. We'll need this when we re-make the maze:

data GameResult = GameInProgress | GameWon
  deriving (Show, Eq)

data World = World
  { playerLocation :: Location
  , startLocation :: Location
  , endLocation :: Location
  , worldBoundaries :: Maze
  , worldResult :: GameResult
  , worldRandomGenerator :: StdGen
  }

Our generation step needs a couple of small tweaks. The function itself should now return its final generator as an extra result:

generateRandomMaze :: StdGen -> (Int, Int) -> (Maze, StdGen)
generateRandomMaze gen (numRows, numColumns) =
  (currentBoundaries finalState, randomGen finalState)
  where
    ...
    finalState = execState dfsSearch initialState

Then in our main function, we incorporate the new generator and game result into our World:

main = do
  gen <- getStdGen
  let (maze, gen') = generateRandomMaze gen (25, 25)
  play
    windowDisplay
    white
    20
    (World (0, 0) (0, 0) (24, 24) maze GameInProgress gen')
    ...

Now let's fix our update function so that it changes the game result when we reach the final location! We'll add a guard to check for this condition and update the result accordingly:

updateFunc :: Float -> World -> World
updateFunc _ w
  | playerLocation w == endLocation w = w { worldResult = GameWon }
  | otherwise = w

We could do this in our inputHandler instead, but it seems more idiomatic to let the update function handle it. If we used the input handler, we'd never see our token enter the final square; the game would jump straight to the victory screen, which would be a little odd. This way, there's at least a tiny gap.
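To see where that gap comes from, here is a small sketch that drops Gloss entirely. The cut-down World and the movePlayer function are hypothetical stand-ins (movePlayer plays the role of a key press in inputHandler); only the updateFunc guard mirrors the article's code.

```haskell
type Location = (Int, Int)

data GameResult = GameInProgress | GameWon
  deriving (Show, Eq)

-- Hypothetical cut-down World: only the fields the victory check reads.
data World = World
  { playerLocation :: Location
  , endLocation :: Location
  , worldResult :: GameResult
  } deriving (Show, Eq)

-- Same guard as updateFunc above: only the update step decides victory.
updateFunc :: Float -> World -> World
updateFunc _ w
  | playerLocation w == endLocation w = w { worldResult = GameWon }
  | otherwise = w

-- Hypothetical stand-in for a movement key press: it moves the token
-- but never compares the new location against the end location.
movePlayer :: Location -> World -> World
movePlayer loc w = w { playerLocation = loc }
```

Moving from (24, 23) to (24, 24) yields a World whose token sits on the final square while worldResult is still GameInProgress; only the next call to updateFunc flips it to GameWon. That intermediate state is the "tiny gap" in which the token can be drawn on the end square.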

Displaying Victory!

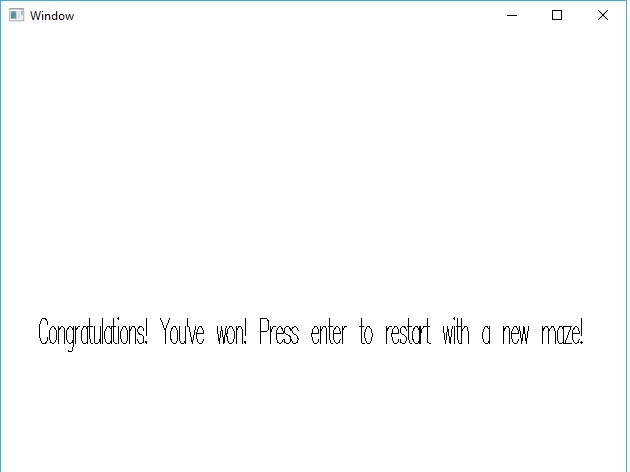

Now our game will update properly. But we have to respond to this change by changing what the display looks like! This is a quick fix. We'll add a similar guard to our drawingFunc:

drawingFunc :: (Float, Float) -> Float -> World -> Picture
drawingFunc (xOffset, yOffset) cellSize world
  | worldResult world == GameWon =
      Translate (-275) 0 $ Scale 0.12 0.25
        -- The space before the string gap keeps the two sentences apart
        (Text "Congratulations! You've won! \
              \Press enter to restart with a new maze!")
  | otherwise = ...

Note that Text here is the Gloss Picture constructor, not Data.Text. We also scale and translate it a bit so the text fits on the screen. This is all we need to get the victory screen to appear when the player finishes the maze!

Restarting the Game

The last step is to actually restart the game when the player hits Enter! This involves changing our inputHandler to give us a brand new World. As with our other functions, we'll add a guard to handle the GameWon case:

inputHandler :: Event -> World -> World
inputHandler event w
  | worldResult w == GameWon = …
  | otherwise = case event of
      ...

We'll want to add a new case expression that accounts for the user pressing the "Enter" key. All this branch needs to do is call generateRandomMaze and re-initialize the world!

inputHandler event w
  | worldResult w == GameWon = case event of
      (EventKey (SpecialKey KeyEnter) Down _ _) ->
        -- Reuse the stored generator, then store the new one for next time
        let (newMaze, gen') = generateRandomMaze
              (worldRandomGenerator w) (25, 25)
        in World (0, 0) (0, 0) (24, 24) newMaze GameInProgress gen'
      _ -> w
  | otherwise = ...

And with that, we're done! We can restart the game and navigate random mazes to our heart's content!
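The key detail in the restart branch is threading the generator: each restart consumes worldRandomGenerator and stores the returned gen' back into the new World. Here is a minimal sketch of that pattern without Gloss or System.Random. The Gen type, nextGen (a simple linear congruential step), generateMazeStub (which returns a maze "id" instead of a real Maze), and the Key type are all hypothetical stand-ins.

```haskell
-- Hypothetical stand-in for StdGen: a seed for a linear congruential step.
newtype Gen = Gen Int
  deriving (Show, Eq)

nextGen :: Gen -> (Int, Gen)
nextGen (Gen s) =
  let s' = (1664525 * s + 1013904223) `mod` 4294967296
  in (s', Gen s')

data GameResult = GameInProgress | GameWon
  deriving (Show, Eq)

-- Hypothetical cut-down World: an Int identifies the maze.
data World = World
  { worldMaze :: Int
  , worldResult :: GameResult
  , worldRandomGenerator :: Gen
  } deriving (Show, Eq)

-- Hypothetical stand-in for generateRandomMaze: returns (maze, next generator).
generateMazeStub :: Gen -> (Int, Gen)
generateMazeStub = nextGen

-- Hypothetical key type replacing Gloss's Event.
data Key = Enter | Other
  deriving (Show, Eq)

-- Same shape as the GameWon branch of inputHandler.
restart :: Key -> World -> World
restart key w
  | worldResult w == GameWon = case key of
      Enter ->
        let (newMaze, gen') = generateMazeStub (worldRandomGenerator w)
        in World newMaze GameInProgress gen'
      Other -> w
  | otherwise = w
```

Because gen' is stored, two consecutive restarts start from different generator states and produce different mazes. If we kept passing the original generator, every restart would rebuild the same maze.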

Conclusion

The ability to restart the game is great! But if we want to make our game re-playable instead of random, we'll need some way of storing mazes. In the next part, we'll look at some code for dumping a maze to an output format. We'll also need a way to re-load from this stored representation. This will ultimately allow us to make a true game with saving and loading state.

In preparation for that, you can read our series on Parsing. You'll especially want to acquaint yourself with the Megaparsec library. We go over this in Part 4 of the series!

Generating More Difficult Mazes!

In the last part of this series, we established the fundamental structures for our maze game. But our "maze" was still rather bland. It didn't have any interior walls, so getting to the goal point was trivial. In this next part, we'll look at an algorithm for random maze generation. This will let us create some more interesting challenges. In upcoming parts of this series, we'll explore several more related topics. We'll see how to serialize our maze definition. We'll refactor some of our data structures. And we'll also take a look at another random generation algorithm.

If you've never programmed in Haskell before, you should download our Beginners Checklist! It will help you learn the basics of the language so that the concepts in this series will make more sense. The State monad will also see a bit of action in this part. So if you're not comfortable with monads yet, you should read our series on them!

Getting Started

We represent a maze with the type Map.Map Location CellBoundaries. For a refresher, a Location is an Int tuple. And the CellBoundaries type determines what borders a particular cell in each direction:

type Location = (Int, Int)

data BoundaryType = Wall | WorldBoundary | AdjacentCell Location

data CellBoundaries = CellBoundaries
  { upBoundary :: BoundaryType
  , rightBoundary :: BoundaryType
  , downBoundary :: BoundaryType
  , leftBoundary :: BoundaryType
  }

An important note is that a Location refers to a position in discrete (x,y) space. That is, the first index is the column (starting from 0) and the second index is the row. Don't confuse row-major and column-major ordering! (I did this when implementing this solution the first time.)
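As an illustration of the (x, y) convention, here is a sketch of a hypothetical openGrid helper that builds a maze with no interior walls, like the bland maze from the last part. The types are copied from above (with added Show/Eq instances for testing); openGrid itself is an assumption for illustration, and it uses the same "up means y + 1" orientation as the rest of the series.

```haskell
import qualified Data.Map as Map

type Location = (Int, Int)

data BoundaryType = Wall | WorldBoundary | AdjacentCell Location
  deriving (Show, Eq)

data CellBoundaries = CellBoundaries
  { upBoundary :: BoundaryType
  , rightBoundary :: BoundaryType
  , downBoundary :: BoundaryType
  , leftBoundary :: BoundaryType
  } deriving (Show, Eq)

-- Hypothetical helper: every interior edge is open. Keys are (x, y),
-- i.e. (column, row), even though the size is given as (rows, columns).
openGrid :: (Int, Int) -> Map.Map Location CellBoundaries
openGrid (numRows, numColumns) = Map.fromList
  [ ((x, y), bounds x y) | x <- [0 .. numColumns - 1], y <- [0 .. numRows - 1] ]
  where
    bounds x y = CellBoundaries
      { upBoundary =
          if y + 1 == numRows then WorldBoundary else AdjacentCell (x, y + 1)
      , rightBoundary =
          if x + 1 == numColumns then WorldBoundary else AdjacentCell (x + 1, y)
      , downBoundary =
          if y == 0 then WorldBoundary else AdjacentCell (x, y - 1)
      , leftBoundary =
          if x == 0 then WorldBoundary else AdjacentCell (x - 1, y)
      }
```

With openGrid (2, 3), a grid of 2 rows and 3 columns, the key (2, 1) exists (column 2, row 1) but (1, 2) does not. Mixing up the order is exactly the row-major/column-major mistake mentioned above.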

To generate our maze, we'll want two inputs. The first will be a random number generator. This will help randomize our algorithm so we can keep generating new, fresh mazes. The second will be the desired size of our grid.

import qualified Data.Map as Map
import System.Random (StdGen, randomR)

…

generateRandomMaze
  :: StdGen
  -> (Int, Int)
  -> Map.Map Location CellBoundaries
generateRandomMaze gen (numRows, numColumns) = ...

A Simple Randomization Algorithm

This week, we're going to use a relatively simple algorithm for generating our maze. We'll start by assuming everything is a wall, and we've selected some starting position. We'll use the following depth-first-search pattern:

- Consider all the cells around us.

- If there are any we haven't visited yet, choose one of them as the next cell.

- "Break down" the wall between these cells, and put that new cell onto the top of our search stack, marking it as visited.

- If we have already visited every cell around us, pop the current location from the stack.

- As long as there is another cell on the stack, choose the top cell as the current location and continue searching from step 1.
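Here is a rough, self-contained sketch of those five steps as a pure recursive loop over an explicit stack. It is not the State-monad implementation this part builds. To avoid depending on the random package, it swaps StdGen/randomR for a hypothetical linear congruential step (nextRand), and it assumes (0, 0) as the starting position.

```haskell
import qualified Data.Map as Map
import qualified Data.Set as Set

type Location = (Int, Int)

data BoundaryType = Wall | WorldBoundary | AdjacentCell Location
  deriving (Show, Eq)

data CellBoundaries = CellBoundaries
  { upBoundary :: BoundaryType
  , rightBoundary :: BoundaryType
  , downBoundary :: BoundaryType
  , leftBoundary :: BoundaryType
  } deriving (Show, Eq)

type Maze = Map.Map Location CellBoundaries

-- Hypothetical stand-in for StdGen: a linear congruential step on a seed.
nextRand :: Int -> Int
nextRand s = (1664525 * s + 1013904223) `mod` 4294967296

-- Pick an index in [0, n) and return the advanced seed.
pickIndex :: Int -> Int -> (Int, Int)
pickIndex n s = let s' = nextRand s in ((s' `div` 65536) `mod` n, s')

-- Start from "everything is a wall"; grid edges are WorldBoundary.
allWalls :: (Int, Int) -> Maze
allWalls (numRows, numColumns) = Map.fromList
  [ ((x, y), CellBoundaries (edge (y + 1 == numRows)) (edge (x + 1 == numColumns))
                            (edge (y == 0)) (edge (x == 0)))
  | x <- [0 .. numColumns - 1], y <- [0 .. numRows - 1] ]
  where
    edge atBoundary = if atBoundary then WorldBoundary else Wall

-- "Break down" the wall between two adjacent cells, on both sides.
breakWall :: Location -> Location -> Maze -> Maze
breakWall a@(ax, ay) b@(bx, by) =
  Map.adjust (setSide (bx - ax, by - ay) b) a
    . Map.adjust (setSide (ax - bx, ay - by) a) b
  where
    setSide (0, 1) loc c = c { upBoundary = AdjacentCell loc }
    setSide (1, 0) loc c = c { rightBoundary = AdjacentCell loc }
    setSide (0, -1) loc c = c { downBoundary = AdjacentCell loc }
    setSide _ loc c = c { leftBoundary = AdjacentCell loc }

generateMaze :: Int -> (Int, Int) -> Maze
generateMaze seed dims@(numRows, numColumns) =
  go [(0, 0)] (Set.singleton (0, 0)) seed (allWalls dims)
  where
    inBounds (x, y) = x >= 0 && y >= 0 && x < numColumns && y < numRows
    neighbors (x, y) =
      filter inBounds [(x, y + 1), (x + 1, y), (x, y - 1), (x - 1, y)]

    -- Empty stack: every reachable cell has been visited.
    go [] _ _ maze = maze
    go stack@(current : rest) visited s maze =
      case filter (`Set.notMember` visited) (neighbors current) of
        -- No unvisited neighbors: pop and resume from the next cell down.
        [] -> go rest visited s maze
        -- Otherwise carve to a random unvisited neighbor and push it.
        candidates ->
          let (i, s') = pickIndex (length candidates) s
              next = candidates !! i
          in go (next : stack) (Set.insert next visited) s'
                (breakWall current next maze)
```

Since every cell is pushed exactly once, and each push breaks exactly one wall, the result is a spanning tree of the grid: every cell is reachable, and there is exactly one path between any two cells.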