Contributing to GHC 4: Real Issues

In the last few weeks, we’ve taken a good look at GHC. We started by looking at the steps we would need to prepare our local machine for GHC development. This was an especially difficult process on Windows, so we focused there. After that, we looked at the basic way of creating a development cycle for ourselves. We validated that by changing an error message and seeing how it appeared in the compiler. Last week, we made some more complicated changes. This week, we’re going to wrap this series up by looking at some basic ways of making contributions.

Documentation

Documentation is a tricky thing on any software project. At any given moment, most of the effort is going into making sure the program works as it ought to. When you understand the code already, you don’t need to look at the documentation. So the temptation is to not change any of the comments. This means documentation is always likely to fall out of date. Haskell, if anything, is more prone to this kind of lapse. We look for issues by making changes, compiling, and seeing what breaks. And documentation never breaks!

Experienced developers will remember to change documentation more. Still though, it’s inevitable that something will slip through the cracks. But there's good news for us as newcomers to the GHC code base! We’re in the best position to find holes in the documentation, since we’re the ones who need to read it most! This is how I found the first contribution I could make.

While exploring the lexing types, I found a comment that didn’t quite make sense. At the top of compiler/basicTypes/BasicTypes.hs, it states:

-- There is considerable overlap between the logic here and the logic
-- in Lexer.x, but sadly there seems to be way to merge them.

That doesn’t quite read right. From the context, it seems pretty clear that the author intended to write “there seems to be no way to merge them”. Great, so let’s submit a fix for this! We’ll create our fork, clone the repo, make the change on a new branch, and open a pull request against master.

Now there’s a somewhat annoying issue with the fact that the CI builds don’t actually seem to be passing right now. But hopefully this PR will get merged in at some point.

Issue Tracking with Trac

Of course, there are also much more complicated issues at stake with GHC. There are the real features we want to add to the codebase, and the bugs we want to fix! To take a look at what’s going on there, you’ll need to look at the issue tracker. GHC uses Trac for this, and you can browse all the issues on that list. They have labels based on what release they’re for, and how important they are.

It can be quite an overwhelming list. I scrolled through many different tickets and wasn’t sure what I could actually help with. So how can you find something to start out with? First, you can subscribe to the GHC devs mailing list. Conversations there will help you find what people are working on. Second, you can log onto Freenode and get onto the #ghc channel. You can ask anyone what’s going on and where you might help. Luckily, there is also a tag for “newcomers” on the list of issues. These are issues that the GHC devs have highlighted should be easy for people new to the codebase. Let’s take a look at one of these issues.

Looking at a Real Issue: Infix Patterns

From this hunt, I found this ticket, related to the infix value of (->). The ticket claims that the stated infix level of 0 for the arrow operator is actually incorrect. Let’s take a look at what they mean.

As a reminder, the infix level states an operator's priority when determining order of operations. For instance, the multiplication operator (*) has a higher infix level than the addition operator (+). We can confirm this information with a quick ghci session by using the :info command on each of these.

>> :i (+)
…
infixl 6 +
>> :i (*)
…
infixl 7 *
>> 5 + 2 * 3
11 -- Would be 21 if addition were higher precedence

Now, when two operators have the same infix level, we look at the direction of the fixity declaration. As an example, we can compare subtraction to addition. We’ll find it's also infixl 6. Since it’s infixl (as opposed to infixr), we give the left side operation priority. Here’s an example.

>> :i (-)
…
infixl 6 -
>> 5 - 2 + 18
21 -- Not (-15)

So let’s look at our arrow operator, which we use when defining our type signatures:

>> :i (->)
data (->) (a :: TYPE q) (b :: TYPE r) -- Defined in `GHC.Prim`
infixr 0 `(->)`
...

This suggests an infix level of 0 for this operator, and that we should prioritize things on the right. However, the person filing the bug suggests the following code:

{-# LANGUAGE TypeOperators #-}

module Bug where

import Data.Type.Equality

type (~>) = (->)
infixr 0 ~>

f :: (a ~> b -> c) :~: (a ~> (b -> c))
f = Refl

There’s a lot going on here with some higher level concepts, so let’s break it all down. First, (->) is a type operator, meaning it is a type constructor that we write infix between its arguments. Thus we can create a type synonym for it called (~>). Then we can assign this new operator to have whatever infix level we like. In this case, we’ll choose the same stated infix level as we have for the original operator, infixr 0.
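To get a feel for fixity declarations on operators we define ourselves, here's a small term-level sketch. The names minusL and minusR are made up for this illustration. Both behave like subtraction and share level 6, but they associate in opposite directions:

```haskell
-- Hypothetical operators, defined only for this illustration.
infixl 6 `minusL`
infixr 6 `minusR`

minusL, minusR :: Int -> Int -> Int
minusL = (-)
minusR = (-)

-- Parsed as (10 `minusL` 4) `minusL` 3
leftGrouped :: Int
leftGrouped = 10 `minusL` 4 `minusL` 3

-- Parsed as 10 `minusR` (4 `minusR` 3)
rightGrouped :: Int
rightGrouped = 10 `minusR` 4 `minusR` 3
```

If we load this in GHCi, leftGrouped evaluates to 3 and rightGrouped evaluates to 9, so the direction alone changes the answer.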

The next part creates an expression f. Its type signature uses the (:~:) operator for propositional equality between types. This type has a single constructor, Refl, which only type checks when both sides are the same type. The only thing you need to understand is that each of our arrow patterns ((a ~> b -> c) and (a ~> (b -> c))) is a type. And this code should only compile if those types are the same.
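If the equality type is new to you, here's a minimal sketch of how Refl behaves, using only the ordinary arrow. The names arrowsNestRight and applyViaProof are our own and don't appear in the ticket. The first definition compiles because (->) really does associate to the right. The second uses castWith from Data.Type.Equality to show that such a proof lets us convert between the two spellings:

```haskell
{-# LANGUAGE TypeOperators #-}

import Data.Type.Equality ((:~:) (..), castWith)

-- Compiles because `a -> b -> c` parses as `a -> (b -> c)`.
arrowsNestRight :: (a -> b -> c) :~: (a -> (b -> c))
arrowsNestRight = Refl

-- A proof of equality lets us convert a value from one type to the other.
applyViaProof :: (Int -> Int -> Int) -> (Int -> (Int -> Int))
applyViaProof = castWith arrowsNestRight
```

Changing the right-hand side of arrowsNestRight to ((a -> b) -> c) would make Refl fail to type check, which is the same kind of failure the ticket runs into.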

And on the face of it, these types should be the same. After all, both operators purport to be infixr 0, meaning the way we parenthesize it on the right side of (:~:) should match how it is naturally ordered. But the code does not compile!

>> ghci
>> :l Bug.hs
Bug.hs:11:5: error:
    * Couldn’t match type `a` with `a ~> b`
      `a` is a rigid type variable bound by
        f :: forall a b c. ((a ~> b) -> c) :~: (a ~> (b -> c))
        at Bug.hs:10:1-38
      Expected type: ((a ~> b) -> c) :~: (a ~> (b -> c))
        Actual type: ((a ~> b) -> c) :~: ((a ~> b) -> c)
    * In the expression: Refl
      In an equation for `f`: f = Refl
    * Relevant bindings include
        f :: ((a ~> b) -> c) :~: (a ~> (b -> c))
          (bound at Bug.hs:11:1)
   |
11 | f = Refl
   |

We can see on the “Actual type” line how the compiler interprets (a ~> b -> c). It gives priority to the left, not the right. Indeed, if we change the type signature to reflect priority given to (~>), our code will compile:

f :: (a ~> b -> c) :~: ((a ~> b) -> c)
f = Refl
…
>> ghci
>> :l Bug.hs
Ok, one module loaded.

The Fix

The fix, luckily for us, has already been proposed in the ticket. The compiler represents the infix level of our operators using the Fixity type. We can see a particular location where we’ve defined the level for some of our built-in operators:

negateFixity, funTyFixity :: Fixity
negateFixity = Fixity NoSourceText 6 InfixL -- Fixity of unary negate
funTyFixity = Fixity NoSourceText 0 InfixR -- Fixity of `->`

We want to change the fixity of the function type operator. Instead of it appearing to be 0, we should make it appear to be -1, showing the lower precedence of this operator. Note this code refers to how we report it. The actual reasons why it ends up having lower priority are more complicated. But let’s make that change:

funTyFixity = Fixity NoSourceText (-1) InfixR

Testing Our Change

This seems like it should be a simple change to test. First, we’ll make our code again. Then we’ll boot up GHCI and ask for info on (->). But this doesn’t appear to work when we try it!

> make
> ghci
...
>> :i (->)
data (->) (a :: TYPE q) (b :: TYPE r) -- Defined in `GHC.Prim`
infixr 0 `(->)`
...

The issue here is that re-making does not cause GHCI to use our new locally built version of GHC. Even when using ghci.exe from within the ghc/inplace/bin directory, it still doesn’t account for this change. The way around this is that instead of using ghci, we can pass the --interactive flag to a normal call to ghc. So we’ll want something like this:

~/ghc/inplace/bin/ghc-stage2.exe -o prog --interactive Main.hs

This will bring up a GHCI prompt that loads our main module. And now when we go ahead and get info, we’ll see that it works!

> ~/ghc/inplace/bin/ghc-stage2.exe -o prog --interactive Main.hs
...
>> :i (->)
data (->) (a :: TYPE q) (b :: TYPE r) -- Defined in `GHC.Prim`
infixr -1 `(->)`
...

So I’ll now make a simple pull request addressing this bug. You can follow the progress here. I’ll update this post as it moves further along in the process.
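To see why reporting -1 lines up with the parse we observed, here's a simplified model of comparing two fixities. The Fixity type below is a stand-in that drops the SourceText field, and groupsLeft is a hypothetical helper written for this post, not GHC's actual code. It only covers the two-operator case a op1 b op2 c:

```haskell
data FixityDirection = InfixL | InfixR | InfixN
  deriving (Eq, Show)

-- Stand-in for GHC's Fixity, without the SourceText field.
data Fixity = Fixity Int FixityDirection
  deriving (Eq, Show)

-- | For `a op1 b op2 c`: Just True means (a op1 b) op2 c,
-- Just False means a op1 (b op2 c), Nothing means ambiguous.
groupsLeft :: Fixity -> Fixity -> Maybe Bool
groupsLeft (Fixity p1 d1) (Fixity p2 d2) =
  case compare p1 p2 of
    GT -> Just True   -- op1 binds tighter, so it grabs b first
    LT -> Just False  -- op2 binds tighter
    EQ -> case (d1, d2) of
      (InfixL, InfixL) -> Just True
      (InfixR, InfixR) -> Just False
      _                -> Nothing

tildeArrow, statedArrow, proposedArrow :: Fixity
tildeArrow    = Fixity 0 InfixR     -- our (~>) synonym
statedArrow   = Fixity 0 InfixR     -- what :info reported for (->)
proposedArrow = Fixity (-1) InfixR  -- the value from the fix
```

In this model, groupsLeft tildeArrow statedArrow gives Just False, which is the grouping a ~> (b -> c) that the old report led us to expect. With the proposed value, groupsLeft tildeArrow proposedArrow gives Just True, matching the (a ~> b) -> c grouping the compiler actually chose.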

Conclusion

This wraps up our series on contributing to GHC! There are a lot of bugs out there, so don’t be afraid to take a look at anything labeled as newcomer. Just make sure to take a look at the discussion that’s occurred already on the ticket!

To learn more about Haskell, you can read our Liftoff Series (for beginners) or our Haskell Web Series if you’re already familiar with the language. You can also download our Haskell Beginners Checklist to get started! Or you can look at our Production Checklist if you want some ideas for more advanced projects.

Contributing to GHC 3: Hacking Syntax and Parsing

In last week's article, we made more progress in understanding GHC. We got our basic development cycle down and explored the general structure of the code base. We also made the simplest possible change by updating one of the error messages. This week, we'll make some more complex changes to the compiler, showing the ways you can tweak the language. It's unlikely you would make changes like these to fix existing issues. But it'll help us get a better grasp of what's going on.

As always, you can learn more about the basics of Haskell by checking out our other resources. Take a look at our Liftoff Series or download our Beginners Checklist!

Comments and Changing the Lexer

Let's get warmed up with a straightforward change. We'll add some new syntax to allow different kinds of comments. First we have to get a little familiar with the Lexer, defined in parser/Lexer.x. Let's try and define it so that we'll be able to use four apostrophes to signify a comment. Here's what this might look like in our code and the error message we'll get if we try to do this right now.

module Main where

'''' This is our main function
main :: IO ()
main = putStrLn "Hello World!"
…
Parser error on `''`
Character literals may not be empty
  |
5 | '''' This is our main function
  | ^^

Now, it's easy enough to add a new line describing what to do with this token. We can follow the example in the Lexer file. Here's where GHC defines a normal single line comment:

"-- " ~$docsym .* { lineCommentToken }
"--" [^$symbol \ ] .* { lineCommentToken }

It needs two cases because of Haddock comments. But we don't need to worry about that. We can specify our symbol on one line like so:

"''''" .* { lineCommentToken }

Now we can add the comment above into our code, and it compiles!

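To make the effect of that rule concrete, here's a toy model in plain Haskell. This is not how Alex or GHC's lexer works internally, and the names commentMarker, stripLineComment, and stripComments are hypothetical. It only mimics the visible behavior: everything from the four apostrophes to the end of the line disappears. It also ignores string and character literals, which a real lexer has to respect.

```haskell
import Data.List (isPrefixOf)

-- The marker we chose for our new comment syntax.
commentMarker :: String
commentMarker = "''''"

-- | Drop everything from the first marker to the end of a single line.
stripLineComment :: String -> String
stripLineComment [] = []
stripLineComment s@(c : rest)
  | commentMarker `isPrefixOf` s = []
  | otherwise = c : stripLineComment rest

-- | Apply the rule to every line of a source file.
stripComments :: String -> String
stripComments = unlines . map stripLineComment . lines
```

For instance, stripLineComment applied to the comment line from our example program returns the empty string, while a line without the marker comes back unchanged.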
Adding a New Keyword

Let's now look at how we could add a new keyword to the language. We'll start with a simple substitution. Suppose we want to use the word iffy like we use if. Here's what a code snippet would look like, and the compiler error we get at first:

main :: IO ()
main = do
    i <- read <$> getLine
    iffy i `mod` 2 == 0
        then putStrLn "Hello"
        else putStrLn "World"
…
Main.hs:11:5: error: parse error on input 'then'
   |
11 | then putStrLn "Hello"
   | ^^^^

Let's do a quick search for where the keyword "if" already exists in the parser section. We'll find two spots. The first is a list of all the reserved words in the language. We can update this by adding our new keyword to the list. We'll look for the reservedIds set in basicTypes/Lexeme.hs, and we can add it:

reservedIds :: Set.Set String
reservedIds = Set.fromList [ …
                           , "_", "iffy" ]

Now we also have to parse it so that it maps against a particular token. We can see a line in Lexer.x where this happens:

( "if", ITif, 0)

We can add another line right below it, matching it to the same ITif token:

( "iffy", ITif, 0)

Now the lexer maps iffy to the same token as if, so the rest of the compiler treats the two identically. Our code compiles and produces the expected result!

lghc Main.hs
./prog.exe
5
World

Reversing If

Now let's add a little twist to this process. We'll add another "if" keyword and call it reverseif. This will change the ordering of the if-statement. So when the boolean is false, our code will execute the first branch instead of the second. We'll need to work a little further upstream. We want to re-use as much of the existing machinery as possible and just reverse our two expressions at the right moment. Let's use the same code as above, except with the reverse keyword. Then if we input 5 we should get Hello instead of World.

main :: IO ()
main = do
    i <- read <$> getLine
    reverseif i `mod` 2 == 0
        then putStrLn "Hello"
        else putStrLn "World"

So we'll have to start by adding a new constructor to our Token type, under the current if token in the lexer.

data Token =
    …
    | ITif
    | ITreverseif
    ...

Now we'll have to add a line to convert our keyword into this kind of token.

...
("if", ITif, 0),
("reverseif", ITreverseif, 0),
...

As before, we'll also add it to our list of keywords:

reservedIds :: Set.Set String
reservedIds = Set.fromList [ …
                           , "_", "iffy", "reverseif" ]

Let's take a look now at the different places where we use the ITif constructor. Then we can handle ITreverseif the same way. We can find two more instances in Lexer.x. First, there's the function maybe_layout, which dictates if a syntactic construct might need an open brace. Then there's the isALRopen function, which tells us if we can start some kind of other indentation. In both of these, we'll follow the example of ITif:

maybe_layout :: Token -> P ()
…
    where
        f ITif = pushLexState layout_if
        f ITreverseif = pushLexState layout_if
        ...

isALRopen ITif = True
isALRopen ITreverseif = True
...

There's also a bit in Parser.y where we'll need to parse our new token:

%token
…
'if' { L _ ITif }
'reverseif' { L _ ITreverseif }

Now we need to figure out how these tokens create syntactic constructs. This also seems to occur in Parser.y. We can look, for instance, at the section that constructs basic if statements:

| 'if' exp optSemi 'then' exp optSemi 'else' exp
    {% checkDoAndIfThenElse $2 (snd $3) $5 (snd $6) $8 >>
       ams (sLL $1 $> $ mkHsIf $2 $5 $8)
           (mj AnnIf $1:mj AnnThen $4
              :mj AnnElse $7
              :(map (\l -> mj AnnSemi l) (fst $3))
             ++(map (\l -> mj AnnSemi l) (fst $6))) }

There's a lot going on here, and we're not going to try to understand it all right now! But there are only two things we'll need to change to make a new rule for reverseif. First, we'll obviously need to use that token instead of if on the first line.

Second, see that mkHsIf statement on the third line? This is where we make the actual Haskell "If" expression in our syntax tree. The $5 refers to the second instance of exp in the token list, and the $8 refers to the third and final expression. These are, respectively, the True and False branch expressions of our "If" statement. Thus, to reverse our "If", all we need to do is flip these arguments on the third line!
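The idea of swapping the branches while building the tree can be sketched on a toy syntax tree. Everything here (Expr, mkIf, mkReverseIf, eval) is a hypothetical stand-in written for this post. GHC's real expression type and mkHsIf are far richer.

```haskell
-- A toy expression type with just enough to show the swap.
data Expr
  = BoolLit Bool
  | StrLit String
  | If Expr Expr Expr -- condition, true branch, false branch
  deriving (Eq, Show)

-- Builds an ordinary conditional from the three parsed pieces.
mkIf :: Expr -> Expr -> Expr -> Expr
mkIf cond thenE elseE = If cond thenE elseE

-- Same pieces, but the branches trade places in the tree.
mkReverseIf :: Expr -> Expr -> Expr -> Expr
mkReverseIf cond thenE elseE = If cond elseE thenE

-- A tiny evaluator so we can observe the difference.
eval :: Expr -> Expr
eval (If c t e) = case eval c of
  BoolLit True  -> eval t
  BoolLit False -> eval e
  other         -> If other t e -- not a boolean, leave it stuck
eval e = e
```

With a false condition, eval (mkIf (BoolLit False) (StrLit "Hello") (StrLit "World")) gives StrLit "World", while the mkReverseIf version gives StrLit "Hello". Nothing downstream of the tree needs to know the keyword was different. The real grammar rule makes the same swap with $8 and $5.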

| 'reverseif' exp optSemi 'then' exp optSemi 'else' exp
    {% checkDoAndIfThenElse $2 (snd $3) $5 (snd $6) $8 >>
       ams (sLL $1 $> $ mkHsIf $2 $8 $5)
           (mj AnnIf $1:mj AnnThen $4
              :mj AnnElse $7
              :(map (\l -> mj AnnSemi l) (fst $3))
             ++(map (\l -> mj AnnSemi l) (fst $6))) }

Finally, there's one more change we need to make. Adding this line will introduce a couple new shift/reduce conflicts into our grammar. There are already 233, so we're not going to worry too much about that right now. All we need to do is change the count on the assertion for the number of conflicts:

%expect 235 -- shift/reduce conflicts

Now when we compile and run our simple program, we'll indeed see that it works as expected!

lghc Main.hs
./prog.exe
5
Hello

Conclusion

So this week we saw some more complicated changes to GHC that have tangible effects. Next week, we'll wrap up our discussion of GHC by looking at the contribution process. We'll see the "simple" way with Github first. Then we'll also walk through the more complicated process using tools like Arc and Phabricator.

To learn more about Haskell, you should check out some of our basic materials! If you're a beginner to the language, read our Liftoff Series. It'll teach you how to use Haskell from the ground up. You can also take a look at our Haskell Web Series to see some more advanced and practical skills!

Contributing to GHC 2: Basic Hacking and Organization

Last week, we took our first step into the world of GHC, the Glasgow Haskell Compiler. We summarized the packages and tools we needed to install to get it building. We did this even in the rather hostile environment of a Windows laptop. But, at the end of the day, we can now build the project with make and create our local version of GHC.

This week, we’ll establish our development cycle by looking at a very simple change we can make to the compiler. We’ll also discuss the architecture of the repository so we can make some cooler changes next week.

GHC is truly a testament to some of the awesome benefits of open source software. Haskell would not be the same language without it. But to understand GHC, you first have to have a decent grasp of Haskell itself! If you’ve never written a line of Haskell before, take a look at our Liftoff Series for some tips on how to get going. You can also download our Beginners Checklist.

You may have also heard that while Haskell is a neat language, it’s useless from an industry perspective. But if you take a look at our Production Checklist, you’ll find tons of tools to write more interesting Haskell programs!

Getting Started

Let’s start off by writing a very simple program in Main.hs.

module Main where

main :: IO ()
main = do
    putStrLn "Using GHC!"

We can compile this program into an executable using the ghc command. We start by running:

ghc -o prog Main.hs

This creates our executable prog.exe (or just prog if you’re not using Windows). Then we can run it like we can run any kind of program:

./prog.exe
Using GHC!

However, this uses the system level GHC that we had to install in order to build GHC locally!

which ghc
/mingw/bin/ghc

When we build GHC, it creates executables for each stage of the compilation process. It produces these in a directory called ghc/inplace/bin. So we can create an alias that will simplify things for us. We’ll write lghc to be a "local GHC" command:

alias lghc="~/ghc/inplace/bin/ghc-stage2.exe -o prog"

This will enable us to compile our single module program with lghc Main.hs.

Hacking Level 0

Ultimately, we want to be able to verify our changes. So we should be able to modify the compiler, build it again, use it on our program, and then see our changes reflected in the code. One simple way to test the compiler’s behavior is to change the error messages. For example, we could try to import a module that doesn’t exist:

module Main where

import OtherModule (otherModuleString)

main :: IO ()
main = do
    putStrLn otherModuleString

Of course, we’ll get an error message:

[1 of 1] Compiling Main (Main.hs, Main.o)
Main.hs:3:1: error:
    Could not find module 'OtherModule'
    Use -v to see a list of the files searched for.
  |
3 |import OtherModule (otherModuleString)
  |^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

Let’s try now changing the text of this error message. We can do a quick search for this message in the compiler section of the codebase and find where it’s defined:

cd ~/ghc/compiler
grep -r "Could not find module" .
./main/Finder.hs:cannotFindModule = cantFindErr (sLit "Could not find module")

Let’s go ahead and update that string to something a little different:

cannotFindModule :: DynFlags -> ModuleName -> FindResult -> SDoc
cannotFindModule = cantFindErr
    (sLit "We were unable to locate the module")
    (sLit "Ambiguous module name")

Now let’s go ahead and rebuild, except let’s use some of the techniques from last week to make the process go a bit faster. First, we’ll copy mk/build.mk.sample to mk/build.mk. We’ll uncomment the following line, as per the recommendation from the setup guide:

BuildFlavour=devel2

We’ll also uncomment the line that says stage=2. This will restrict the build to only the final stage of the compiler. It will skip past stage 0 and stage 1, which we’ve already built.

We’ll also build from the compiler directory instead of the root ghc directory. Note though that since we’ve changed our build file, we’ll have to boot and configure once again. But after we’ve re-compiled, we’ll now find that we have our new error message!

[1 of 1] Compiling Main (Main.hs, Main.o)
Main.hs:3:1: error:
    We were unable to locate the module 'OtherModule'
    Use -v to see a list of the files searched for.
  |
3 |import OtherModule (otherModuleString)
  |^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

General Architecture

Next week, we’ll look into making a more sophisticated change to the compiler. But at least now we’ve validated that we can develop properly. We can make a change, compile in a short amount of time, and then determine that the change has made a difference. But now let’s consider the organization of the GHC repository. This will help us think some more about the types of changes we’ll make. I’ll be drawing on this description written by Simon Peyton Jones and Simon Marlow.

There are three main parts to the GHC codebase. The first of these is the compiler itself. The job of the compiler is to take our Haskell source code and convert it into machine executable code. Here is a very non-exhaustive list of some of the compiler’s tasks:

- Determining the location of referenced modules

- Reading a single source file

- Breaking that source into its simplest syntactic representation

Then there is the boot section. This section deals with the libraries that the compiler itself depends on. These include low level types like Int as well as modules such as Data.Map. This section is somewhat more stable, so we won’t look at it too much.

The last major section is the Runtime System (RTS). This takes the code generated by the compiler above and determines how to run it. A lot of magic happens in this part that makes Haskell particularly strong at tasks like concurrency and parallelism. It’s also where we handle mechanics like garbage collection.

We’ll try to spend most of our time in the compiler section. The compilation pipeline has many stages, like type checking and de-sugaring. This will let us zero in on a particular stage and make a small change. Also the Runtime System is mostly C code, while much of the compiler is in Haskell itself!
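To make the idea of a staged pipeline concrete, here's a toy sketch for a tiny language of additions. None of these names come from GHC, and the real stages are vastly more involved. The point is only the shape: each stage is its own function, so we can change one without touching the others.

```haskell
import Control.Monad ((>=>))
import Data.Char (isDigit)

data Token = TNum Int | TPlus
  deriving (Eq, Show)

data Expr = Num Int | Add Expr Expr
  deriving (Eq, Show)

-- Stage 1: split the source text into tokens.
lexer :: String -> Either String [Token]
lexer = mapM toToken . words
  where
    toToken "+" = Right TPlus
    toToken w
      | all isDigit w = Right (TNum (read w))
      | otherwise = Left ("unexpected input: " ++ w)

-- Stage 2: build a left-nested syntax tree from the tokens.
parser :: [Token] -> Either String Expr
parser (TNum n : rest) = go (Num n) rest
  where
    go acc [] = Right acc
    go acc (TPlus : TNum m : more) = go (Add acc (Num m)) more
    go _ _ = Left "expected '+' followed by a number"
parser _ = Left "expected a number"

-- Stage 3: stands in for code generation by evaluating the tree.
evaluate :: Expr -> Either String Int
evaluate = Right . eval
  where
    eval (Num n) = n
    eval (Add l r) = eval l + eval r

-- The whole pipeline is just the stages chained together.
pipeline :: String -> Either String Int
pipeline = lexer >=> parser >=> evaluate
```

Running pipeline "1 + 2 + 3" gives Right 6, while pipeline "1 +" stops in the parser stage with a Left. Changing an error message in one stage, as we did above, leaves the other stages alone.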

Conclusion

Next week we’ll take a look at a couple more ways to modify the compiler. After that, we’ll start looking at taking real issues from GHC and see what we can do to try and fix them! We’ll eventually take a peek at the submission process both with Github and Phabricator.

If you want to start out your Haskell journey, you should read our Liftoff Series! It will help you learn the basics of this awesome language. For more updates, you can also subscribe to our monthly newsletter!

Contributing to GHC 1: Preparation

In the last few weeks, we’ve looked at a few different open source Haskell projects like HNix and Codeworld. This week, we’ll start looking at perhaps the biggest and most important open source element of the Haskell ecosystem. This is GHC, the Glasgow Haskell Compiler. Without GHC and the hard work that goes into it from many volunteers, Haskell would not be the language it is today. So in the next few weeks we’ll explore the process of building and (hopefully) contributing to GHC.

I’m currently operating on a Windows laptop, which brings many frustrations. Getting GHC to build on Windows is a non-trivial task with many potential hurdles. On the bright side, I view this as an opportunity to show that one can contribute even in the most adverse circumstances. So most of this article will focus on the trials of using Windows. There is a section further down that goes over the most important parts of building for Mac and Linux. I’ll be following this guide by Simon Peyton Jones, sharing my own complications.

Now, you need to walk before you can run. If you’ve never used Haskell before, you have to try it out first to understand GHC! Download our Beginner’s Checklist to get started! You can also read our Liftoff Series to learn more about the language basics.

MSys

The main complication with Windows is that the build tools for GHC are made for Unix-like environments. These tools include programs like autoconf and make. And they don’t work in the normal Windows terminal environment. This means we need some way of emulating a Unix terminal environment in Windows. There are a couple different options for this. One is Cygwin, but the more supported option for GHC is MSYS 2. So my first step was to install this program. This terminal will apply the “Minimalist GNU for Windows” libraries, abbreviated as “MinGW”.

Installing this worked fine the first time. However, there did come a couple points where I decided to nuke everything and start from scratch. Re-installing did bring about one problem I’ll share. In a couple circumstances where I decided to start over, I would run the installer, only to find an error stating bash.exe: permission denied. This occurred because the old version of this program was still running as a process. You can kill the process or else just restart your machine to get around this.

Once MSys is working, you’ll want to set up your terminal to use MinGW programs by default. To do this, you’ll want to set the path to put the mingw directory first:

echo "export PATH=/mingw<bitness>/bin:\$PATH" >> ~/.bash_profile

Use either 32 or 64 for <bitness> depending on your system. Also don’t forget the quotation marks around the command itself!

Package Preparation

Our next step will be to get all the necessary packages for GHC. MSys 2 uses an older package manager called pacman, which operates kind of like apt-get. First you’ll need to update your package repository with this command:

pacman -Syuu

As per the instructions in SPJ’s description, you may need to run this a couple times if a connection times out. This happened to me once. Now that pacman is working, you’ll need to install a host of programs and libraries that will assist in building GHC:

pacman -S --needed git tar bsdtar binutils autoconf make xz \
    curl libtool automake python python2 p7zip patch ca-certificates \
    mingw-w64-$(uname -m)-gcc mingw-w64-$(uname -m)-python3-sphinx \
    mingw-w64-$(uname -m)-tools-git

This command typically worked fine for me. The final items we’ll need are alex and happy. These are Haskell programs for lexing and parsing. We’ll want to install Cabal to do this. First let’s set a couple variables for our system:

arch=x86_64 # could also be i386
bitness=64 # could also be 32

Now we’ll get a pre-built GHC binary that we’ll use to bootstrap our own build later:

curl -L https://downloads.haskell.org/~ghc/8.2.2/ghc-8.2.2-${arch}-unknown-mingw32.tar.xz | tar -xJ -C /mingw${bitness} --strip-components=1

Now we’ll use Cabal to get those packages. We’ll place them (and Cabal) in /usr/local/bin, so we’ll make sure that’s created first:

mkdir -p /usr/local/bin
curl -L https://www.haskell.org/cabal/release/cabal-install-2.2.0.0/cabal-install-2.2.0.0-${arch}-unknown-mingw32.zip | bsdtar -xzf- -C /usr/local/bin

Now we’ll update our Cabal repository and get both alex and happy:

cabal update
cabal install -j --prefix=/usr/local/bin alex happy

Once while running this command I found that happy failed to install due to an issue with the mtl library. I got errors of this sort when running the ghc-pkg check command:

Cannot find any of ["Control\\Monad\\Cont.hi", "Control\\Monad\Cont.p_hi", "Control\\Monad\\Cont.dyn_hi"]
Cannot find any of ["Control\\Monad\\Cont\\Class.hi", "Control\\Monad\Cont\\Class.p_hi", "Control\\Monad\\Cont\\Class.dyn_hi"]

I managed to fix this by doing a manual re-install of the mtl package:

cabal install -j --prefix=/usr/local/ mtl --reinstall

After this step, there were no errors on ghc-pkg check, and I was able to install happy without any problems.

cabal install -j --prefix=/usr/local/ happy
Resolving dependencies…
Configuring happy-1.19.9…
Building happy-1.19.9…
Installed happy-1.19.9

Getting the Source and Building

Now our dependencies are all set up, so we can actually go get the source code now! The main workflow for contributing to GHC uses some other tools, but we can start from Github.

git clone --recursive git://git.haskell.org/ghc.git

Now, you should run the ./boot command from the ghc directory. This resulted in some particularly nasty problems for me thanks to my antivirus. It decided that perl was an existential threat to my system and threw it in the Virus Chest. You might see an error like this:

sh: /usr/bin/autoreconf: /usr/bin/perl: bad interpreter: No such file or directory

Even after copying another version of perl over to the directory, I saw errors like the following:

Could not locate Autom4te/ChannelDefs.pm in @INC (@INC contains /usr/share/autoconf C:/msys64/usr/lib .) at C:/msys64/usr/bin/autoreconf line 39

In reality, the @INC path should have a lot more entries than that! It took me a while (and a couple complete do-overs) to figure out that my antivirus was the problem here. Everything worked once I dug perl out of the Virus Chest. Once boot runs, you’re almost set! You now need to configure everything:

./configure --enable-tarballs-autodownload

The extra option is only necessary on Windows. Finally you’ll use the make command to build everything. Expect this to take a while (12 hours and counting for me!). Once you’re familiar with the codebase, there are a few different ways you can make things build faster. For instance, you can customize the build.mk file in a couple different ways. You can set BuildFlavour=devel2, or you can set stage=2. The latter will skip the first stage of the compiler.

You can also run make from the sub-directories rather than the main directory. This will only build the sub-component, rather than the full compiler. Finally, there’s also a make fast command that will skip building a lot of the dependencies.

Mac and Linux

I won’t go into depth on the instructions for Mac and Linux here, since I haven’t gotten the chance to try them myself. But due to the developer-friendly nature of those systems, they’re likely to have fewer hitches than Windows.

On Linux for instance, you can actually do most of the setup by using a Docker container. You’ll download the source first, and then you can run this docker command:

>> sudo docker run --rm -i -t -v `pwd`:/home/ghc gregweber/ghc-haskell-dev /bin/bash

On Mac, you’ll need to install programs similar to those on Windows, but there’s no need to use a terminal emulator like MSys. If you have the basic developer tools and a working version of GHC and Cabal already, it might be as simple as:

>> brew install autoconf automake
>> cabal install alex happy haddock
>> sudo easy_install pip
>> sudo pip install sphinx

For more details, check here. But once you’re set up, you’ll follow the same boot, configure and make instructions as for Windows.

Conclusion

So that wraps up our first look at GHC. There’s plenty of work to do just to get it to build! But next week we’ll start looking at some of the simplest modifications we can make to GHC. That way, we can start getting a feel for how the code base works.

If you haven’t written Haskell, it’s hard to appreciate the brilliance of GHC! Get started by downloading our Beginners Checklist and reading our Liftoff Series!

Codeworld: Haskell as a First Programming Language

In the last couple weeks, we’ve explored a couple different Haskell open source projects. We checked out the Nix package manager and its Haskell cousin. Open source is very important to the Haskell community, so we’ll continue in this vein for a little while longer. This week, we’ll explore Codeworld, another project I learned about at Bayhac about a month ago. In the coming weeks, we’ll look at GHC itself, a vital open-source component of the Haskell ecosystem.

What is Codeworld?

Codeworld is an educational tool for teaching kids about mathematics and programming. The most basic version of Codeworld allows students to create geometric images. They do this using simple programming expressions similar to Haskell. Here’s a very basic program we can write and the picture it would draw.

leaves = sector(0, 180, 4)

trunk = solidRectangle(1,4)

tree = colored(leaves, translucent(green)) & colored(trunk, dark(brown))

program = drawingOf(tree)

This is different from similar sorts of programs and languages in many ways. The Logo programming language that I first learned used a more procedural style. You create “turtles” that move around the screen and perform commands. For example, you could tell a turtle to start drawing, move 25 pixels, turn, and move again. You might also approach drawing in an object oriented fashion. You'd create shapes that have different properties and change these over time. But Codeworld eschews both these approaches in favor of a more functional style.

Your program is ultimately a single drawing. You can compose this drawing with different components, always represented by expressions. As you learn more about the different patterns, you can create your own functions.

leaves = sector(0, 180, 4)

trunk = solidRectangle(1,4)

tree :: (Color, Color) -> Picture

tree(c1, c2) = colored(leaves, translucent(c1)) &

  colored(trunk, dark(c2))

myTree :: (Number, Color, Color) -> Picture

myTree(x, c1, c2) = translated(tree(c1, c2), x, 0)

program = drawingOf(myTree(-5, green, brown) & myTree(5, red, black))

Within a few examples, it’s relatively easy to teach the concept of recursion! Here’s a simple example showing repetition and fractals:

branch :: Number -> Picture

branch(0) = blank

branch(n) =

  polyline([(0,0), (0, 5)]) &

  translated(smallBranch, 0, 5) &

  translated(rotated(smallBranch, 30), 0, 2.5) &

  translated(rotated(smallBranch, -30), 0, 2.5)

  where smallBranch = scaled(branch(n-1), 0.5, 0.5)

tree :: Picture

tree = branch(7)

program = drawingOf(tree)

Codeworld Haskell

Now the basic version of Codeworld is like Haskell but with some simplifications and syntactic changes. There is also Codeworld Haskell, which employs the full Haskell feature set. This lets you use more complex items and dive into the type signatures a bit more.

It also involves more complex functions than drawing. You can create animations and interactions between different elements, or track a global state. It’s even possible to create simple games. The interactionOf function allows you to handle input events that can affect the world. The collaborationOf function looks a bit complicated with its use of StaticPtr. But it allows you to create multiplayer games with relative ease!

drawingOf :: Picture -> IO ()

animationOf :: (Double -> Picture) -> IO ()

simulationOf

  :: world

  -> (Double -> world -> world)

  -> (world -> Picture)

  -> IO ()

interactionOf

  :: world

  -> (Double -> world -> world)

  -> (Event -> world -> world)

  -> (world -> Picture)

  -> IO ()

collaborationOf

  :: Int

  -> StaticPtr (StdGen -> world)

  -> StaticPtr (Double -> world -> world)

  -> StaticPtr (Int -> Event -> world -> world)

  -> StaticPtr (Int -> world -> Picture)

  -> IO ()

Using Codeworld

The easiest way to get started is to go to https://code.world, follow the Guide, and make some simple programs! Everything takes place in your web browser, so you can get a feel for how it works without needing to do any setup.

If you want to contribute to or fiddle with the source code at all, you’ll have to do some more involved work. You’ll need to follow the instructions on the Github repository, which are primarily for the main Linux distributions. You’ll also need to sign a Google Contributor License Agreement if you haven’t already. But if you want to help on some kind of educational Haskell tool, this is a great project to contribute to! It’s already in use in several schools!

Conclusion

Next week we’ll continue our open-source focus by beginning to look at the process of contributing to GHC. This compiler is a mainstay of the Haskell community. And it depends entirely on volunteer contributions! Naturally though, it's difficult to understand all the inner workings of a compiler. So we’ll start at a very basic level and work our way up. We'll begin by looking at contributions to less technical areas. Only at the end of our discussion will we start looking at any of the organization of the code itself.

If you’ve never written any Haskell before, Codeworld is actually a great way to introduce yourself to some of the fundamentals! But for a more classical introduction, you can also get our Haskell Beginner’s Checklist. It’ll walk you through the basics of setting Haskell up on your system.

HNix: Enhancing Nix with Haskell

Last week we introduced Nix, the purely functional package manager. We saw how it used some different conceptual techniques from functional programming. With these concepts, it seeks to solve some problems in package management. It shares many concepts with Haskell, so it is most often used by Haskell developers.

Because of the Haskell community's interest in Nix, an interesting project has arisen alongside it. This is HNix, which I mentioned a few weeks ago in my article about BayHac. HNix is a Haskell implementation of various components of Nix. In this quick article, we’ll look at the different elements of this project.

The Nix Language and the Nix Store

The term “Nix” is a little overloaded. It refers to the package manager or the operating system, but also refers to a language. The Nix language is how we specify the values that represent our different packages. The core repository of this project implements the Nix language in Haskell.

This implementation would make it easier to integrate Nix with your Haskell code. For example, you could combine Nix versioning of your packages with a database schema. This could ensure that you can automatically handle migrations.

Another part of the project is an interface to the Nix Store. The store deals with how Nix actually saves all the different packages on your system. While Nix does sandbox its packages, it can still be useful to have a programmatic interface on them. This allows you to manipulate a representation of this store in-memory, rather than on the file system. For instance, one store implementation has no side effects at all, to allow for unit testing. Another would read from the file system. But then it would perform all write effects in memory without modifying anything.
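To make the last idea a bit more concrete, here’s a minimal sketch of a store that reads from a snapshot of the file system but keeps every write in memory. This is not HNix’s actual API. The names OverlayStore, fromSnapshot, readPath and writePath are all hypothetical, and the “file system” is just a Map so the example stays pure.

```haskell
import qualified Data.Map.Strict as Map

type StorePath = FilePath
type Contents  = String

-- Hypothetical model: a read-only snapshot of what is on disk, plus
-- writes that only ever live in memory.
data OverlayStore = OverlayStore
  { onDisk   :: Map.Map StorePath Contents
  , inMemory :: Map.Map StorePath Contents
  }

-- Start from a snapshot with no pending in-memory writes.
fromSnapshot :: [(StorePath, Contents)] -> OverlayStore
fromSnapshot entries = OverlayStore (Map.fromList entries) Map.empty

-- Reads prefer in-memory writes and fall back to the snapshot.
readPath :: StorePath -> OverlayStore -> Maybe Contents
readPath path store =
  case Map.lookup path (inMemory store) of
    Just contents -> Just contents
    Nothing       -> Map.lookup path (onDisk store)

-- Writes only touch the in-memory layer, never the snapshot.
writePath :: StorePath -> Contents -> OverlayStore -> OverlayStore
writePath path contents store =
  store { inMemory = Map.insert path contents (inMemory store) }
```

Since writePath returns a new value and leaves onDisk alone, a test can write whatever it likes and then throw the store away.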

Open Source Haskell

One of the main reasons I’m discussing HNix is that it’s a good gateway to open source Haskell. If you’ve wanted to contribute to an OS Haskell project and weren’t sure where to start, HNix is a great option. The maintainers are very friendly guys. They'd be more than happy to help you get started in understanding the code base. At BayHac I was very impressed with how well organized the project was. Specifically, the maintainers made it easy for new people to get involved in the project. They laid out many different issue tickets that were doable even for non-experts.

So to get started, take a look at the repository. The README instructions are pretty thorough. Then you can go through the issues section for a little bit and pick up one of the tickets with a “Help Wanted” label. You can email one of the maintainers for help (John Wiegley is probably your best bet). Tagging them in an issue comment should also work if you need some direction.

Conclusion

Haskell depends a lot on open source contributions. A lot of the core pieces of infrastructure (GHC, Stack, Cabal) are all maintained via open source. When you can make these contributions, you’ll be able to rapidly improve your Haskell, add value to the community, and meet great people along the way! Next week, we’ll look at another open source Haskell project.

And if you’ve never written any Haskell before, don’t be afraid! You can start your learning journey with our Beginners Checklist. You’ll be able to make solid contributions much quicker than you think!

Nix: Haskell Concepts for Package Management

Back in my BayHac article, I discussed some of my adventures with Nix and HNix. I didn’t get a lot done. But I was still curious to learn more about these systems. I “used” Nix a little bit at a previous job. And by “used” I mean I learned enough of the basic commands to write code and get on with my life. But I never developed a full understanding of “why Nix” or “what’s good about Nix”. So I’m going to spend a couple weeks doing a high level overview of this program and why it's so cool.

As an introduction, Nix is a purely functional package manager. It aims to be a language-agnostic system to achieve deterministic builds. We’ll get into what it means to be a “purely functional” package manager down below. But a lot of the properties that make Nix what it is are also present in Haskell. So while you could use Nix for any language, most of the development effort so far has come from Haskellers. Meanwhile, NixOS is a Linux distribution that seeks to apply the main principles of Nix at the operating system level.

This first article will discuss the basics of Nix, its advantages, and disadvantages. Next week, we’ll take a look at the HNix project, which seeks to implement Nix in Haskell. It’s important to understand though that Nix is definitely not the easiest package manager to use for Haskell. For now, I would still recommend starting out with Stack. You can read the docs or check out our free Stack mini-course to learn more! And if you’ve never used Haskell before, download our Beginners Checklist to get started!

Now to motivate the use of Nix, let’s consider some of the broader issues with package management.

Package Problems

At the most basic level, a package manager should enable you to get a program up and running in a small number (~3) of commands. And most accomplish this task, but there are always complications. We’ll look at two main issues. One is versioning. This includes both versioning your own projects and versioning dependencies. The other problem relates to the portability of your application.

The versioning problem plagued Haskell developers when Cabal was still young. Cabal would, by default, install dependencies system wide. But suppose you had many projects on your machine. These might depend on different versions of the same library. And this could lead to conflicts in your system that might render multiple projects unusable.
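Here’s a rough sketch of why a system wide install causes trouble. It assumes a toy model where the global location has exactly one slot per library. The Project type and the globalConflicts function are made up for this illustration and have nothing to do with how Cabal works internally.

```haskell
import qualified Data.Map.Strict as Map
import Data.List (nub)

type Library = String
type Version = String

-- Hypothetical project description: a name plus pinned dependencies.
data Project = Project
  { projectName :: String
  , projectDeps :: [(Library, Version)]
  }

-- Collect every version each library is asked for, across all projects.
requestedVersions :: [Project] -> Map.Map Library [Version]
requestedVersions projects =
  Map.map nub $ Map.fromListWith (flip (++))
    [ (lib, [ver]) | p <- projects, (lib, ver) <- projectDeps p ]

-- With a single system-wide slot per library, any library requested at
-- more than one version is a conflict.
globalConflicts :: [Project] -> Map.Map Library [Version]
globalConflicts = Map.filter ((> 1) . length) . requestedVersions
```

Two projects that pin different versions of the same library show up in globalConflicts, while a library they agree on does not. Per-project install locations avoid the issue because each project gets its own slots.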

The addition of Cabal sandboxes and the Stack program mitigated this problem. Both these systems install dependencies in project specific locations. But there was still a problem where it could be difficult to roll back to a previous version of your project. The commands to uninstall and downgrade the packages weren’t intuitive. They could easily break things if you weren't careful.

Meanwhile, unseen dependencies threaten our portability. This is somewhat more common in building C or C++ programs than Haskell programs. C libraries are often still installed system wide. One of the consequences is that you might have a library from another project on your system. Then a new project also depends on it, but you forget to list that dependency. It works fine for you on your local machine. But then when you push your code somewhere else, that dependency isn’t found. This can be quite a hassle.

The Nix Functional Approach

Nix (the package manager) seeks to avoid these problems by using a functional approach to package management. It treats every package as a value constructed by a function. The key input to the function of any package is its dependency graph. That is, a package is the final output, and the other (versioned) libraries are the input. Each version of a package you build has a unique identifier. This identifier is a cryptographic hash of the dependency graph. So if any of the dependencies to your program change, you’ll rebuild and create a totally new version of your package. This means adding dependencies, removing them, or changing versions.

Nix stores all its packages in the /nix/store directory. So you might build one version of your project that ends up in this directory:

/nix/store/2gk7rk2sxx2dkmsjr59gignrfdmya8f6s-my-project-1.0.1

And then you might change the dependencies and end up with another directory.

/nix/store/lg5mkbclaphyayzsxnjlxcko3kll5nbaie-my-project-1.0.2

What are the consequences of this?

Notice it’s very easy to version our project! If we decide to roll back to a previous set of dependencies, that version will still be living on our machine! We’ll update the dependency set. Nix then calculates the hash of the dependency graph, and this will match an old configuration. So we’ll be all set! This goes for any of our dependencies as well.
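The hashing idea can be sketched in a few lines of Haskell. This is a toy model under loud assumptions: the Package type and storePath function are invented for illustration, and FNV-1a is a simple non-cryptographic hash standing in for the cryptographic hash Nix really uses. The real store paths are computed differently.

```haskell
import Data.Bits (xor)
import Data.Char (ord)
import Data.List (foldl', sort)
import Data.Word (Word64)
import Numeric (showHex)

-- Hypothetical package description: a name, a version, and the
-- packages it depends on.
data Package = Package
  { pkgName    :: String
  , pkgVersion :: String
  , pkgDeps    :: [Package]
  }

-- FNV-1a, a simple NON-cryptographic hash used here only as a stand-in.
fnv1a :: String -> Word64
fnv1a = foldl' step 14695981039346656037
  where
    step h c = (h `xor` fromIntegral (ord c)) * 1099511628211

-- The path depends on the package itself and, recursively, on the
-- paths of everything it depends on.
storePath :: Package -> FilePath
storePath pkg =
  "/nix/store/" ++ showHex (fnv1a fingerprint) "" ++ "-" ++ label
  where
    label       = pkgName pkg ++ "-" ++ pkgVersion pkg
    fingerprint = unwords (label : sort (map storePath (pkgDeps pkg)))
```

Because storePath is a pure function of the dependency graph, going back to an old set of dependencies produces the old path again. Changing any dependency changes the fingerprint, and so the path of everything built on top of it.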

There are in fact specific commands related to rollbacks. This means you can upgrade packages without being afraid of any difficulties.

Nix also solves the second problem we mentioned above. First, we explicitly declare all the dependencies as inputs. And second, we only use dependencies we get from the Nix store, rather than any system wide location. This means our derivations are complete. Thus someone else should be able to take the definition and build it themselves.

Nix OS

NixOS seeks to take many of the lessons from the Nix package manager and apply them at the OS level. Many of the problems that plague package management also plague OS management. For instance, upgrading packages with sudo apt-get install can be a risky operation. It can be difficult to roll back, and almost impossible to test what is going to happen before you upgrade. NixOS fixes these. It allows you to have versioned, reproducible system configurations. And you can roll back to a configuration with ease. It also gives you atomic transactions on system modifications. This way, even if something goes wrong, you’ll be completely reverted to your old system state.
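As a rough mental model, you can think of the system as a value that remembers its earlier configurations. The sketch below is only that, a model. Config, System, switchTo and rollback are hypothetical names, and the activation step is passed in as a plain function rather than anything NixOS actually runs.

```haskell
-- Hypothetical stand-in for a full system configuration.
newtype Config = Config [String]
  deriving (Eq, Show)

-- The active configuration plus earlier generations, newest first.
data System = System
  { active   :: Config
  , previous :: [Config]
  } deriving (Eq, Show)

-- Try to move to a new configuration. If activation fails we get a
-- Left and the System value we started with is simply still there.
switchTo :: (Config -> Either String Config) -> Config -> System -> Either String System
switchTo activate new sys = do
  ready <- activate new
  pure (System ready (active sys : previous sys))

-- Roll back one generation, if there is one.
rollback :: System -> Maybe System
rollback (System _ [])           = Nothing
rollback (System _ (old : rest)) = Just (System old rest)
```

A failed switchTo never produces a half-updated System, which is the property the atomic transactions give you. And rollback after a successful switch hands back exactly the state you started from.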

Weaknesses with Nix

One potential weakness with Nix is that it defaults to building from source. This means you’ll often have long build times, even for small changes in your code or dependencies. If you’re in luck, you can use the Nix cache for your specific libraries. It stores pre-built binaries you can use. But from my experience using Nix, the length of build times was one of the biggest things holding it back. In particular it was very difficult to incorporate Nix into a CI system, as it was prone to cause timeouts.

Conclusion

So hopefully this gives you some idea of what Nix is about. Next week, we’ll look into HNix. This open source project is seeking to re-implement Nix in Haskell. We’ll see why in our exploration of the project. In the meantime, check out some of our resources on Getting Started with Haskell so you can learn how to get going! And if you want a little bit of experience with package management in Haskell, make sure to try out Stack! Check out our free Stack mini-course to learn how!

Advanced Github: Webhooks and Automation

A couple weeks ago, we saw how to use Docker in conjunction with Heroku to deploy our Haskell application. The process resulted in a simpler Circle CI config than we had before, as we let Docker do most of the heavy lifting. In particular, we no longer needed to download and build stack ourselves. We specified the build process in our Dockerfile, and then called docker build. We also saw a couple different ways to log in to these services from our Circle CI box.

In the future, we’ll look at ways to use more diverse deployment platforms than Heroku. In particular, we’ll look at AWS. But that’s a tough nut to crack, so it might be worthy of its own series! For now, we’ll conclude our series on deployment by looking at the Github developer API. Most projects you’ll work on use Github for version control. But with the API, there are a lot of interesting tricks that can make your experience cooler! This week, we’ll see how to set up a server that will respond to events that happen within our repository. Then we’ll see how we can send our own events from the server! You can follow along with this code by looking at this Github repository!

This article builds a lot on our knowledge of the Servant library. If you’ve never used that before, I highly recommend you read our Haskell Web Skills series. You'll learn about Servant and much more! You can also download our Production Checklist for more tools to use in your applications.

Github Webhooks Primer

First let’s understand the concept of webhooks. Many services besides Github also use them. A webhook is an integration where a service will send an HTTP request to an endpoint of your choosing whenever some event happens. Webhooks are often a way for you to get some more advanced functionality out of a system. They can let you automate a lot of your processes. With Github, we can customize the events where this occurs. So for instance, we can trigger a request whenever someone creates a pull request.

In this article, we’ll set up a very simple server that will do just that. When someone opens a new PR, we’ll add a comment saying we’ll take a look at the pull request soon. We’ll also have the comment tag our account so we get a notification.

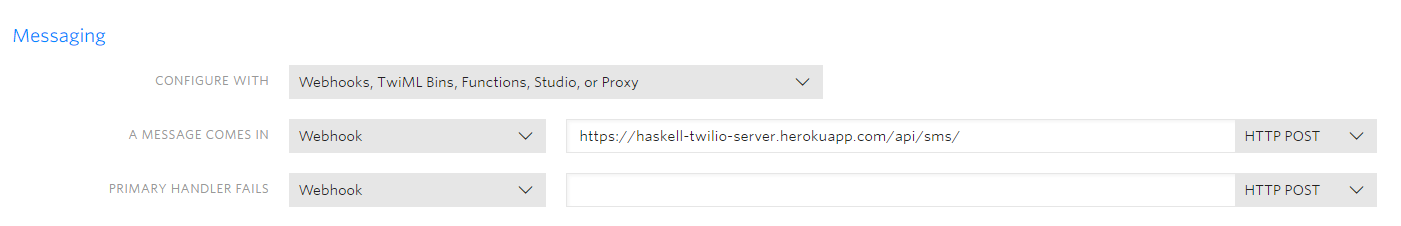

The Github part of this is easy. We go to the settings for our repository, and then find the “Webhooks” section. We’ll add a webhook for custom events, and we’ll only check the box next to “Pull Requests”. We’ll point it at the URL of a server that we’ll put up on Heroku, hitting the /api/hook endpoint.

Building Our Server

First let’s make a data type for a Github request. This will be a simple two-constructor type. Our first constructor will contain information about an opened pull request. We’ll want to get the user’s name out of the request object, as well as the URL for us to send our comment to. We’ll also have an Other constructor for when the request isn’t about an open pull request.

data GithubRequest =

  GithubOpenPRRequest Text Text | -- User's name, comments URL

  GithubOtherRequest

  deriving (Show)

So we need a simple server that listens for requests on a particular endpoint. As we have in the past, we’ll use Servant for this process. Our endpoint type will use our desired path. Then it will also take a request body with our GithubRequest. We’ll listen for a post request, and then return a Text as our result, to help debug.

type ServerAPI = "api" :> "hook" :>

  ReqBody '[JSON] GithubRequest :> Post '[JSON] Text

Now we need to specify a FromJSON instance for our request type. Using the documentation, we’ll find a few fields we need to read to make this happen. First, we’ll check that, indeed, this request has a pull request section and that its action is “opened”. If these aren’t there, we’ll return Other:

instance FromJSON GithubRequest where

  parseJSON = withObject "GithubRequest" $ \o -> do

    (action :: Maybe Text) <- o .:? "action"

    prSectionMaybe <- o .:? "pull_request"

    case (action, prSectionMaybe) of

      (Just "opened", Just prSection :: Maybe Value) -> do

        …

      _ -> return GithubOtherRequest

Now we can fetch the user section and the comments URL from the pull_request section. We do this with a function on a Data.Aeson object like so:

  where

    fetchUserAndComments o' = do

      uSection <- o' .: "user"

      commentsURL <- o' .: "comments_url"

      return (uSection, commentsURL)

Note we want comments_url, NOT review_comments_url! We want to leave a single comment, rather than performing a full review of this PR. It was VERY annoying to figure out that the documentation covers this under the Issues section, NOT the section on pull requests! Once we get the user section and comments URL, we need one more step. We’ll get the user name out of the section, and we’ll return our final request!

instance FromJSON GithubRequest where

  parseJSON = withObject "GithubRequest" $ \o -> do

    (action :: Maybe Text) <- o .:? "action"

    prSectionMaybe <- o .:? "pull_request"

    case (action, prSectionMaybe) of

      (Just "opened", Just prSection :: Maybe Value) -> do

        (userSection :: Value, commentsURL :: Text) <-

          withObject "PR Section" fetchUserAndComments prSection

        userName <-

          withObject "User Section" (\o' -> o' .: "login") userSection

        return $ GithubOpenPRRequest userName commentsURL

      _ -> return GithubOtherRequest

Handling the Endpoint

Now we need a handler function for our endpoint. This handler will pattern match on the type of request and return a debugging string. If we have indeed found a request to open the PR, we’ll also want to call another IO function that will add our comment:

hookHandler :: GithubRequest -> Handler Text

hookHandler GithubOtherRequest =

  return "Found a non-PR opening request."

hookHandler (GithubOpenPRRequest userName commentsURL) = do

  liftIO $ addComment userName commentsURL

  return $ "User: " <> userName <>

    " opened a pull request with comments at: " <> commentsURL

addComment :: Text -> Text -> IO ()

...

Adding a Comment

In order to add a comment to this pull request, we’ll need to hit the Github API with our own request. Again, we’ll do this using Servant’s magic! First, let’s make another API type to represent Github’s own developer API. Since we’re getting the full comments URL as part of our request, we don’t need any path components here. But we will need to authenticate using BasicAuth:

type GithubAPI = BasicAuth "GithubUser" () :>

  ReqBody '[JSON] GitPRComment :> Post '[JSON] ()

Our GitPRComment will only need a Text for the body of the comment. So let’s make a simple newtype wrapper and add a ToJSON instance for it:

newtype GitPRComment = GitPRComment Text

instance ToJSON GitPRComment where

  toJSON (GitPRComment body) = object [ "body" .= body ]

We can create a client function for this API now using the magic client function from Servant.Client:

sendCommentClient :: BasicAuthData -> GitPRComment -> ClientM ()

sendCommentClient = client (Proxy :: Proxy GithubAPI)

Now to build our commenting function, we’ll start by building the auth data.

import qualified Data.ByteString.Char8 as BSC

...

addComment :: Text -> Text -> IO ()

addComment userName commentsURL = do

  gitUsername <- getEnv "GITHUB_USERNAME"

  gitPassword <- getEnv "GITHUB_PASSWORD"

  let authData = BasicAuthData (BSC.pack gitUsername)

                               (BSC.pack gitPassword)

  ...

Now we’ll set up our client environment using the comments URL:

addComment :: Text -> Text -> IO ()

addComment userName commentsURL = do

  ...

  manager <- newManager tlsManagerSettings

  baseUrl <- parseBaseUrl (Data.Text.unpack commentsURL)

  let clientEnv = mkClientEnv manager baseUrl

  ...

We’ll add a simple function taking our admin’s username and composing the body of the comment. We’ll tag ourselves as well as the user who opened the PR:

addComment :: Text -> Text -> IO ()

addComment userName commentsURL = do

  …

  where

    commentBody adminName = GitPRComment $

      "Thanks for posting this @" <> userName <>

      "! I'll take a look soon! - @" <> adminName

Now we wrap everything together by making our client call. And that’s it!

addComment :: Text -> Text -> IO ()

addComment userName commentsURL = do

  gitUsername <- getEnv "GITHUB_USERNAME"

  gitPassword <- getEnv "GITHUB_PASSWORD"

  let authData = BasicAuthData (BSC.pack gitUsername)

                               (BSC.pack gitPassword)

  manager <- newManager tlsManagerSettings

  baseUrl <- parseBaseUrl (Data.Text.unpack commentsURL)

  let clientEnv = mkClientEnv manager baseUrl

  -- getEnv gives us a String, but commentBody works with Text

  _ <- runClientM (sendCommentClient

                     authData

                     (commentBody (Data.Text.pack gitUsername)))

                  clientEnv

  return ()

  where

    commentBody = ...

Conclusion

Services like Github do their best to provide a good user experience to all their normal users. But if you get a little bit advanced, you can often customize their behavior to a great degree! Notice how important it is to know how to set up a simple server. This gives you a lot of freedom to manipulate the system and add your own behaviors. It’s a cool perk of learning these specific web skills. If you want to see the full code I wrote for this article, check it out on this Github repo!

To learn about more web skills that can magnify your programming ability, check out our Haskell Web Skills Series. It’ll walk you through some different Haskell libraries, like Persistent for databases, and Servant for web servers. You can also download our Production Checklist. It’ll give you a lot more ideas of libraries to use to enhance your Haskell experience!

BayHac 2018!

This week we’ll be taking a quick breather from our work on deploying our Haskell code. Instead, I’ll be giving a brief overview of BayHac, the Bay Area Haskell Hackathon, which took place a week ago from April 27-29. It was hosted once again by Formation (formerly Takt). Many Haskellers from the Bay Area and beyond met up, hacked and discussed many ideas.

Presentations

This year there was a larger focus on projects and hacking, and less on presentations. But there were still a few short talks each morning. I was only able to make one set of these, but that included some very interesting topics. A couple speakers discussed some of the theoretical aspects of Haskell’s containers. One went through the idea of free objects, a generalization of free monads as seen on this blog. Another speaker discussed ways to perform type-level validation within Postgres.

And speaking of databases, Travis Athougies gave an overview of his Beam database library. This library has some awesome semantics. It might force me to re-think my habit of defaulting to Persistent, so it's definitely worth a look!

Finally, I gave a short overview of some of the work I did last year with Tensorflow and dependent types. I’ll post a link to the presentation as soon as it’s up. But in the meantime, you can check out the full blog series to learn more!

Nix and HNix

I spent most of my time at the Hackathon trying to set up Nix, so that I could work on HNix. Nix is a functional package manager with incredible reliability. We could use it for any language in theory. But it shares many conceptual ideas with Haskell, so many Haskellers have adopted it. In particular, if you do frontend web programming with GHCJS, you’ll want to use Nix instead of Stack.

Several people at the Hackathon worked on HNix, a Haskell implementation of Nix. The work was well organized by John Wiegley. He put in a lot of time parceling out tasks that newcomers could contribute to the codebase.

Having a Windows laptop, I wasn’t able to contribute a whole lot to the project (Nix only runs on *nix systems). Instead, I let myself be a guinea pig to see if I could get Nix working on the Windows Subsystem for Linux. My efforts were unsuccessful, though Jonas Chevalier of Tweag insists it’s possible.

Codeworld

The last talk I saw came from Chris Smith, who gave an overview of Haskell Codeworld, an educational tool for math and programming. This project in particular caught my attention for a couple reasons. First, I’ve developed a passion for teaching Haskell to beginners and showing it’s not so hard. But even I tend not to focus on teaching Haskell as a first language. Chris’s idea is to teach Haskell to middle school kids who have never written code before.

His primary intention is to teach mathematics. Since Haskell has such a mathematical view of programming, it's a natural fit. He stated an interesting finding from an academic study. Children’s success in calculus depends a lot on their understanding of functions. Those who view functions as a mere series of steps to compute tend to struggle. But there's another more correct way to view functions. This idea is that functions express a fundamental relationship between sets. Those who view functions this way have a better chance of flourishing.

This research suggests Haskell is great as a primary programming language for kids! It matches the latter definition, while object oriented languages teach the former idea. Codeworld is a cool project, so check it out and see if you can help in any way!

Conclusion

Next week, we’ll conclude our series on deploying Haskell code by looking at Github’s API. It has some neat little tricks we can play to enhance our development experience.

Events like BayHac show that there are a lot of different ways to get involved in the Haskell community. See if you can find one in your city! And don’t worry if you’ve never written Haskell before! The Haskell community is very welcoming! Check out our Beginners Checklist to get started!

Dockerizing our Haskell App

Last week, we explored how to automate the deployment of our Haskell app with Circle CI. Every time we push a branch, Circle CI will load our code onto a container, build it, and run any tests we have. We also configured Heroku to deploy our new code whenever the master branch passed the build.

Our system had a couple weaknesses though. First, it was a bit of a hassle that our configuration required us to download the Stack program every time. Setting up Stack required about half the commands in our Circle config! The second weakness was that we built our code twice on each deploy. First, the Circle container would build it. Then Heroku would also compile it. This week, we’ll solve these problems using Docker images.

Using Docker Images

Last week we used a vanilla Circle container. We can start simplifying our configuration by using a pre-existing Docker image instead. Remember the start of our build_project section? It looked like this:

jobs:

build_project:

    machine: true

The machine keyword tells Circle to use an unconfigured Linux box. Since it had nothing on it, we needed to download and install Stack ourselves. However, Circle also allows us to use Docker images as the starting point for our machines. We’ll use an image from the Haskell Docker repository. These each have a particular version of GHC installed, and the later ones also come with Stack. These images lag behind GHC releases a little bit. So we’ll use GHC 8.0.2, and update our stack.yaml file to use LTS 9.21, the latest version for this GHC. Here’s how we write our Circle configuration to use this image:

jobs:

build_project:

docker:

      - image: haskell:8.0.2

Now we can radically simplify the rest of the file! Stack and GHC will be pre-installed, so we can remove all the steps related to those. We’ll also remove the caching step on the installed Stack directory. This leaves us with the following configuration file:

version: 2

jobs:

  build_project:

    docker:

      - image: haskell:8.0.2

    steps:

      - checkout

      - restore_cache:

          keys:

            - stack-work-{{checksum "stack.yaml"}}-{{checksum "HaskellTestApp.cabal"}}

      - run: stack setup

      - run: stack build

      - run: stack test

      - save_cache:

          key: stack-work-{{checksum "stack.yaml"}}-{{checksum "HaskellTestApp.cabal"}}

          paths:

            - ".stack-work"

workflows:

  version: 2

  build_and_test:

    jobs:

      - build_project

Making Our Own Docker Image

Now our builds are a little more efficient, but we haven’t solved the bigger problem in our system. In the rest of this article, we’ll use Docker to create a new image with our code built on it. Then we can push this image to Heroku instead of re-building our code with the buildpack.

To do this, we’ll fold some of the existing Circle configuration work into Docker itself. To start, we need to define a Dockerfile at the root of our project. This file specifies the commands Docker needs to run to create an image with our code and run the server. Here’s what ours looks like:

# Use the existing Haskell image as our base

FROM haskell:8.0.2

# Checkout our code onto the Docker container

WORKDIR /app

ADD . /app

# Build and test our code, then install the “run-server” executable

RUN stack setup

RUN stack build --test --copy-bins

# Expose a port to run our application

EXPOSE 80

# Run the server command

CMD ["run-server"]

The first important part is that we’ll “inherit” from the Haskell Docker image we were using on Circle with FROM. Then we’ll run our setup command, and build the project. We’ll pass arguments to build that will run the tests, and install our executables. Then, we’ll run the server off the container.

Build our Docker Image on Circle

To actually save a Docker image on a remote repository, we’ll need to make a Docker account. We don’t need to create our own repository, since we’ll end up storing our image on a Heroku repository.

We no longer need to run Stack commands as part of our Circle configuration. Docker handles them for us. We can go back to using a normal machine, as Docker also handles using the Haskell image. Here’s the core of our configuration on Circle:

    jobs:
      build_project:
        machine: true
        steps:
          - checkout
          - run: |
              echo $DOCKER_PASSWORD | docker login \
                --username=$DOCKER_USERNAME \
                --password-stdin
          - run: |
              docker pull \
                registry.heroku.com/$HEROKU_APP/web:$CIRCLE_BRANCH
          - run: |
              docker build -t \
                registry.heroku.com/$HEROKU_APP/web:$CIRCLE_BRANCH .
          - run: |
              docker push \
                registry.heroku.com/$HEROKU_APP/web:$CIRCLE_BRANCH

The key commands are the four docker commands. First, we log into our Docker account, passing our credentials in through environment variables. Next, we pull the existing image off the Heroku image repository tied to our app. We don’t need to do anything to set this repository up, but we’ll need to configure the app to use it below. Then we build our image from the Dockerfile in the current directory and tag it with our current branch name. As long as this succeeds, we push the new image back to the Heroku registry.
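
One thing to watch for when tagging with the branch name is that Docker tags may only contain letters, digits, underscores, periods and hyphens. A branch called something like feature/login would not work as a tag. Here is a minimal sketch of one way around that. The image_name helper is our own invention, not something Circle or Heroku provides, and swapping invalid characters for a hyphen is just one possible convention.

```shell
#!/bin/bash
# image_name is a hypothetical helper (not part of Circle, Docker or Heroku).
# It builds the registry path used in the config above and replaces any
# character that is not allowed in a Docker tag with "-".
image_name() {
  local app="$1"
  local branch="$2"
  local tag
  # printf avoids a trailing newline, which tr would otherwise turn into "-".
  tag="$(printf '%s' "$branch" | tr -c 'A-Za-z0-9_.-' '-')"
  echo "registry.heroku.com/${app}/web:${tag}"
}

# Example with made-up values for the app and branch names.
image_name "my-app" "feature/login"
# prints registry.heroku.com/my-app/web:feature-login
```

In the Circle config, we could compute this name once from $HEROKU_APP and $CIRCLE_BRANCH and pass the result to docker pull, docker build and docker push.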

Heroku Integration

To use this image on Heroku, we’ll need to update the “Deploy” section of our app again from the dashboard. Instead of using Circle CI, we’ll use the Heroku registry option. Now our successful builds will push our code up to our Heroku registry. Then Heroku updates our app automatically! Plus, there will now be no need for us to rebuild the code on Heroku!

There’s one more caveat though. To push and pull from the Heroku registry, we also need to log in to Heroku from our Circle machine. Circle CI version 2 doesn’t yet have built-in support for this, so it’s a little tricky. On our own machine, we would log in to Heroku using the CLI with the heroku login command. But we can’t feed that command our credentials through stdin the way we can with Docker’s login command.

But we can replicate the ultimate result of logging in with a little script. Logging into Heroku creates a file called ~/.netrc storing our credentials. We can write a script that outputs all that information like so:

    #!/bin/bash
    cat > ~/.netrc << EOF
    machine api.heroku.com
      login $HEROKU_LOGIN
      password $HEROKU_PASSWORD
    machine git.heroku.com
      login $HEROKU_LOGIN
      password $HEROKU_PASSWORD
    EOF
    heroku container:login

We run the final heroku container:login command to actually connect to the repository. Note that the $HEROKU_PASSWORD environment variable should use your Heroku API Key, NOT your Heroku password. We call the variable PASSWORD because the HEROKU_API_KEY environment variable is special. It can cause problems with the CLI to have it set prematurely.
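
If either variable is missing on Circle, the script above will happily write a file with empty credentials, and the failure only shows up later at login. As a rough sketch, we could guard against that. The require_env and write_netrc names below are hypothetical helpers of our own, and taking the target path as an argument is an assumption made so the function is easy to try out away from ~/.netrc.

```shell
#!/bin/bash
# require_env is a hypothetical helper: it reports every named environment
# variable that is unset or empty and returns non-zero if any is missing.
require_env() {
  local name
  local value
  local missing=0
  for name in "$@"; do
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing environment variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# write_netrc (also hypothetical) writes the same two entries as the script
# above to the file given as its argument, then makes it readable only by
# the current user.
write_netrc() {
  local target="$1"
  require_env HEROKU_LOGIN HEROKU_PASSWORD || return 1
  cat > "$target" << EOF
machine api.heroku.com
  login $HEROKU_LOGIN
  password $HEROKU_PASSWORD
machine git.heroku.com
  login $HEROKU_LOGIN
  password $HEROKU_PASSWORD
EOF
  chmod 600 "$target"
}
```

On Circle we would call write_netrc ~/.netrc and then run heroku container:login as before.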

With this script saved as setup_heroku.sh, we can call it from our Circle script like so:

    jobs:
      build_project:
        machine: true
        steps:
          - checkout
          - run: bash .circleci/setup_heroku.sh
          ...

Now everything should work! Our app should be automatically deployed to Heroku without re-compilation!

Conclusion

We’ve now made our deployment process a lot more efficient. First we used a Docker Haskell image to avoid manually downloading Stack. Then we created our own Docker image off of this, and pushed it to a registry. Once we connected our Heroku app to this registry, we no longer needed to compile it there. Next week, we’ll conclude this series by using a similar process to push our code to AWS instead of Heroku.

Now that you can deploy your code, you can make whatever Haskell apps you want! Download our Production Checklist to get some more ideas for libraries you can use in your apps.

And if you’ve never used Haskell before, download our Beginners Checklist to get started!

Deploying Confidently: Haskell and Circle CI

In last week’s article, we deployed our Haskell code to the cloud using Heroku. Our solution worked, but the process was also very basic and very manual. Let’s review the steps we would take to deploy code on a real project with this approach.

- Make a pull request against master branch

- Merge code into master

- Pull master locally, run tests

- Manually run git push heroku master

- Hope everything works fine on Heroku

This isn’t a great approach. Wherever there are manual steps in our development process, we’re likely to forget something. This will almost always come around to bite us at some point. In this article, we’ll see how we can automate our development workflow using Circle CI.

Getting Started with Circle

To follow along with this article, you should already have your project stored on GitHub. As soon as you have this, you can integrate with Circle easily. Go to the Circle Website and log in with GitHub. Then go to “Add Project”. You should see all your personal repositories. Clicking your Haskell project should allow you to integrate the two services.

Now that Circle knows about our repository, it will try to build whenever we push code up to GitHub. But we have to tell Circle CI what to do once we’ve pushed our code! For this step, we’ll need to create a config file and store it as part of our repository. Note we’ll be using Version 2 of the Circle CI configuration. To define this configuration we first create a folder called .circleci at the root of our repository. Then we make a YAML file called config.yml.

In Circle V2, we specify “workflows” for the Circle container to run through. To keep things simple, we’ll limit our actions to take place within a single workflow. We specify the workflows section at the bottom of our config:

    workflows:
      version: 2
      build_and_test:
        jobs:
          - build_project

Now at the top, we’ll again specify version 2, and then lay out a bare-bones definition of our build_project job.

    version: 2
    jobs:
      build_project:
        machine: true
        steps:
          - checkout
          - run: echo "Hello"

The machine section indicates a default Circle machine image we’re using for our project. There’s no built-in Haskell machine configuration we can use, so we’re using a basic image. Then for our steps, we’ll first checkout our code, and then run a simple “echo” command. Let’s now consider how we can get this machine to get the Stack utility so we can actually go and build our code.

Installing Stack

So right now our Circle container has no Haskell tools. This means we'll need to do everything from scratch. This is a useful learning exercise. We’ll learn the minimal steps we need to take to build a Haskell project on a Linux box. Next week, we’ll see a shortcut we can use.

Luckily, the Stack tool handles most of our problems for us, but we first have to download it. So after checking out our code, we’ll run several different commands to install Stack. Here’s what they look like:

    steps:
      - checkout
      - run: wget https://github.com/commercialhaskell/stack/releases/download/v1.6.1/stack-1.6.1-linux-x86_64.tar.gz -O /tmp/stack.tar.gz
      - run: sudo mkdir /tmp/stack-download
      - run: sudo tar -xzf /tmp/stack.tar.gz -C /tmp/stack-download
      - run: sudo chmod +x /tmp/stack-download/stack-1.6.1-linux-x86_64/stack
      - run: sudo mv /tmp/stack-download/stack-1.6.1-linux-x86_64/stack /usr/bin/stack

The wget command downloads Stack off GitHub. If you’re using a different version of Stack than we are (1.6.1), you’ll need to change the version numbers of course. We’ll then create a temporary directory to unzip the actual executable to. Then we use tar to perform the unzip step. This leaves us with the stack executable in the appropriate folder. We’ll give this executable execute permissions, and then move it onto the machine’s path. Then we can use stack!
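
Since the version number shows up in several of these commands, it can help to derive the paths from a single variable. The following is only a sketch. The stack_url and stack_dir helpers are names we made up, and they assume other Stack releases follow the same naming pattern as the 1.6.1 URL above, which is worth checking on the releases page before relying on it.

```shell
#!/bin/bash
# stack_url and stack_dir are hypothetical helpers. They assume every
# release follows the naming pattern of the 1.6.1 download used above.
stack_url() {
  local version="$1"
  echo "https://github.com/commercialhaskell/stack/releases/download/v${version}/stack-${version}-linux-x86_64.tar.gz"
}

# Name of the directory the tarball unpacks to.
stack_dir() {
  local version="$1"
  echo "stack-${version}-linux-x86_64"
}

# Print the commands for the chosen version rather than running them,
# so this is safe to try on any machine.
STACK_VERSION="${STACK_VERSION:-1.6.1}"
echo "wget $(stack_url "$STACK_VERSION") -O /tmp/stack.tar.gz"
echo "sudo mv /tmp/stack-download/$(stack_dir "$STACK_VERSION")/stack /usr/bin/stack"
```

With this in place, changing Stack versions means changing one value instead of editing three separate steps.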

Building Our Project

Now we’ve done most of the hard work! From here, we’ll just use the Stack commands to make sure our code works. We’ll start by running stack setup. This will download whatever version of GHC our project needs. Then we’ll run the stack test command to make sure our code compiles and passes all our test suites.

    steps:
      - checkout
      - run: wget …
      ...
      - run: stack setup
      - run: stack test

Note that Circle expects our commands to finish with exit code 0. This means if any of them has a non-zero exit code, the build will be a “failure”. This includes our stack test step. Thus, if we push code that fails any of our tests, we’ll see it as a build failure! This spares us the extra steps of running our tests manually and “hoping” they’ll work on the environment we deploy to.
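
To make the exit code rule concrete, here is a small sketch of a runner that behaves the same way. It is not how Circle is implemented. The run_steps function is a hypothetical helper that runs each command in order and stops at the first one that exits with a non-zero code.

```shell
#!/bin/bash
# run_steps is a hypothetical helper that mimics the behavior described
# above: run each command in order, stop at the first non-zero exit code,
# and return that code to the caller.
run_steps() {
  local step
  local status
  for step in "$@"; do
    echo "running: $step"
    status=0
    sh -c "$step" || status=$?
    if [ "$status" -ne 0 ]; then
      echo "step failed with exit code $status: $step"
      return "$status"
    fi
  done
  echo "all steps passed"
}
```

Calling run_steps "stack setup" "stack test" locally would stop and report a failure as soon as a test suite fails, which is the same signal we get from a red build on Circle.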

Caching

There is a pretty big weakness in this process right now. Every Circle container we make starts from scratch. Thus we’ll have to download GHC and all the different libraries our code depends on for every build. This means you might need to wait 30-60 minutes to see if your code passes depending on the size of your project! We don’t want this. So to make things faster, we’ll tell Circle to cache this information, since it won’t change on most builds. We’ll take the following two steps:

- Only download GHC when stack.yaml changes (since the LTS might have changed). This involves caching the ~/.stack directory.

- Only re-download libraries when either stack.yaml or our .cabal file changes. For this, we’ll cache the .stack-work directory.

For each of these, we’ll make an appropriate cache key. At the start of our build process, we’ll attempt to restore these directories from the cache based on particular keys. As part of each key, we’ll use a checksum of the relevant file.

    steps:
      - checkout
      - restore_cache:
          keys:
            - stack-{{ checksum "stack.yaml" }}
      - restore_cache:
          keys:
            - stack-work-{{ checksum "stack.yaml" }}-{{ checksum "project.cabal" }}

If these files change, the checksum will be different, so Circle won’t be able to restore the directories. Then our other steps will run in full, downloading all the relevant information. Note that Circle matches restore keys by prefix, so we give the second key its own stack-work prefix to keep the first key from matching it. At the end of the process, we want to make sure we’ve saved these directories under the same keys. We do this with the save_cache command:

    steps:
      …
      - run: stack test
      - save_cache:
          key: stack-{{ checksum "stack.yaml" }}
          paths:
            - "~/.stack"
      - save_cache:
          key: stack-work-{{ checksum "stack.yaml" }}-{{ checksum "project.cabal" }}
          paths:
            - ".stack-work"

Now the next builds won’t take as long! There are other ways we can make our cache keys. For instance, we could use the Stack LTS as part of the key, and bump this every time we change which LTS we’re using. The downside is that there’s a little more manual work required. But this work won’t happen too often. The positive side is that we won’t need to re-download GHC when we add extra dependencies to stack.yaml.
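
To see why a checksum makes a good cache key, we can imitate the idea in a few lines of shell. This is only an illustration. Circle computes its own checksums, and the file_checksum and cache_key helpers here are hypothetical names that use the standard cksum tool instead.

```shell
#!/bin/bash
# file_checksum and cache_key are hypothetical helpers for illustration.
# Circle has its own checksum function; cksum is used here only because it
# is available on any POSIX system.
file_checksum() {
  # cksum prints "<crc> <size>" when reading stdin; join both with "-".
  cksum < "$1" | awk '{ print $1 "-" $2 }'
}

# Join a prefix with the checksum of every file passed after it.
cache_key() {
  local key="$1"
  shift
  local file
  for file in "$@"; do
    key="${key}-$(file_checksum "$file")"
  done
  echo "$key"
}
```

Running cache_key stack stack.yaml twice gives the same key as long as the file is untouched, so a cache saved under it can be found again. Any edit to the file produces a different key, which forces a fresh download. Passing two files, as in cache_key stack-work stack.yaml project.cabal, gives a key that changes when either one does.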

Deploying to Heroku

Last but not least, we’ll want to actually deploy our code to Heroku every time we push to the master branch. Heroku makes it very easy for us to do this! First, go to the app dashboard for Heroku. Then find the Deploy tab. You should see an option to connect with GitHub. Use it to connect your repository. Then make sure you check the box that indicates Heroku should wait for CI. Now, whenever your build successfully completes, your code will get pushed to Heroku!

Conclusion

You might have noticed that there’s some redundancy with our approaches now! Our Circle CI container will build the code. Then our Heroku container will also build the code! This is very inefficient, and it can lead to deployment problems down the line. Next week, we’ll see how we can use Docker in this process. Docker fully integrates with Circle V2. It will simplify our Circle config definition. It will also spare us from needing to rebuild all our code on Heroku again!

With all these tools at your disposal, it’s time to finally build that Haskell app you always wanted to! Download our Production Checklist to learn some cool libraries you can use!

If you’ve never programmed in Haskell before, hopefully you can see that it’s not too difficult to use! Download our Haskell Beginner’s Checklist and get started!

For All the World to See: Deploying Haskell with Heroku